Thanks to our amazing team of data scientists! After a bit of cleaning and hours of modeling based on historical data spanning 2015 years, we have determined that Christmas 2015 will happen between Thursday December 24th and Friday December 25th with a credible interval of 90%.

A royal tradition: the Christmas message

One of the many benefits of that prediction is that we have a precise idea of when Queen Elizabeth will deliver the Royal Christmas Message. It all goes back to 1932 when King George V delivered the first radio broadcast Christmas message to the Commonwealth. With an estimated audience of 20 million people across Australia, Canada, India, Kenya, South Africa, and of course the United Kingdom, it quickly became a tradition.

After her accession on 6th February 1952, Queen Elizabeth kept the tradition while keeping pace with technology: the first live Christmas broadcast on television came in 1957, and the Royal Channel launched on YouTube in 2007.

To the joy of data scientists and natural language processing enthusiasts, all of the Queen's Christmas messages can be found on the official website of the British Monarchy.

While the 63 royal Christmas messages are definitely not big data, we can still gain some interesting insights by applying natural language processing (NLP) techniques. More precisely, we will talk about topic modeling today. Topic models are statistical models that aim to discover the 'hidden' thematic structure of a collection of documents, i.e. identify possible topics in our corpus.

For that, the data scientist's NLP toolbox contains many powerful algorithms: LDA (Latent Dirichlet Allocation), its nonparametric generalization HDP (Hierarchical Dirichlet Process), and NMF (non-negative matrix factorization) are among the best known. While both LDA and HDP are probabilistic Bayesian models that quantify uncertainty about the assignment of words to topics, NMF takes a deterministic approach.

Today we will focus on understanding NMF as we will apply it to the dataset consisting of the Queen's Christmas messages.

Getting the data merry (or ready, whatever)

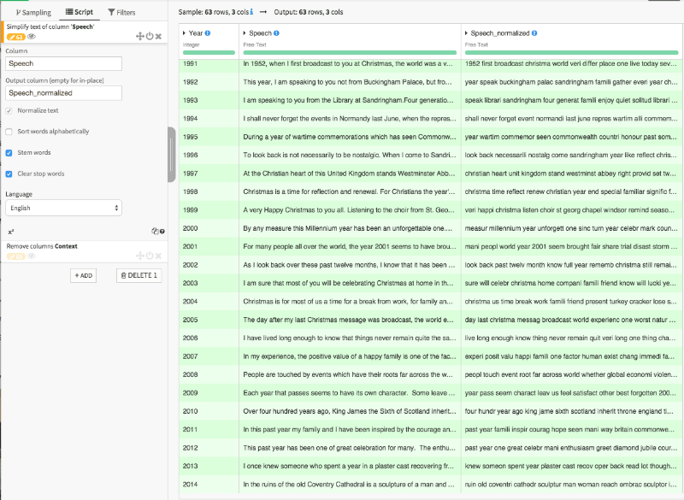

The first thing we need to do is normalize the text by removing the punctuation and converting it to lower case. We also remove all the stop words and stem the remaining words. Thankfully, all of this can easily be done within a preparation script.

Simplify Text Processor

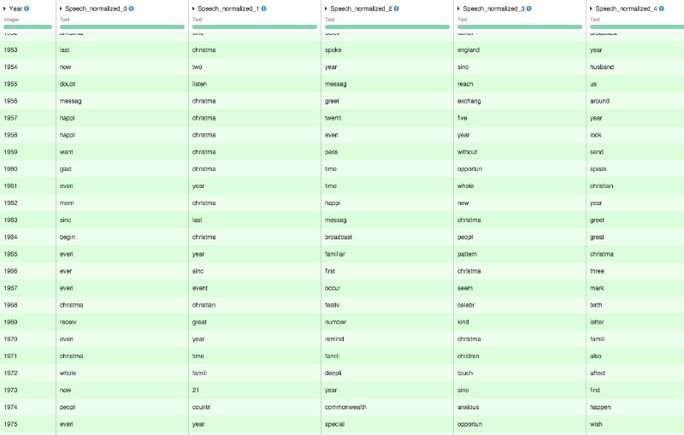
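For readers who want to see the same steps outside of a preparation script, here is a minimal sketch in plain Python. The tiny stop-word list and the `crude_stem` helper are illustrative assumptions, not what the processor above does; a real pipeline would use a full stop-word list and a proper stemmer such as Porter's.

```python
import string

# Tiny illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "are", "we", "our", "for", "this"}


def crude_stem(word):
    """Very rough suffix stripping; a stand-in for a real stemmer."""
    for suffix in ("ing", "ed", "es", "s"):
        # Only strip when at least three characters would remain.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def normalize(text):
    """Lower-case, strip punctuation, drop stop words, then stem."""
    text = text.lower()
    text = text.translate(str.maketrans("", "", string.punctuation))
    tokens = text.split()
    return [crude_stem(token) for token in tokens if token not in STOP_WORDS]


print(normalize("We are celebrating the nations of the Commonwealth!"))
# ['celebrat', 'nation', 'commonwealth']
```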

This leads us to the following document-term dataset:

We now have a document-term dataset on which we can apply a tf-idf weighting followed by a unit-length normalization. Tf-idf weighs each term by how often it appears in a document, discounted by how many documents of the corpus contain it... and it is a great way to transform text data into numerical data.
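As a rough illustration of this weighting step, the sketch below applies scikit-learn's `TfidfTransformer` to a made-up count matrix. The three terms and their counts are invented for the example, and scikit-learn's default smoothed idf is only one of several tf-idf variants, so it may differ from the one used here.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfTransformer

# Hypothetical document-term counts: rows are messages, columns are terms.
terms = ["commonwealth", "peace", "christmas"]
counts = np.array([
    [3, 0, 1],
    [0, 2, 1],
    [1, 1, 1],
])

# norm="l2" rescales every document vector to unit length.
transformer = TfidfTransformer(norm="l2")
weights = transformer.fit_transform(counts).toarray()

print(np.round(weights, 3))
print(np.linalg.norm(weights, axis=1))  # each row has length 1
```

In this toy matrix "christmas" appears in every document, so it gets the lowest idf and ends up weighted below rarer terms with the same count.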

What is non-negative matrix factorization (NMF) anyway?

As the name suggests, a non-negative matrix factorization is an approximation of a non-negative matrix as the product of two non-negative matrices. That's it! More concretely, our document-term matrix will be approximated as the product of a document-topic matrix and a topic-term matrix. Of course, there is an implementation of NMF in scikit-learn.

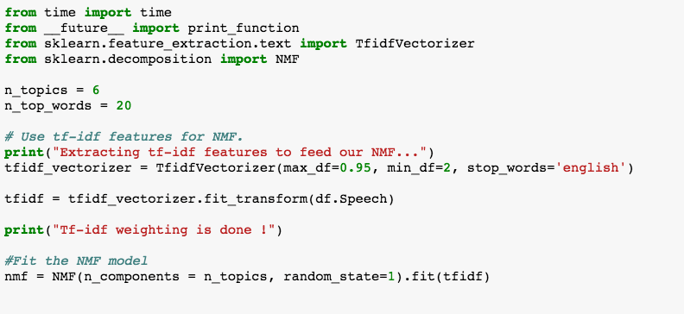
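To make the factorization concrete, here is a minimal NumPy sketch based on multiplicative update rules, one common way to fit NMF (not necessarily what scikit-learn does by default). The function name `nmf_multiplicative`, its parameters, and the toy matrix `V` are assumptions made for the illustration.

```python
import numpy as np


def nmf_multiplicative(V, k, n_iter=200, eps=1e-9, seed=0):
    """Approximate a non-negative matrix V as W @ H with W, H >= 0."""
    rng = np.random.default_rng(seed)
    n_docs, n_terms = V.shape
    W = rng.random((n_docs, k)) + eps   # document-topic matrix
    H = rng.random((k, n_terms)) + eps  # topic-term matrix
    for _ in range(n_iter):
        # Multiplying by non-negative ratios keeps both factors non-negative.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H


# Toy document-term matrix with two obvious blocks of co-occurring terms.
V = np.array([
    [2.0, 1.0, 0.0, 0.0],
    [4.0, 2.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 3.0],
    [0.0, 0.0, 2.0, 6.0],
])

W, H = nmf_multiplicative(V, k=2)
error = np.linalg.norm(V - W @ H)
print("document-topic shape:", W.shape)
print("topic-term shape:", H.shape)
print("reconstruction error:", error)
```

The number of columns of `W` (and rows of `H`) is the number of topics, which is the one parameter we have to choose ourselves.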

NMF in only a few lines of code


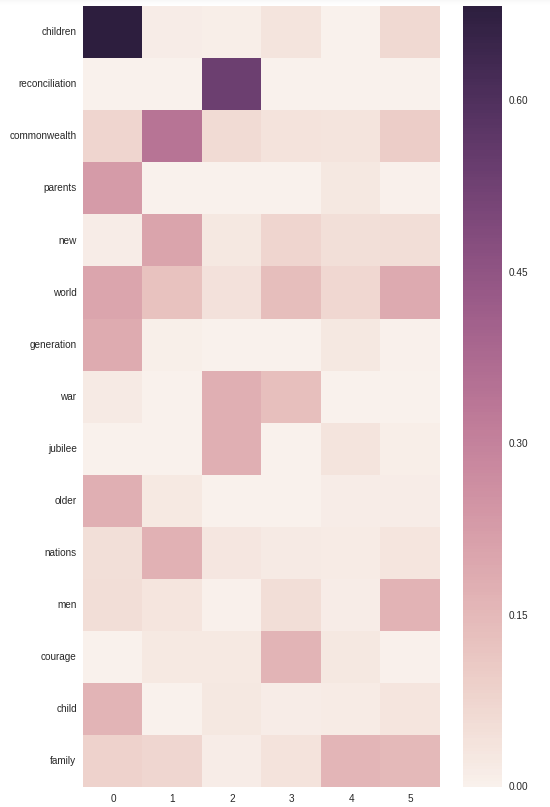
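For reference, a sketch of what such a script might look like with scikit-learn is given below. It is not the exact code from the screenshot: the four short "messages" are invented stand-ins for the real corpus, and the `top_terms` helper and the parameter values are assumptions.

```python
from sklearn.decomposition import NMF
from sklearn.feature_extraction.text import TfidfVectorizer

# Invented stand-ins for the (already normalized) Christmas messages.
docs = [
    "commonwealth nations canada new zealand unity",
    "commonwealth countries freedom unity development",
    "war peace reconciliation conflict reminder",
    "peace reconciliation war jubilee celebrations",
]

vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(docs)

model = NMF(n_components=2, init="nndsvd", random_state=0, max_iter=500)
W = model.fit_transform(X)  # document-topic matrix
H = model.components_       # topic-term matrix

# Recover the terms in column order from the fitted vocabulary.
terms = sorted(vectorizer.vocabulary_, key=vectorizer.vocabulary_.get)


def top_terms(H, terms, n_top=3):
    """Return the n_top highest-weighted terms for every topic."""
    return [[terms[i] for i in row.argsort()[::-1][:n_top]] for row in H]


for idx, words in enumerate(top_terms(H, terms)):
    print(f"Topic #{idx}: {' '.join(words)}")
```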

Looking at the list of the top-ranked words per topic gives a good idea of how to name each topic. For instance, I'll let you guess what these two topics are about:

Topic #2:

commonwealth new nations canada world year great zealand countries

freedom britain peoples unity development

Topic #3:

reconciliation war jubilee games conflict king celebrations peace

year britain sculpture needed reminder america

We can then analyze the topic-term dataset and document-topic dataset as heatmaps. This will give us an idea of the distribution of words per topic and topics per document.

Heatmap of the topic-term dataset

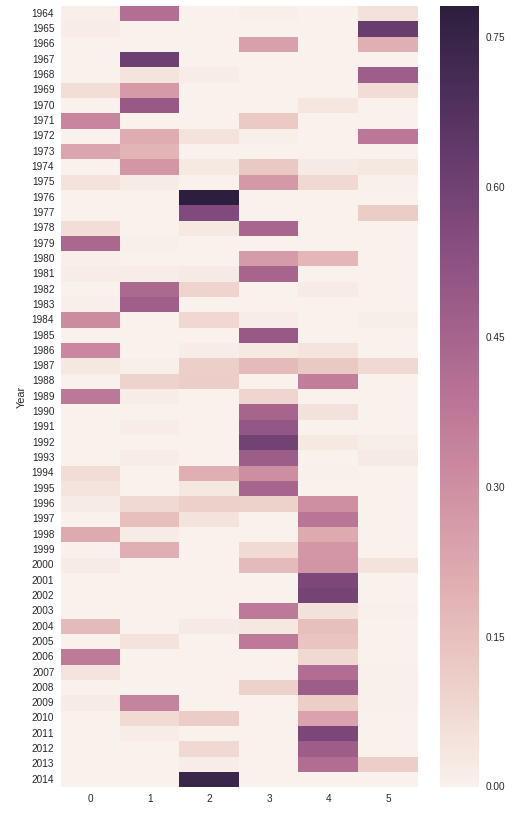

And here is the document-topic heatmap:

Heatmap of the document-topic dataset

Wait... How to choose the number of topics?

There is no clear heuristic for selecting the number of topics k. However, after a careful inspection of the top-ranked terms per topic (i.e. you keep going until you cannot interpret the topics anymore), and a lot of trial and error, we reached the most conclusive results when setting the number of topics to 6. Note that HDP could automatically determine the most appropriate number of topics... but it will still be up to you to make sense of them!
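The trial-and-error loop can be sketched as follows, assuming scikit-learn's `NMF` and a random non-negative matrix standing in for the real tf-idf data. The reconstruction error is printed only as a companion to manual inspection: it generally shrinks as k grows, so it cannot pick k on its own.

```python
import numpy as np
from sklearn.decomposition import NMF

# Random non-negative stand-in for the tf-idf document-term matrix.
rng = np.random.default_rng(0)
X = rng.random((12, 20))

errors = {}
for k in range(2, 7):
    model = NMF(n_components=k, init="nndsvd", random_state=0, max_iter=1000)
    model.fit(X)
    errors[k] = model.reconstruction_err_
    print(f"k={k}: reconstruction error {errors[k]:.3f}")
    # At this point you would also print the top-ranked terms per topic
    # and check whether each topic can still be interpreted.
```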

Exploring topics and messages

Let's just focus on exploring three topics for now. We see that the Christmas messages of 1976 and 2014 refer to the same topic: Reconciliation. Indeed, 1976 was America's bicentenary and 2014 was the hundredth anniversary of the start of World War I. On those two occasions, the Queen delivered a message of peace and reconciliation.

Of course, the Commonwealth is one of the most recurrent topics in the Queen's addresses throughout the years: from 1953, when the Queen went on a six-month tour of the Commonwealth, to the sixtieth anniversary of the Commonwealth in 2009. As for messages delivered after tragic events, like the terror attacks in London in 2005, they all appeal to "courage" and "help".

That's it, folks! Next time, we will develop a Bayesian model to assess the probability that you'll receive a Christmas gift given that you have been a good data scientist.