This is a guest article from Kevin Petrie, VP of Research at BARC, a global research and consulting firm focused on data and analytics.

As companies mature, they are taking a more sober view of the opportunity that agentic AI represents. In fact, only 27% of executives say they would trust fully autonomous agents for enterprise use, down from 43% one year ago, according to a recent Capgemini survey. They recognize the need to manage risks before reaping the innovation benefits of agentic AI. This requires a robust governance program, supported by effective technology.

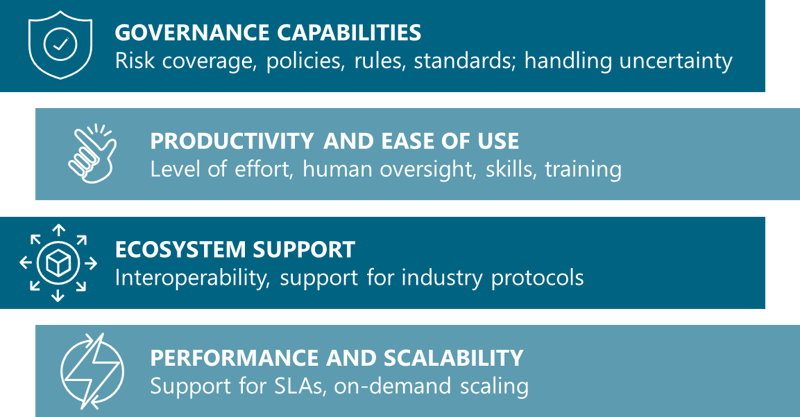

This blog, the final in a three-part series, defines criteria and recommends questions for data and AI teams to ask when evaluating AI governance tools. It builds on the first blog, which explored how to control AI model and agent risks, and the second, which described how policies, rules, and standards put that control into practice. Our evaluation criteria include governance capabilities, ease of use, ecosystem support, and performance and scalability. We focus on the new domains of models and agents rather than the heritage domain of data governance. Although the market is still young, we recommend setting a high bar for commercial tools.

Evaluation Criteria for Agentic AI Governance Tools


1. Governance Capabilities

The primary criterion, of course, is governance. Your team must assess the risks, policies, rules, and standards that these products support, and how they handle the uncertainty of AI governance.

Which of the following risks does the product address? What controls and techniques does it use to address them?

Your governance team should have a prioritized list of AI risks, including some or all of the following. Make sure the candidate product addresses your primary risks:

- Data: Accuracy, privacy, bias, intellectual property, and regulatory compliance

- Models: Black-box logic and toxicity

- Agents: Misguided decisions, damaging actions, and subversive intentions
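One way to make the "primary risks" check concrete is to keep your prioritized list as data and compare it against what each vendor says it covers. The sketch below is illustrative only: the risk names, priority scores, and the `vendor_coverage` set are hypothetical placeholders, not taken from any product.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Risk:
    domain: str    # "data", "models", or "agents"
    name: str      # e.g., "privacy" or "damaging actions"
    priority: int  # 1 = highest priority for your organization

# Hypothetical prioritized risk list drawn from the categories above.
RISKS = [
    Risk("data", "privacy", 1),
    Risk("data", "bias", 2),
    Risk("models", "toxicity", 1),
    Risk("agents", "damaging actions", 1),
    Risk("agents", "subversive intentions", 3),
]

def uncovered_primary_risks(risks, covered, max_priority=1):
    """Return risks at or above the priority cutoff that the product does not cover.

    `covered` is a set of (domain, name) pairs the vendor claims to address.
    """
    return [
        r for r in sorted(risks, key=lambda r: (r.priority, r.domain, r.name))
        if r.priority <= max_priority and (r.domain, r.name) not in covered
    ]

# Hypothetical coverage claimed by one candidate product.
vendor_coverage = {("data", "privacy"), ("models", "toxicity")}
for risk in uncovered_primary_risks(RISKS, vendor_coverage):
    print(f"Gap: {risk.domain} / {risk.name}")
```

Raising `max_priority` widens the check from primary risks to secondary ones, which is useful when two candidates tie on the top tier.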

Which of the following governance policies does the product help enforce?

An AI governance product should also address your primary policies for risk mitigation, including some or all of the following:

- Data: Stewardship, authorization, and auditing/documentation

- Models: Transparency/explainability, responsible output, and continuous oversight

- Agents: Safe decisions, accountable actions, and permissible behavior

What rules and standards does it enforce for each policy?

Rules and standards provide the technical structure that enforces governance policies. They vary by business objectives, industry, technical environment, existing processes, employee skill sets, and culture. Your team should have a draft set of AI rules and standards, and your candidate product should support them comprehensively.
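To see whether a candidate product can express your rules, it helps to write a few of them down as testable checks first. The minimal sketch below maps two of the policies listed earlier to rules evaluated against a proposed agent action. Every specific in it (the $500 refund threshold, the dataset names, the `agent_id` field) is a hypothetical example, not a recommendation from this series or any vendor.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[dict], bool]  # returns True when the action complies

# Hypothetical rules that put two of the policies above into practice.
POLICIES = {
    "Data: authorization": [
        Rule("dataset on agent allowlist",
             lambda a: a.get("dataset") in a.get("allowed_datasets", set())),
    ],
    "Agents: accountable actions": [
        Rule("action carries an agent ID", lambda a: bool(a.get("agent_id"))),
        Rule("refunds over $500 need human approval",
             lambda a: a.get("refund_usd", 0) <= 500 or a.get("approved_by") is not None),
    ],
}

def violations(action: dict) -> list[str]:
    """List 'policy: rule' strings for every rule the proposed action fails."""
    return [
        f"{policy}: {rule.name}"
        for policy, rules in POLICIES.items()
        for rule in rules
        if not rule.check(action)
    ]

action = {
    "agent_id": "support-bot-7",
    "dataset": "crm.customers",
    "allowed_datasets": {"crm.customers"},
    "refund_usd": 900,
}
print(violations(action))
```

If a product cannot represent rules like these, or can only do so through custom code, that is a useful finding for your evaluation.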

Evaluate how each product supports your governance policies, rules, and standards.

How does this product handle the inherent uncertainty of governing models, agents, and unstructured data?

To handle the nuances of agentic AI, your governance product must do more than validate table values. It must help your team interpret ambiguous signals (e.g., the accuracy of an image, the logic of a machine learning (ML) model, or the sentiment of an agent-customer interaction) in a reasonable and consistent fashion. Users need to configure and adjust output ranges, alert thresholds, and intervention techniques. They also need to label outputs and share notes as they collaborate.
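The configuration described above can be sketched as score bands that map an ambiguous signal to a graded intervention, plus a review record where people attach labels and notes. This assumes an upstream classifier (not shown) already produces a score between 0 and 1; the signal name, the 0.4 and 0.8 thresholds, and the allow/review/block scheme are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class ThresholdPolicy:
    """Configurable score bands for one ambiguous signal (e.g., a toxicity score in [0, 1])."""
    signal: str
    review_at: float  # scores at or above this go to a human reviewer
    block_at: float   # scores at or above this are blocked outright

    def __post_init__(self):
        if not 0.0 <= self.review_at <= self.block_at <= 1.0:
            raise ValueError("expected 0 <= review_at <= block_at <= 1")

    def decide(self, score: float) -> str:
        if score >= self.block_at:
            return "block"
        if score >= self.review_at:
            return "review"
        return "allow"

@dataclass
class ReviewItem:
    output_id: str
    score: float
    labels: list[str] = field(default_factory=list)  # reviewer-assigned labels
    notes: list[str] = field(default_factory=list)   # shared notes for collaboration

policy = ThresholdPolicy("toxicity", review_at=0.4, block_at=0.8)
review_queue = []
for output_id, score in [("a1", 0.1), ("a2", 0.55), ("a3", 0.92)]:
    decision = policy.decide(score)
    if decision == "review":
        review_queue.append(ReviewItem(output_id, score))
    print(output_id, decision)

# A reviewer labels the borderline output and leaves a note for colleagues.
review_queue[0].labels.append("borderline sarcasm")
review_queue[0].notes.append("Tone is sharp but not abusive; consider raising review_at.")
```

The middle "review" band is where most of the judgment lives, so ask vendors how easily users can tune it and how reviewer feedback flows back into the thresholds.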

2. Productivity and Ease of Use

Any tool should make governance teams more productive. Ask vendors about the level of effort, human oversight, skills, and training they require.

What level of effort is required to implement and maintain this product?

Rather than relying on a checklist of “easy buttons” (e.g., automated tasks, graphical interfaces, AI detection, copilots, or assistants), have your data and AI teams estimate the time required to manage key tasks compared with other products or homegrown tools. This will enable you to measure the real productivity benefits.
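The comparison itself is simple arithmetic: runs per month multiplied by hours per run, summed across tasks, for the candidate and for your current tooling. The task names and hour figures below are hypothetical; substitute the estimates your own teams produce.

```python
from typing import NamedTuple

class TaskEstimate(NamedTuple):
    runs_per_month: int
    candidate_hours: float  # hours per run with the candidate product
    baseline_hours: float   # hours per run with current or homegrown tools

# Hypothetical estimates; replace with figures from your own teams.
ESTIMATES = {
    "register a new model": TaskEstimate(6, 0.5, 2.0),
    "update an agent policy": TaskEstimate(10, 0.25, 1.0),
    "compile an audit report": TaskEstimate(1, 4.0, 12.0),
}

def monthly_hours(estimates, attr: str) -> float:
    """Total monthly hours for one tooling option ('candidate_hours' or 'baseline_hours')."""
    return sum(e.runs_per_month * getattr(e, attr) for e in estimates.values())

candidate = monthly_hours(ESTIMATES, "candidate_hours")
baseline = monthly_hours(ESTIMATES, "baseline_hours")
print(f"candidate {candidate:.1f} h, baseline {baseline:.1f} h, "
      f"saved {baseline - candidate:.1f} h/month")
```

With these placeholder numbers the candidate needs 9.5 hours a month versus 34 for the baseline. Running the same calculation for each shortlisted product gives a like-for-like comparison.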

What level of human oversight is required to identify, assess, and remediate governance issues?

Your time estimates should also include the time that data/ML engineers, data scientists, or data stewards spend spotting and fixing issues. When ML models produce nonsensical predictions or agents misbehave, your product should minimize the manual effort required to keep production activities safe and compliant. Have your team devise threat scenarios and test candidate products against them.
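Threat scenarios are easiest to reuse across vendors when each one records what the agent tried to do and what outcome your team requires. The sketch below is a minimal harness under those assumptions. The scenario names and event fields are hypothetical, and `toy_guard` is a deliberately naive stand-in for a call to a candidate product, included only to show how the harness surfaces a miss.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class ThreatScenario:
    name: str
    agent_event: dict  # what the model or agent tried to do
    expected: str      # outcome your team requires: "allow" or "block"

SCENARIOS = [
    ThreatScenario("prompt injection asks agent to email customer list",
                   {"tool": "send_email", "attachment": "customers.csv"}, "block"),
    ThreatScenario("agent deletes production table",
                   {"tool": "run_sql", "sql": "DROP TABLE orders"}, "block"),
    ThreatScenario("routine order lookup",
                   {"tool": "run_sql", "sql": "SELECT status FROM orders WHERE id = 42"}, "allow"),
]

def run_scenarios(guard: Callable[[dict], str], scenarios) -> list[str]:
    """Return the names of scenarios where the guard's decision differs from the expected one."""
    return [s.name for s in scenarios if guard(s.agent_event) != s.expected]

# Hypothetical stand-in for a candidate product's decision; it only inspects SQL.
def toy_guard(event: dict) -> str:
    sql = event.get("sql", "").upper()
    if sql.startswith(("DROP", "DELETE", "TRUNCATE")):
        return "block"
    return "allow"

print(run_scenarios(toy_guard, SCENARIOS))
```

Here the harness reports that the naive guard lets the data-exfiltration email through. Testing for false positives (the routine lookup) matters as much as catching the attacks.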

What skills and training does it require?

The less technical expertise a product requires, the better. Assess what level of expertise each product requires and how that compares with your target users’ knowledge and skillset. Then see how the vendor can close gaps with training.

3. Ecosystem Support

AI projects require flexible but governed access to a rich ecosystem of commercial and open-source elements. Your team must assess candidate products’ interoperability requirements and their support for major industry protocols.

How does the product interoperate with data platforms, pipeline tools, AI/ML tools, and libraries?

Probe vendors about the technical requirements for maintaining data, model, and agent portability across these elements. They should provide ready-made application programming interfaces (APIs) and simple import/export functions rather than instructions for writing custom scripts.

Does the product support the Model Context Protocol (MCP) or the Agent2Agent (A2A) protocol?

Two new open-source protocols have become the lingua franca that agentic AI elements use to communicate and collaborate. MCP from Anthropic standardizes how AI agents access data and tools across platforms. A2A from Google, meanwhile, standardizes how agents interact with one another. Ensure your governance vendor supports protocols such as these and secures the interfaces that use them.
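Securing these interfaces often comes down to inspecting messages before they reach a tool. MCP messages use JSON-RPC 2.0, and tool invocations use the `tools/call` method with the tool's `name` and `arguments` in `params`. The minimal sketch below gates those calls against a per-agent allowlist. The agent ID, tool names, and allowlist are hypothetical, and the sketch deliberately ignores authentication, transport, and A2A traffic.

```python
import json

# Hypothetical allowlist: which MCP tools each agent may call.
TOOL_ALLOWLIST = {
    "support-agent": {"search_tickets", "get_order_status"},
}

def check_mcp_request(agent_id: str, raw_message: str) -> tuple[bool, str]:
    """Inspect a JSON-RPC 2.0 message; allow 'tools/call' only for allowlisted tools."""
    msg = json.loads(raw_message)
    if msg.get("jsonrpc") != "2.0":
        return False, "not a JSON-RPC 2.0 message"
    if msg.get("method") != "tools/call":
        return True, "not a tool call; passed through"
    tool = msg.get("params", {}).get("name")
    if tool not in TOOL_ALLOWLIST.get(agent_id, set()):
        return False, f"tool {tool!r} not allowed for {agent_id}"
    return True, f"tool {tool!r} allowed"

request = json.dumps({
    "jsonrpc": "2.0", "id": 7, "method": "tools/call",
    "params": {"name": "delete_customer", "arguments": {"customer_id": "C-19"}},
})
print(check_mcp_request("support-agent", request))
```

A production gate would also inspect `arguments`, log every decision for auditing, and decide whether unknown methods should pass through or be denied by default; those are good questions to put to vendors.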


4. Performance and Scalability

Most agentic AI use cases require real-time interactions with users. Ask vendors about the ability of their governance products to enforce policies, rules, and standards without breaking service-level agreements (SLAs). For example:

Can the product support your latency, throughput, and user concurrency requirements?

Once in production, AI models and agents must meet rigorous user expectations. Forecast your traffic levels and budget for growth in case adoption takes off. Then test the ability of candidate products to monitor, identify, and respond to governance risks inline while maintaining these traffic levels.
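For the latency part of that test, a simple approach is to time each inline governance check and compare a high percentile against the slice of your SLA that governance is allowed to consume. The 150 ms budget and `toy_check` below are hypothetical; in a real test, `toy_check` would be replaced by a call to the candidate product. Throughput and concurrency need a proper load-testing tool and are not covered here.

```python
import math
import time

def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def measure_latency_ms(check, events):
    """Time each inline governance check and return per-call latencies in milliseconds."""
    latencies = []
    for event in events:
        start = time.perf_counter()
        check(event)
        latencies.append((time.perf_counter() - start) * 1000)
    return latencies

# Hypothetical share of the end-to-end SLA that governance checks may consume.
GOVERNANCE_BUDGET_MS = 150

def toy_check(event):
    # Stand-in for a call to the candidate product's inline policy check.
    return "block" if "DROP" in event else "allow"

latencies = measure_latency_ms(toy_check, ["SELECT 1", "DROP TABLE x"] * 500)
p95 = percentile(latencies, 95)
print(f"p95 = {p95:.3f} ms, within budget: {p95 <= GOVERNANCE_BUDGET_MS}")
```

Tail percentiles (p95, p99) are a better yardstick than averages here, because an SLA breach is usually felt by the slowest requests.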

Can it scale on demand?

Assess the ability of candidate products to auto-scale and consume elastic cloud resources, compute in particular, to stay on top of production flows. Your team cannot afford to lose control of governance when workloads spike.
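The capacity arithmetic behind auto-scaling can be sketched in a few lines: divide peak requests per second (plus headroom) by what one replica of the governance service can handle, and round up. This is similar in spirit to the ratio-based calculation the Kubernetes Horizontal Pod Autoscaler performs. The traffic figures, 30% headroom, and two-replica floor below are hypothetical.

```python
import math

def replicas_needed(peak_rps: float, rps_per_replica: float,
                    headroom: float = 0.3, min_replicas: int = 2) -> int:
    """Replicas of the governance service needed to absorb peak traffic plus headroom."""
    if rps_per_replica <= 0:
        raise ValueError("rps_per_replica must be positive")
    target = peak_rps * (1 + headroom)
    return max(min_replicas, math.ceil(target / rps_per_replica))

# Hypothetical traffic: a typical day versus a 3x launch-week spike,
# with each replica able to check about 250 requests per second.
for label, rps in [("typical day", 400), ("launch-week spike", 1200)]:
    print(label, replicas_needed(rps, rps_per_replica=250))
```

Ask vendors how quickly their product reaches the higher replica count during a spike, since governance checks that lag behind traffic are exactly the loss of control this criterion is meant to prevent.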

Getting Started

Organizations must take a pragmatic, risk-aware approach to agentic AI. This means selecting AI governance tools that balance innovation with safeguards, such as enforcing policies, rules, and standards across data, models, and agents. Data and AI teams should now shortlist vendors, devise test scenarios, and evaluate how well each tool supports their specific governance needs and risk profile. The evaluation criteria outlined here (governance capabilities, ease of use, ecosystem support, and performance and scalability) can provide structure for those conversations.