And just like that, we’re on to part 3 of this Alteryx to Dataiku series! Part 2 was all about Dataiku’s modern take on data: where it’s similar to Alteryx and where things diverge. Today, I’ll shift gears from the data itself to what you can do with it inside Dataiku. I think of it as moving from raw ingredients to finished meals. Appropriately, in Dataiku, we get to those end results through repeatable steps called recipes.

At a high level, Dataiku’s approach to recipes enables:

- Intelligent, efficient push-down compute

- Rapid and flexible iteration

- The ability to find and reuse logic

What It's Like in Alteryx:

Alteryx’s approach is all about a wide-ranging palette of tools to meet data and analytic needs. With dozens of categories and hundreds of tools, there’s a piece of logic for a huge number of scenarios. As you build out a workflow and configure each step, you typically run it many times to iterate and verify results. Caching is available so you don’t have to rerun the entire chain of tools every time.

If you’re starting a new process and want to reuse some logic, you might open a teammate’s workflow from Alteryx Server or another shared location, then copy and paste tools from it into your own workflow.

How Dataiku Does It Differently:

Fortunately, from an end-user perspective, Dataiku’s approach is very similar to Alteryx’s. There are different recipes for different tasks, from summarizing to cleansing to transforming. But as a modern, cloud-first platform, Dataiku has some key differences that can really speed up development. We’ll start by diving into how recipes get their work done.

Where the Work Gets Done: Intelligent Push Down

Part of Dataiku’s secret sauce is that it’s designed to be an orchestrator. At its core, Dataiku acts as an easy-to-use layer that intelligently does your data work where it makes the most sense. It does this by letting each recipe in a flow execute with its own engine.

When it makes sense to leverage the speed of a modern database, Dataiku can translate recipes into SQL under the hood. If that’s not an option, a scalable cluster can churn through the work. As the user, you only choose the business logic you need; Dataiku takes care of the rest. And if you want to change a recipe’s engine, you can do so without changing how you’ve set up the recipe.
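To make the push-down idea concrete, here is a minimal sketch in Python that uses the standard library's `sqlite3` module as a stand-in database. This is not how Dataiku generates SQL; the `recipe` settings dictionary and both engine functions are hypothetical. It only illustrates the general pattern: the same business logic can either pull rows out and aggregate locally, or be translated into SQL so the database does the work.

```python
import sqlite3

# Stand-in "database": an in-memory SQLite table with made-up data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("east", 10.0), ("east", 15.0), ("west", 7.5), ("west", 2.5), ("north", 4.0)],
)

# A hypothetical visual "group by" recipe, described as settings rather than code.
recipe = {"table": "orders", "key": "region", "agg": "SUM", "column": "amount"}

def run_in_memory(conn, recipe):
    """Engine 1: pull every row out of the database and sum locally (SUM only)."""
    totals = {}
    query = f"SELECT {recipe['key']}, {recipe['column']} FROM {recipe['table']}"
    for key, value in conn.execute(query):
        totals[key] = totals.get(key, 0.0) + value
    return totals

def run_in_database(conn, recipe):
    """Engine 2: translate the same settings into SQL so the database does the work."""
    query = (
        f"SELECT {recipe['key']}, {recipe['agg']}({recipe['column']}) "
        f"FROM {recipe['table']} GROUP BY {recipe['key']}"
    )
    return dict(conn.execute(query).fetchall())

# Same recipe settings, two engines, identical result.
assert run_in_memory(conn, recipe) == run_in_database(conn, recipe)
```

Because the recipe is stored as settings, swapping engines never touches the business logic. Building SQL with f-strings is only acceptable here because the settings are trusted; it would be unsafe with user input.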

Engine intelligently selected for each recipe

So no matter your process, you can ensure the right work happens at each step. For example, I might use a Join recipe to bring together several external vendor datasets from cloud storage, using cluster compute that scales with the data. With a click, I could store the results in my Snowflake environment. From there, I could immediately create a dozen visual cleansing steps inside a Prepare recipe that all execute with Dataiku’s in-database engine, without moving any data! The best part is that Dataiku would automatically choose those best-practice engines for me.

Chain together different engines across your flow

Another key difference: Dataiku doesn’t have hundreds of recipes. Instead, it combines many different types of logic into a smaller set of recipes. For example, Dataiku’s Join recipe combines the functionality of the Alteryx Join, Join Multiple, and Filter tools into one step. But the real difference comes with Dataiku’s Prepare recipe. With nearly 100 row-level processors, it can tackle everything from data cleansing to formulas to regular expressions. Which leads us to our next topic: what’s it like to actually build with Dataiku recipes?
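As a rough illustration of folding several tools into one step, the sketch below expresses a three-way join plus a filter as a single SQL statement, again using `sqlite3` with made-up tables. It is an analogy for what a combined Join recipe accomplishes, not the query Dataiku actually generates.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical tables and rows, for illustration only.
conn.executescript("""
CREATE TABLE customers (id INTEGER, name TEXT);
CREATE TABLE orders (id INTEGER, customer_id INTEGER, product_id INTEGER, amount REAL);
CREATE TABLE products (id INTEGER, category TEXT);
INSERT INTO customers VALUES (1, 'Acme'), (2, 'Globex');
INSERT INTO orders VALUES (10, 1, 100, 50.0), (11, 2, 101, 20.0), (12, 1, 101, 5.0);
INSERT INTO products VALUES (100, 'hardware'), (101, 'software');
""")

# One statement: joining three tables (like Join Multiple) plus a filter (like Filter).
JOIN_AND_FILTER = """
SELECT c.name, o.id, p.category, o.amount
FROM orders AS o
JOIN customers AS c ON c.id = o.customer_id
JOIN products AS p ON p.id = o.product_id
WHERE o.amount >= ?
ORDER BY o.id
"""

rows = conn.execute(JOIN_AND_FILTER, (10.0,)).fetchall()
print(rows)  # [('Acme', 10, 'hardware', 50.0), ('Globex', 11, 'software', 20.0)]
```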

Working with Recipes: Run What You Want, When You Want

Hopefully, you’re starting to see the benefits of Dataiku as an orchestrator. Now, let’s look at how recipes enable a more iterative and flexible way to build. In particular, I want to highlight the star of the show among Dataiku’s visual recipes: the Prepare recipe.

The Prepare recipe is Dataiku’s answer to any sort of row-level data work. But it’s not just the breadth of what it can do; it’s how it does it. When you start working in a Prepare recipe, the first thing you’ll see is a sample of your data, much like a Dataset’s explore pane from part 2 of this series. The magic is that as you click on different columns, Dataiku recommends relevant transformations, from date parsing to filtering out bad data based on patterns.

Once you make a selection, a step appears on the left side of your screen and the change is applied live! This gives you the best of both worlds: immediate feedback as you work and an automatic breadcrumb trail of every step you’ve taken. And, of course, if the built-in recommendations aren’t what you need, you can search a list of almost 100 processors. You can even describe what you want in plain text and let GenAI create the logic for you.
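The breadcrumb-trail idea can be sketched as an ordered list of steps that gets replayed over the data. In this minimal Python example, the step functions, column names, and date format are all hypothetical; they stand in for Prepare recipe processors rather than reproducing Dataiku's processor API.

```python
from datetime import datetime

# Hypothetical "processors": each takes a list of row dicts and returns a new list.
def parse_dates(rows):
    """Parse 'MM/DD/YYYY' strings in order_date into date objects."""
    return [
        {**r, "order_date": datetime.strptime(r["order_date"], "%m/%d/%Y").date()}
        for r in rows
    ]

def drop_invalid_emails(rows):
    """Filter out rows whose email has no '@' (a crude pattern check)."""
    return [r for r in rows if "@" in r["email"]]

def add_total(rows):
    """Formula step: total = qty * price."""
    return [{**r, "total": r["qty"] * r["price"]} for r in rows]

# The ordered, labeled list of steps is the breadcrumb trail.
steps = [
    ("Parse order_date", parse_dates),
    ("Remove rows with invalid email", drop_invalid_emails),
    ("Compute total", add_total),
]

def run_steps(rows, steps):
    """Replay every step in order and return the transformed rows."""
    for _label, step in steps:
        rows = step(rows)
    return rows

sample = [
    {"order_date": "03/15/2024", "email": "a@example.com", "qty": 2, "price": 4.5},
    {"order_date": "03/16/2024", "email": "not-an-email", "qty": 1, "price": 9.0},
]

result = run_steps(sample, steps)
print(result)
```

Because the steps are stored as data, the same list can be previewed on a sample and later rerun on the full dataset, which is what lets live feedback and a replayable history coexist.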

Parsing dates inside a Prepare recipe with the help of GenAI

Now, not every recipe in Dataiku works quite like the Prepare recipe, but iterative experimentation is still the foundation. That’s because you can always run as little or as much of a flow as you’d like, without having to remember to cache. If I’m adding to the end of a process, I can run just the new final recipe to verify my results. If I realize I need to add some logic upstream, I can quickly insert a new step and run everything downstream only once the changes look good!
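Running only what changed is essentially a graph problem: find everything downstream of the edited recipe, then run those recipes in dependency order. The sketch below uses Python's `graphlib.TopologicalSorter` (Python 3.9+) on a hypothetical flow; the recipe names are invented, and this is not Dataiku's actual build logic.

```python
from collections import deque
from graphlib import TopologicalSorter

# Hypothetical flow: each recipe maps to the set of recipes it depends on.
flow = {
    "join_vendors": set(),
    "prepare_clean": {"join_vendors"},
    "group_by_region": {"prepare_clean"},
    "window_sales": {"prepare_clean"},
    "final_report": {"group_by_region", "window_sales"},
}

def downstream_of(flow, changed):
    """Return `changed` plus every recipe that depends on it, directly or transitively."""
    children = {name: set() for name in flow}
    for name, deps in flow.items():
        for dep in deps:
            children[dep].add(name)
    seen, queue = {changed}, deque([changed])
    while queue:
        for child in children[queue.popleft()]:
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen

def rebuild_plan(flow, changed):
    """Order the affected recipes so each one runs after its upstream inputs."""
    affected = downstream_of(flow, changed)
    order = TopologicalSorter(flow).static_order()
    return [name for name in order if name in affected]

print(rebuild_plan(flow, "group_by_region"))  # ['group_by_region', 'final_report']
```

Upstream recipes such as `join_vendors` are skipped entirely, which is the same payoff as rebuilding only the changed part of a flow.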

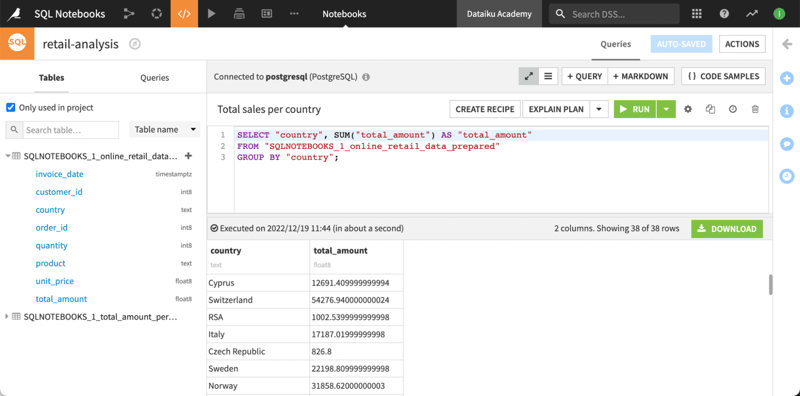

Dataiku’s visual recipes can satisfy a huge number of use cases. But if I want to write a little Python or use a trusted SQL query, Dataiku also provides a first-class experience for coders through code recipes and notebooks. For rapid testing, notebooks are the way to go, and once I have something I like, I’m just a click away from saving it to my flow. I can install any packages I need into code environments that can be reused across my work and shared with my project’s collaborators. What code I write is entirely up to me, from simple SELECT statements to stored procedure calls to custom API requests.

SQL notebooks built right in

Don't Start From Scratch: Search, Understand, Reuse

Intelligent engines and iterative analysis both make Dataiku recipes a fast way to get data work done. But real scale comes from reuse, and the first step is finding those quality ingredients. Dataiku doesn’t just make it easy to find the right data; every recipe is also automatically searchable through the catalog. With just a few clicks, I can look specifically for a Window recipe that already contains the logic for 30-day rolling sales averages. Or I could look for a trusted SQL query tagged as pulling the exact slice of data needed for financial reconciliations. Once I find something useful, I can head to the recipe’s project to learn how it should be used, who originally built it, and when it was last updated.
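For the 30-day sales average example, a Window recipe's logic roughly corresponds to a SQL window function. This sketch uses `sqlite3` (window functions need SQLite 3.25 or newer) with invented daily data, and it assumes exactly one row per day with no gaps, so a 30-row frame equals 30 calendar days. The table and function names are hypothetical.

```python
import sqlite3
from datetime import date, timedelta

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE daily_sales (day TEXT, sales REAL)")
start = date(2024, 1, 1)
# Made-up data: one row per day for 35 days, with sales equal to the day number (1..35).
conn.executemany(
    "INSERT INTO daily_sales VALUES (?, ?)",
    [((start + timedelta(days=i)).isoformat(), float(i + 1)) for i in range(35)],
)

def rolling_average(conn, window_days=30):
    """Average of the current row and the previous window_days - 1 rows (window_days >= 1)."""
    n = int(window_days)  # frame offsets must be literal integers, so validate before formatting
    sql = f"""
        SELECT day, sales,
               AVG(sales) OVER (
                   ORDER BY day ROWS BETWEEN {n - 1} PRECEDING AND CURRENT ROW
               ) AS rolling_avg
        FROM daily_sales
        ORDER BY day
    """
    return conn.execute(sql).fetchall()

rows = rolling_average(conn)
print(rows[-1])  # ('2024-02-04', 35.0, 20.5)
```

ISO-formatted date strings sort chronologically, so `ORDER BY day` works on the text column. If days can be missing, a date-based frame or a calendar join would be needed instead of a row count.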

Finding the right recipe to reuse in the catalog

As you’d hope, getting a recipe into another project is as simple as selecting, copying, and pasting. I can even duplicate entire template projects to grab data, logic, and automations all at once. With this approach to collaborative reuse, the more a team works in Dataiku, the more tools it has in its toolbox.