Recommendation engines give your favorite websites the ability to predict what information you would most like to see. While this is an incredible tool for driving traffic to websites (potentially increasing revenues by billions), it presents a huge challenge when it comes to the dreaded problem of fake news.

Many do not realize that Google search results for identical terms can vary significantly based on the person doing the search and that Facebook news feeds are not at all chronological. In theory, this is how these sites are able to give you the results that you are looking for as quickly as possible. However, an unintended consequence of this has surfaced in the form of filter bubbles.

Filter Bubbles

Generally speaking, filter bubbles form when the algorithms that curate content for users show them only the news stories and social media posts that match their existing beliefs, isolating them from differing viewpoints and perspectives. For example, Facebook might place news articles that are aligned with your political beliefs above those that might contradict them.

As Eli Pariser claimed in his famous TED Talk (in which the term “filter bubble” was first defined), algorithms have become the “gatekeepers” for information online in the same way that editors curate what gets into magazines and newspapers. The trouble is that these algorithms do what they do without the ability to consider the implications of the choices they make.

The term "filter bubble" refers to the isolated community of ideas these algorithms are unintentionally creating.

Fake News

In the context of fake news, filter bubbles have a particularly damaging reach because fake news stories often contain exaggerated, falsified claims that algorithms looking for specific keywords or tags are likely to surface (a weakness that many creators of fake news have learned to exploit). Additionally, fringe or extreme viewpoints might generate more likes and comments, giving them a further algorithmic boost.

Algorithms can also be manipulated with fake views and automated comments. The algorithms that Facebook or Google use to recommend information are fairly agnostic when it comes to quality or veracity. This creates the perfect environment for viral, inflammatory content (that is often not truthful) to shoot to the top of news feeds and search results.
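To make the "agnostic to quality or veracity" point concrete, here is a minimal sketch of a feed ranked purely on engagement. It is not how Facebook or Google actually rank content; the `Post` fields, the weights in `engagement_score`, and the sample posts are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    likes: int
    comments: int
    shares: int
    is_accurate: bool  # hypothetical label; the ranker below never reads it


def engagement_score(post: Post) -> float:
    # Hypothetical weights: comments and shares count more than likes.
    return 1.0 * post.likes + 2.0 * post.comments + 3.0 * post.shares


def rank_feed(posts: list[Post]) -> list[Post]:
    # Sort purely by engagement, highest first. Accuracy plays no role.
    return sorted(posts, key=engagement_score, reverse=True)


posts = [
    Post("City council approves budget", likes=120, comments=15, shares=10, is_accurate=True),
    Post("Shocking claim about a candidate", likes=900, comments=400, shares=650, is_accurate=False),
    Post("Local library extends hours", likes=60, comments=5, shares=2, is_accurate=True),
]

feed = rank_feed(posts)
for post in feed:
    print(f"{engagement_score(post):8.1f}  accurate={post.is_accurate}  {post.title}")
```

Because the score is built only from likes, comments, and shares, inflating any of those numbers with fake views or automated comments moves a post up the feed in exactly the same way that genuine interest would.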

As has been seen in the last few years, these kinds of curated, false stories can have huge implications for the world. Filter bubbles (across the political spectrum) might have influenced the 2016 United States election, the Brexit vote in the UK, and a host of other political and social events over the last year. The echo chamber created by filter bubbles clouds the ability of users to hear the opinions of the other side and pushes them toward more and more extreme views.

Building a Better Recommendation Engine

As always, algorithms themselves are not really to blame for the problem of filter bubbles, fake news, and (more broadly) poorly performing recommendation engines of all kinds — it’s the human beings building these systems that carry that crown.

Companies like Google and Facebook are working hard to ensure that their algorithms give results that are more balanced and more truthful. But any company building a recommendation engine — no matter how big or small — can make it better by:

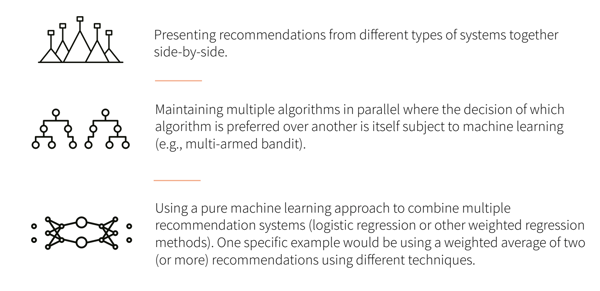

- Experimenting with different types of recommendation engines to determine which is the best for a particular use case, potentially further refining it using more sophisticated methods like:

- Using more (and preferably more diverse) sources of data for building recommendations.

- Continually monitoring and refining recommendation engines in production (not simply designing them once, setting them, and forgetting them).

- Using a data science tool that can clearly map data sources and how they’re being transformed/used in a particular model to not only increase transparency around recommendations, but also to identify potential problems and introduction of bias in a collaborative group setting where others can weigh in.
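Two of the suggestions above, drawing on more diverse sources and monitoring in production, can be sketched in a few lines. The following is an illustrative sketch under stated assumptions, not a production method: `rerank_with_diversity` is a hypothetical greedy re-ranker that penalizes repeated sources, `source_entropy` is one possible diversity metric to track over time, and the candidate tuples, the `penalty` value, and the outlet names are invented for the example.

```python
import math
from collections import Counter

# Each candidate is (item_id, source, relevance); all values are made up.
candidates = [
    ("a1", "outlet_a", 0.95),
    ("a2", "outlet_a", 0.90),
    ("a3", "outlet_a", 0.85),
    ("b1", "outlet_b", 0.70),
    ("c1", "outlet_c", 0.65),
]


def rerank_with_diversity(items, k, penalty=0.3):
    """Greedily pick k items, discounting sources that were already picked."""
    remaining = list(items)
    picked = []
    seen = Counter()
    while remaining and len(picked) < k:
        # Adjusted score = relevance minus a penalty per earlier pick from the same source.
        best = max(remaining, key=lambda c: c[2] - penalty * seen[c[1]])
        picked.append(best)
        seen[best[1]] += 1
        remaining.remove(best)
    return picked


def source_entropy(items):
    """Shannon entropy (in bits) of the source mix; higher means more diverse."""
    counts = Counter(source for _, source, _ in items)
    total = sum(counts.values())
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Baseline: top 3 by relevance alone. Diversified: same pool, re-ranked.
baseline = sorted(candidates, key=lambda c: c[2], reverse=True)[:3]
diversified = rerank_with_diversity(candidates, k=3)

print("baseline   :", [c[0] for c in baseline], source_entropy(baseline))
print("diversified:", [c[0] for c in diversified], source_entropy(diversified))
```

A metric like `source_entropy` could be logged for each batch of recommendations served, so that a steady drop signals a narrowing feed worth investigating. The `penalty` value trades relevance against variety and would need tuning for a real use case.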

As with anything, the problem of filter bubbles comes down to the proper use of available technology. Recommendation engines can be powerful tools that can make the lives of customers (and employees) easier.