This is a guest article from Kevin Petrie, VP of Research at BARC, a global research and consulting firm focused on data and analytics.

We all see the headlines about AI failures. They range from amusing mishaps (fake book reviews and the like) to disasters such as lost enterprise databases and fatal turns by self-driving cars.

Behind each headline lies a failure of governance. As companies race to deploy AI, they exacerbate data governance risks related to accuracy, privacy, bias, intellectual property, and regulatory compliance. AI models and agents add new risks as well: black-box logic, toxic outputs, misguided decisions, damaging actions, and even subversive intentions. Organizations that manage these risks poorly often experience a blowup.

To avoid this, organizations need to extend their traditional governance programs to address AI models and agents. This blog, the second in a three-part series, recommends policies, rules, and standards to achieve this. It builds on the first blog in our series, which defined key risks and technical controls to mitigate them. Our third and final blog will describe criteria for data/AI teams to evaluate AI governance tools and platforms.

What Is Agentic AI?

Let’s start by defining agentic AI and agents. Agentic AI is a sophisticated form of AI that makes decisions and takes action autonomously to achieve specific goals and outcomes in dynamic environments. Agents are AI-enabled applications designed to execute tasks, from completing simple transactions to navigating complex decision trees, in order to take smart actions and produce valuable outputs.

An agent’s “perception” of its environment and surrounding context allows it to work in a human-like fashion to solve problems and execute tasks while operating autonomously at scale and with limited oversight. AI agents can learn and iterate over time. They represent the next step in insight-driven actions beyond simple rule-based, policy-driven robotic process automation (RPA).

Governed well, agentic AI creates a massive innovation opportunity. Governed poorly, it can damage the business.

Enter the Governance Program

A governance program organizes policies, rules, and standards to guide business processes while controlling risk. The policies define a program’s overarching objectives and principles, the rules define how to enforce them, and the standards provide technical structure. Governance programs must organize these elements comprehensively while holding stakeholders accountable and adapting flexibly to new or changed requirements.

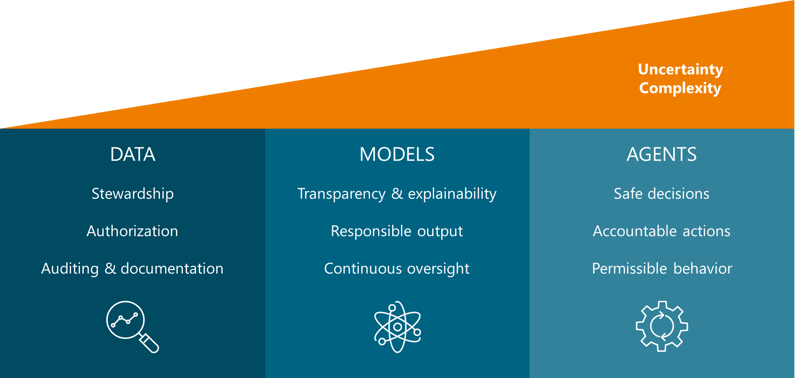

To support new AI initiatives, modern governance programs must address both the traditional domain of data and the new domains of AI models and agents. Those new domains increase the uncertainty and complexity of governance. Does that GenAI model treat different ethnicities fairly? How will its responses influence different agents across departments, companies, and customer groups? And so on. Let’s explore the policies that address all this, then drill into rules and standards for models and agents.

Governance Policies

Data

Data can misrepresent the truth, breach privacy, exhibit bias, compromise intellectual property, and hinder regulatory compliance. Governance policies must mitigate these risks by mandating data stewardship, ensuring authorization of data access and usage, and auditing and documenting data-related activities.

Stewardship. Governance programs start with data stewardship. This policy assigns human stakeholders, ranging from dedicated data stewards to data engineers or business managers, to oversee the data lifecycle. They enforce rules to inspect, approve, and curate datasets for AI usage, then remediate issues with accuracy or bias. Standards help with the technical detail, for example by defining file formats and remediation processes.

Authorization. Governance programs also need an authorization policy to control data preparation, access, and consumption. This policy states the types of users, applications, and datasets that can interact with one another. The rules go further to delineate the acceptable individuals, application tasks, and data objects, and of course the models and agents that layer on top. Standards, meanwhile, might require certain enabling APIs and tools.
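
Authorization rules like these can be expressed as explicit grants that are checked at request time. The following is a minimal Python sketch, assuming a deny-by-default model; the principal names, dataset names, and the `Grant` and `is_authorized` helpers are hypothetical and not part of any particular governance product.

```python
from dataclasses import dataclass
from typing import FrozenSet


# Hypothetical rule: one principal (person, application, model, or agent)
# may perform a set of actions on one dataset.
@dataclass(frozen=True)
class Grant:
    principal: str
    dataset: str
    actions: FrozenSet[str]


RULES = [
    Grant("analyst:jdoe", "sales_orders", frozenset({"read"})),
    Grant("app:churn_pipeline", "customer_profiles", frozenset({"read", "prepare"})),
    Grant("agent:support_bot", "kb_articles", frozenset({"read"})),
]


def is_authorized(principal: str, dataset: str, action: str) -> bool:
    """Deny by default; allow only when an explicit grant covers the request."""
    return any(
        g.principal == principal and g.dataset == dataset and action in g.actions
        for g in RULES
    )
```

Because access is denied unless a grant exists, adding a new model or agent grants it nothing until someone writes a rule for it.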

Auditing and documentation. Data governance is incomplete without auditing and documentation to demonstrate compliance with policies, regulations, and laws. Governance teams must describe in sufficient detail how they meet the spirit and letter of the General Data Protection Regulation (GDPR) in Europe, the Health Insurance Portability and Accountability Act (HIPAA) in the U.S., and the many other laws that safeguard the handling of sensitive data.

Models

Models can have opaque, confusing logic or generate toxic outputs that treat humans unfairly. Reducing these risks requires policies for transparency and explainability, responsible outputs, and continuous oversight of production activities.

Transparency and explainability. As a policy, stakeholders must understand and trust model inputs, logic, and outputs. The corresponding rule might require detailed documentation that explains how inputs affect outputs. Standards can support this rule by codifying the use of techniques such as SHAP or LIME to quantify how much each feature contributes to the model’s output for individual data samples. Standards also might specify that model documentation and registries include model versions, audit logs, and lineage.
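
To illustrate the kind of number SHAP reports, here is a minimal sketch for the special case of a linear model with independent features, where each feature’s SHAP value reduces to its weight times the feature’s deviation from its mean. The bias, weights, and means are made-up values; for nonlinear models, teams would typically use a library such as `shap` rather than this closed form.

```python
from typing import List

# Hypothetical linear model: f(x) = BIAS + sum(w_i * x_i).
BIAS = 0.5
WEIGHTS = [2.0, -1.0, 0.5]
FEATURE_MEANS = [1.0, 3.0, 4.0]  # assumed feature averages over the training data


def predict(x: List[float]) -> float:
    return BIAS + sum(w * xi for w, xi in zip(WEIGHTS, x))


def linear_shap(x: List[float]) -> List[float]:
    """SHAP value of each feature for a linear model with independent features."""
    return [w * (xi - m) for w, xi, m in zip(WEIGHTS, x, FEATURE_MEANS)]
```

For the input `[2.0, 1.0, 4.0]`, `linear_shap` returns `[2.0, 2.0, 0.0]`, and those contributions sum to `predict(x) - predict(FEATURE_MEANS)` (5.5 minus 1.5). That additivity is what lets documentation state how much each input moved a specific output.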

Responsible outputs. As a policy, companies must prevent toxic, biased, or inappropriate model outputs that harm their reputation and customer relationships. This requires rules stating, for example, that all GenAI workflows include evaluator models, filters, and kill switches to block impermissible outputs. Various standards can help here. They might define the keywords and ML sentiment indicators that cross red lines for a given GenAI response, along with the toxicity thresholds or confidence scores that trigger the full removal of a production model.
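
A rule like this might be enforced by a guard that sits between the model and the user. The sketch below assumes that a separate evaluator model has already produced a toxicity score between 0 and 1; the blocked terms, both thresholds, and the `OutputGuard` class are hypothetical placeholders for values a governance team would define in its standards.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical standard values; a governance team would set the real ones.
BLOCKED_TERMS = {"blocked_term_a", "blocked_term_b"}
RESPONSE_TOXICITY_LIMIT = 0.7  # block any single response scoring above this
KILL_SWITCH_BLOCKS = 3         # pull the model after this many blocked responses


@dataclass
class OutputGuard:
    blocked_count: int = 0
    model_enabled: bool = True

    def review(self, text: str, toxicity: float) -> Optional[str]:
        """Return the response if permissible, otherwise None.

        `toxicity` is assumed to come from a separate evaluator model (0.0 to 1.0).
        """
        if not self.model_enabled:
            return None  # kill switch already tripped; nothing reaches users
        words = set(text.lower().split())  # naive whitespace tokenization
        if words & BLOCKED_TERMS or toxicity > RESPONSE_TOXICITY_LIMIT:
            self.blocked_count += 1
            if self.blocked_count >= KILL_SWITCH_BLOCKS:
                self.model_enabled = False  # kill switch: remove model from service
            return None
        return text
```

Separating the per-response filter from the kill switch mirrors the distinction above between blocking one response and removing a production model entirely.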

Continuous oversight. The policy of continuous oversight ensures that data and AI teams maintain vigilance by monitoring, flagging, and inspecting model activities once in production. They can enforce this policy with rules for scheduled audits, for example to explain what inputs or logic contributed to toxic outputs. And standards define the frequency, triggers, and formats of such audits as well as corrective actions for specific stakeholders.
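
Audit frequency and triggers might be encoded as a small check that runs against each production model. This sketch assumes a hypothetical 30-day schedule and a hypothetical trigger of five flagged outputs; it only reports why an audit is due and leaves the audit itself to the assigned stakeholders.

```python
from datetime import date
from typing import List

# Hypothetical audit standard for one production model.
FREQUENCY_DAYS = 30          # scheduled audit at least every 30 days
FLAGGED_OUTPUT_TRIGGER = 5   # unscheduled audit once this many outputs are flagged


def audit_reasons(last_audit: date, today: date, flagged_since_audit: int) -> List[str]:
    """Return the reasons an audit is due; an empty list means none is due."""
    reasons = []
    if (today - last_audit).days >= FREQUENCY_DAYS:
        reasons.append("scheduled")
    if flagged_since_audit >= FLAGGED_OUTPUT_TRIGGER:
        reasons.append("flagged-output trigger")
    return reasons
```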

Agents

Agents can draw incorrect or inappropriate conclusions, act in damaging ways, or even try to subvert human intentions. Reducing these risks requires policies for safe decisions, accountable actions, and permissible behavior.

Safe decisions. A modern governance program must include a policy that agent decisions fall within defined business, ethical, and legal boundaries. Supporting rules should include alerts and overrides that stop agents from making unsafe decisions. For example, an e-commerce agent should never decide to buy a product that exceeds the customer’s budget or comes from a suspicious merchant. The program’s standards can codify such processes with spending caps, approved merchant lists, escalation criteria, and so on.
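
The e-commerce example can be sketched as a check that runs before the agent commits to a purchase. The approved merchants, the per-purchase cap, and the rule that purchases above 80% of the customer’s budget go to a human are all hypothetical stand-ins for a program’s real standards.

```python
from enum import Enum


class Verdict(Enum):
    APPROVE = "approve"
    ESCALATE = "escalate"  # route to a human before buying
    BLOCK = "block"


# Hypothetical standards for an e-commerce purchasing agent.
APPROVED_MERCHANTS = {"merchant-a", "merchant-b"}
SPENDING_CAP = 500.0    # absolute per-purchase limit
ESCALATION_SHARE = 0.8  # escalate purchases above 80% of the customer's budget


def check_purchase(price: float, merchant: str, budget: float) -> Verdict:
    """Apply merchant, budget, cap, and escalation rules to a proposed purchase."""
    limit = min(budget, SPENDING_CAP)
    if merchant not in APPROVED_MERCHANTS or price > limit:
        return Verdict.BLOCK
    if price > ESCALATION_SHARE * budget:
        return Verdict.ESCALATE
    return Verdict.APPROVE
```

The three-way verdict reflects the distinction in the text between overrides that stop an unsafe decision outright and escalation criteria that hand a borderline decision to a person.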

Accountable actions. After decisions come actions. As a policy, agent actions must be traceable, controllable, and reversible. This might require rules for human review and approval of high-impact actions such as monetary transactions, customer recommendations, and communications to third parties. It also might require rules that evaluator models double-check decisions before agents act. Standards, meanwhile, define how to map each agent action back to the model outputs and decision logic that produced it.
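
One way to make actions traceable and controllable is to log every proposed action with a pointer to the model output that prompted it, and to hold high-impact actions until a human approves them. The sketch below uses hypothetical action types and identifiers and covers only traceability and approval; reversibility would need additional compensating logic.

```python
import uuid
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical action types that require human sign-off before execution.
HIGH_IMPACT = {"payment", "customer_recommendation", "third_party_message"}


@dataclass
class ActionRecord:
    action_id: str
    action_type: str
    model_output_id: str     # links the action to the model output that prompted it
    decision_rationale: str  # the agent's logged decision logic
    approved_by: Optional[str] = None
    executed: bool = False


AUDIT_LOG: List[ActionRecord] = []


def propose(action_type: str, model_output_id: str, rationale: str) -> ActionRecord:
    """Record a proposed action before anything happens."""
    record = ActionRecord(str(uuid.uuid4()), action_type, model_output_id, rationale)
    AUDIT_LOG.append(record)
    return record


def execute(record: ActionRecord) -> bool:
    """Execute unless the action is high-impact and still lacks human approval."""
    if record.action_type in HIGH_IMPACT and record.approved_by is None:
        return False  # held for human review
    record.executed = True  # a real agent would call the downstream system here
    return True
```

Logging at proposal time, rather than after execution, means held or rejected actions remain visible to auditors as well.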

Permissible behavior. GenAI models such as OpenAI o1 might hide their actions, deceive users, or otherwise subvert human intentions. To mitigate this risk, companies need a policy for anticipating and identifying subversive model behavior. They can enforce the policy with rules for applying adversarial tests to all logged agent decisions and actions. And they can standardize the supporting metadata as well as human intervention procedures.

Risky Business

Given all that can go wrong, it’s no surprise that the Global Risk Advisory Council recently ranked AI as the #1 reputational hazard for organizations. Governance teams can minimize the hazards of AI by embedding these policies, rules, and standards into everyday workflows, ensuring that teams design and operate agentic AI with accountability in mind.

By treating governance as a continuous, iterative discipline rather than a one-time checklist, they can contain risk while accelerating innovation. Those that strike this balance will unlock the full potential of agentic AI without sacrificing trust, compliance, or business integrity. The third and final blog in our series will explore the criteria that organizations can use to evaluate tools and platforms that enable this.