Neural networks have been applied to image problems with high levels of performance. However, it is extremely hard for most companies to train a neural network, because it requires resources they can’t afford: hiring Yann LeCun or another deep learning expert is really expensive, and so is speeding up computation with GPUs.

Heads Up!

This blog post is about an older version of Dataiku. To learn about how to do transfer learning with Dataiku in the latest version, take a look at the documentation in the Dataiku Academy.

In this blog post, we will first walk through some basics on neural networks and transfer learning. Then, since I just got back from an amazing holiday, I will use the INRIA Holidays dataset to show you how to tag images using deep learning and transfer learning, as well as how to build a nice visualization of the most exotic places you visited during your vacation.

What You Need In Order To Train Your Own Neural Network

Even with GPUs, the training process is very time-consuming. What’s more, you would need millions of labeled images to train your own deep learning model.

Training a neural network is a complex task

Most companies dealing with images have lots of unlabeled images and don’t want to spend months tagging them manually: they need pre-trained deep learning models! Why reinvent the wheel when models designed and trained by deep learning experts are available in just a few clicks?

Deep Learning Or Transfer Learning?

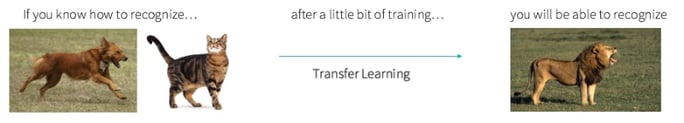

Transfer learning aims to improve the process of learning by using experience gained through the solution of a similar problem. Indeed, you can’t always find a model that corresponds exactly to your data. What you have to do is map your problem to an existing similar one.

Let’s imagine your child has learned what a cat and a dog are, and you offer her a book with lion pictures. With a little bit of training, I have no doubt she would be able to recognize a lion! That is what transfer learning is all about.

The transfer learning approach

A Business Use Case for Transfer Learning

Imagine you are an e-commerce company with thousands of flash sales available on your website every day. To view a product offer in detail, users have to click on a specific thumbnail, which is composed of an image and a short description. These thumbnail images contain hidden information, yet you don’t know how to use it.

It is definitely valuable to know which types of images a customer is attracted to, so that you can suggest relevant content to him or her. In other (data scientist) words, the images could provide additional features for a content-based recommendation system.

Walking Through a Practical Example

We will use a pre-trained neural network available on the Caffe Model Zoo. The model we have selected was trained for scene recognition on Places205, a database of 2M+ labeled images. With categories such as coast, hotel, swimming pool, or bedroom, this database fits our holiday images perfectly. The network is based on the VGG16 architecture, which was state-of-the-art on the Places205 challenge when I began my research into this topic.

We add more precise tags by exploring the SUN397 database, which is composed of 100,000+ images for scene recognition. Since that is not enough images to train a robust neural network from scratch, we use transfer learning: we extract the last convolutional layer features from the aforementioned VGG16 network and build a 2-layer model to learn the SUN397 labels from these intermediate features.
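To make the idea concrete, here is a minimal sketch of training a small 2-layer model on top of frozen features. It is not the code used for this project: the features are synthetic stand-ins for the VGG16 outputs, and the sizes, learning rate, and helper names (`forward`, `train_head`) are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for features extracted once from a frozen, pre-trained
# network (assumption: in a real pipeline each row would come from VGG16).
n_images, n_features, n_hidden, n_classes = 300, 64, 32, 5
class_centers = rng.normal(size=(n_classes, n_features))
labels = rng.integers(0, n_classes, size=n_images)
features = class_centers[labels] + 0.5 * rng.normal(size=(n_images, n_features))


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def forward(x, params):
    """Hidden ReLU layer followed by a softmax output layer."""
    w1, b1, w2, b2 = params
    hidden = np.maximum(0.0, x @ w1 + b1)
    return hidden, softmax(hidden @ w2 + b2)


def train_head(x, y, n_hidden, n_classes, epochs=300, lr=0.05):
    """Full-batch gradient descent on the cross-entropy loss."""
    w1 = rng.normal(scale=0.1, size=(x.shape[1], n_hidden))
    b1 = np.zeros(n_hidden)
    w2 = rng.normal(scale=0.1, size=(n_hidden, n_classes))
    b2 = np.zeros(n_classes)
    one_hot = np.eye(n_classes)[y]
    losses = []
    for _ in range(epochs):
        hidden, probs = forward(x, (w1, b1, w2, b2))
        losses.append(float(-np.mean(np.log(probs[np.arange(len(y)), y] + 1e-12))))
        # Backpropagation through the two trainable layers only.
        grad_logits = (probs - one_hot) / len(y)
        grad_w2 = hidden.T @ grad_logits
        grad_b2 = grad_logits.sum(axis=0)
        grad_hidden = grad_logits @ w2.T
        grad_hidden[hidden <= 0] = 0.0  # ReLU derivative
        grad_w1 = x.T @ grad_hidden
        grad_b1 = grad_hidden.sum(axis=0)
        w1 -= lr * grad_w1
        b1 -= lr * grad_b1
        w2 -= lr * grad_w2
        b2 -= lr * grad_b2
    return (w1, b1, w2, b2), losses


params, losses = train_head(features, labels, n_hidden, n_classes)
_, probs = forward(features, params)
accuracy = float((probs.argmax(axis=1) == labels).mean())
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}, training accuracy: {accuracy:.2f}")
```

The pre-trained network is never updated here. Only the two small layers are trained, which is why this approach needs far less data and compute than training a full network.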

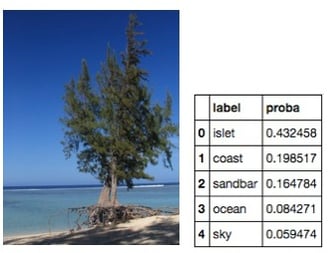

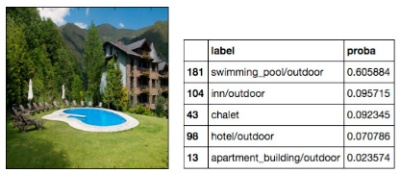

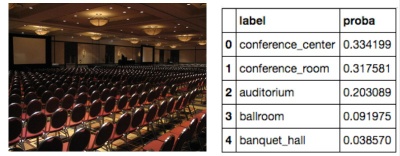

Let’s tag our images with the top five labels. I’ll let you appreciate the accuracy of the results:

Images tagged with the VGG16 neural network model trained on the Places205 dataset
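Picking the top five labels boils down to sorting the class probabilities returned by the network. Here is a small sketch of that step. The helper `top_k_tags` is hypothetical, and the scores are made up for illustration (they are not actual model outputs).

```python
import numpy as np


def top_k_tags(probabilities, class_names, k=5):
    """Return the k most probable (label, score) pairs, best first."""
    probabilities = np.asarray(probabilities, dtype=float)
    order = np.argsort(probabilities)[::-1][:k]
    return [(class_names[i], float(probabilities[i])) for i in order]


# Illustrative labels and made-up scores for a single image.
class_names = ["coast", "ocean", "sky", "hotel", "bedroom", "swimming pool", "sandbar"]
scores = [0.30, 0.25, 0.15, 0.02, 0.01, 0.07, 0.20]

tags = top_k_tags(scores, class_names)
for name, score in tags:
    print(f"{name}: {score:.2f}")
```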

Post Processing And Topics Extraction

Once our images are tagged, what do we do with them? As you can see in the above examples, a post-processing step is necessary. Some tags bring complementary information (ocean, sky, coast), while others bring redundant information (inn, chalet, hotel; conference center, conference room, auditorium). It would be useful to group tags according to what they represent and then build more general topics. For instance, beach scene = coast + ocean + sandbar. On the other hand, a tag should not be restricted to a single topic: the same tag can belong to very different scenes. I’m sure you wouldn’t pack the same clothes for a vacation in the snowy Alps as you would if you were going to the arid mountains of Colorado!

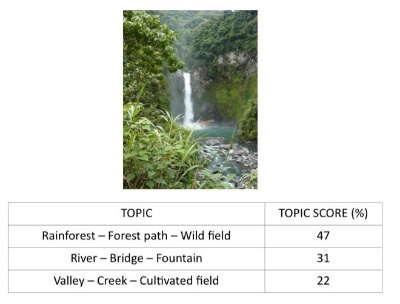

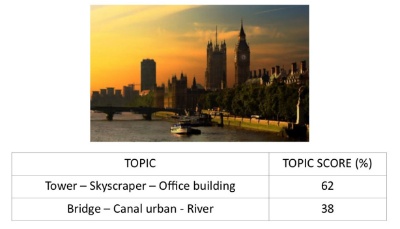

Non-negative matrix factorization (NMF) is an unsupervised learning technique that addresses this issue: it automatically extracts the topics we expect from the holiday dataset tags, and a tag can contribute to several topics. An image is now represented by 30 relevant topics. Let’s have a look at the new topic-based image representation:

Scored images in the topics space
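For readers who want to see what NMF does under the hood, here is a minimal sketch using the classic multiplicative update rules for the squared reconstruction error. The tiny image-by-tag matrix and the choice of 2 topics are assumptions made to keep the example readable (the project itself uses 30 topics), and `nmf` is a hypothetical helper rather than the implementation used in the workflow.

```python
import numpy as np


def nmf(v, n_topics, n_iter=500, seed=0, eps=1e-9):
    """Factorize a non-negative matrix v (images x tags) as w @ h.

    w holds the topic weights of each image and h holds the tag weights
    of each topic. Multiplicative updates keep both factors non-negative.
    """
    rng = np.random.default_rng(seed)
    n_rows, n_cols = v.shape
    w = rng.random((n_rows, n_topics)) + eps
    h = rng.random((n_topics, n_cols)) + eps
    for _ in range(n_iter):
        h *= (w.T @ v) / (w.T @ w @ h + eps)
        w *= (v @ h.T) / (w @ h @ h.T + eps)
    return w, h


# Toy data: rows are images, columns are tags (1.0 = tag present).
tag_names = ["coast", "ocean", "sandbar", "inn", "chalet", "hotel"]
image_tags = np.array([
    [1, 1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 0, 1],
    [0, 0, 0, 0, 1, 1],
], dtype=float)

w, h = nmf(image_tags, n_topics=2)
error = np.linalg.norm(image_tags - w @ h)

print("reconstruction error:", round(float(error), 3))
for topic_index, topic in enumerate(h):
    strongest = [tag_names[i] for i in np.argsort(topic)[::-1][:3]]
    print(f"topic {topic_index}: {strongest}")
```

Each row of `w` is the new, lower-dimensional description of an image, while each row of `h` shows which tags make up a topic.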

T-SNE 2D Representation With Dataiku DSS

We set the number of topics to 30, which is not easy to represent nicely. We therefore apply a t-SNE transformation to get a 2D visualization. This algorithm is useful for visualization because it reduces the dimension of your data while keeping similar points close together, which can make distinct clusters appear.

Before applying the t-SNE transformation, we post-process the output of the pre-trained neural network in three different ways:

- We directly apply the t-SNE transformation on intermediate outputs of the neural network (the last convolutional layer features)

- We perform an NMF on the top-5 label matrix

- We perform an NMF on the intermediate outputs of the neural network (the last convolutional layer features)
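The three variants above can be sketched with scikit-learn as follows. This is only an illustration under several assumptions: the project ran in a Dataiku DSS workflow rather than in this script, the arrays below are random stand-ins for the real network outputs, and the toy example uses 10 topics instead of 30 because it has so few images. The helper names `topics` and `embed_2d` are hypothetical.

```python
import numpy as np
from sklearn.decomposition import NMF
from sklearn.manifold import TSNE

rng = np.random.default_rng(0)
n_images, n_conv_features, n_labels = 60, 128, 40

# Random stand-ins (assumption): non-negative features, as after a ReLU,
# and a binary matrix marking the five labels kept for each image.
conv_features = rng.random((n_images, n_conv_features))
top5_labels = np.zeros((n_images, n_labels))
for row in top5_labels:
    row[rng.choice(n_labels, size=5, replace=False)] = 1.0


def topics(x, n_topics=10):
    """Topic weights of each image, obtained with NMF."""
    model = NMF(n_components=n_topics, init="nndsvda", max_iter=500, random_state=0)
    return model.fit_transform(x)


def embed_2d(x):
    """2D t-SNE coordinates (perplexity must stay below the number of images)."""
    return TSNE(n_components=2, perplexity=15, init="pca", random_state=0).fit_transform(x)


embeddings = {
    "last-layer": embed_2d(conv_features),
    "top-5 labels + NMF": embed_2d(topics(top5_labels)),
    "last-layer + NMF": embed_2d(topics(conv_features)),
}

for name, coordinates in embeddings.items():
    print(name, coordinates.shape)
```

With random inputs the resulting plots carry no meaning, of course. The point is only to show how the three pipelines differ in what is fed to t-SNE.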

Here is a short video of the Dataiku DSS workflow: you can visualize all the processing we have conducted for this project.

Dataiku DSS workflow: from images to post-processed features for visualization

How To Visualise Image Clusters In A Dataiku DSS Webapp

The t-SNE representation enables us to visualize image clusters thanks to a Dataiku DSS webapp. I’ll let you select one of the post-processing steps in the top-left corner and move your mouse over the images to check the relevance of the represented clusters.

Basically, without an NMF step, the image content varies continuously depending on where it is in the plane, but no cluster appears. In contrast, performing an NMF before applying the t-SNE transformation proves very useful for cluster detection. If you click on the third button, last-layer+NMF, you’ll see a distinct coral cluster at the top, a boat cluster on the left, and a ruins cluster at the bottom left.

Since similar images are close together in this representation, the topic-based image representation seems to make sense. We can be confident when working in the topics space.

Thirty relevant numerical features have been extracted from the images. With content-based methods, it is now quite easy to recommend a vacation destination to someone based on the characteristics they like: coral, boats, ruins, and others. Business use cases for this approach are easy to find, from e-commerce recommendation systems to customer targeting.
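As an illustration of such a content-based method, here is a minimal sketch that ranks images by cosine similarity in the topics space. The three-topic vectors are made up for readability (the real representation has 30 topics), and `most_similar` is a hypothetical helper, not part of the original project.

```python
import numpy as np


def most_similar(topic_vectors, query_index, k=3):
    """Indices of the k images closest to the query, by cosine similarity."""
    x = np.asarray(topic_vectors, dtype=float)
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    unit = x / np.maximum(norms, 1e-12)  # guard against all-zero rows
    similarity = unit @ unit[query_index]
    similarity[query_index] = -np.inf  # never recommend the query itself
    return np.argsort(similarity)[::-1][:k].tolist()


# Made-up topic weights; columns stand for coral, boat, and ruins.
vectors = [
    [0.9, 0.1, 0.0],  # image 0: mostly coral
    [0.8, 0.2, 0.1],  # image 1: mostly coral
    [0.1, 0.9, 0.0],  # image 2: mostly boat
    [0.0, 0.1, 0.9],  # image 3: mostly ruins
    [0.2, 0.8, 0.1],  # image 4: mostly boat
]

recommended = most_similar(vectors, query_index=0, k=2)
print(recommended)
```

Someone who liked image 0 would be shown the other coral image first, then the image whose topic mix is the next closest.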

Thanks to transfer learning, there is no need to worry about the network architecture, GPU settings, or the optimization parameters: pre-trained neural networks provide more than satisfying results and enable us to save time and money.