Once a data science project has progressed through data cleaning and preparation, analysis and experimentation, modeling, testing, and evaluation, it reaches a critical point. After significant time and effort have been invested and a viable machine learning model has been produced, that model needs to be operationalized, meaning deployed for use across the organization.

Before diving into the execution of operationalization, it’s always helpful to learn from the previous experimentation (and, ultimately, mistakes and failures) of others. This blog post rounds up four main reasons that operationalization efforts may fall flat so you can prevent them from happening in your own organization.

1. The Process Is Too Slow

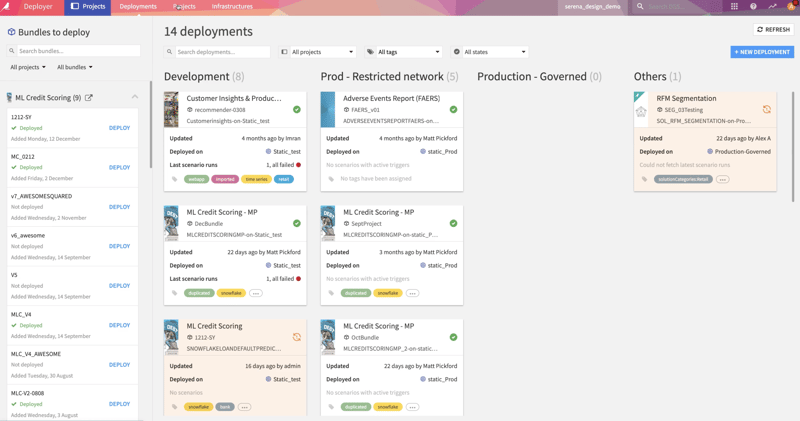

The reality of most analytics and AI projects is that they don’t bring real value to the business until they’re in a production environment. If this process isn’t happening quickly enough, both in terms of start-to-finish time to insight and in the ability to iterate rapidly once something is in production, operationalization efforts will falter. Beyond strong communication between the teams responsible for operationalization, tools that allow quick, painless incorporation of machine learning models into production are key to a scalable process.

Speed also matters for feedback: models in production should deliver timely results to those who need them. For example, if the data team is working with the marketing team to operationalize churn prediction and prevention emails, the marketing team should have immediate insight into whether the churn prevention emails sent to predicted churners are actually working, or whether they should re-evaluate the message or the target audience.
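One way the marketing team could get that insight is to compare retention among predicted churners who received the email with retention in a small holdout group that did not. The sketch below is illustrative only: the holdout group, the `GroupOutcome` type, the `retention_uplift` function, and all of the numbers are assumptions made for this example, not something described in the campaign above.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    """Outcome counts for one group of predicted churners (hypothetical type)."""
    customers: int  # customers in the group
    retained: int   # customers still active at the end of the observation window

    @property
    def retention_rate(self) -> float:
        # Guard against an empty group to avoid division by zero.
        return self.retained / self.customers if self.customers else 0.0


def retention_uplift(emailed: GroupOutcome, holdout: GroupOutcome) -> float:
    """Difference in retention rate between emailed and holdout groups."""
    return emailed.retention_rate - holdout.retention_rate


# Made-up numbers, for illustration only.
emailed = GroupOutcome(customers=1000, retained=720)
holdout = GroupOutcome(customers=200, retained=130)

uplift = retention_uplift(emailed, holdout)
print(f"Retention uplift from the email: {uplift:.1%}")
```

A positive uplift suggests the emails are helping; a value near zero or below is a signal to revisit the message or the audience. A real analysis would also check whether the difference is statistically meaningful before acting on it.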

2. Lines of Business Are Not Involved in the Process

Operationalization that happens in a vacuum, without any input from business teams, is doomed to failure, because the projects delivered tend not to address real needs, or to address them only superficially. Even when data team members are embedded in the lines of business, the two groups need to work closely together every step of the way to align project goals with business realities.

Similarly, operationalization should work hand in hand with self-service analytics: projects that start as self-service analytics may be good candidates for operationalization. When the teams don’t work together, these opportunities are easily missed.

3. Lack of Alignment Between Data Science, Engineering, and/or Traditional IT

Data projects are beasts of their own when it comes to integrating into a production environment. On the data science side, these projects need to provide reliable, business-validated predictions or outcomes, not only at first but also over time.

On the data engineering side, these projects rely on advanced data processing systems that need to be tuned and monitored. On the traditional IT side, they need to meet all of the requirements of enterprise-ready, robust IT systems. A lack of coverage or alignment across these three dimensions quickly leads, at best, to an inability to get data innovation up and running and, at worst, to actual in-production failures.

4. Lack of Follow-Up and Iteration

The point of rapid operationalization is to get models out of a sandbox and into production quickly to evaluate their impact on real business processes. If models are operationalized and then forgotten, they could, over time, have adverse effects on the business. Instead, constant monitoring, tweaking, and follow-up are key.
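As a rough illustration of what ongoing monitoring might look like, the sketch below tracks a model's accuracy over its most recent predictions and flags it for review when accuracy falls too far below the level measured at deployment. The class name, the window size, and the tolerance are all hypothetical choices for this example; real monitoring would likely track more than one metric and account for delays in learning the true outcomes.

```python
from collections import deque
from typing import Optional


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent predictions (hypothetical helper)."""

    def __init__(self, baseline_accuracy: float, window_size: int = 100,
                 tolerance: float = 0.05) -> None:
        self.baseline_accuracy = baseline_accuracy  # accuracy measured at deployment
        self.tolerance = tolerance                  # acceptable drop before review
        # A bounded deque discards the oldest outcome once the window is full.
        self._outcomes = deque(maxlen=window_size)

    def record(self, predicted, actual) -> None:
        """Store whether a single prediction matched the observed outcome."""
        self._outcomes.append(predicted == actual)

    @property
    def accuracy(self) -> Optional[float]:
        """Accuracy over the current window, or None if nothing is recorded."""
        if not self._outcomes:
            return None
        return sum(self._outcomes) / len(self._outcomes)

    def needs_review(self) -> bool:
        """True when recent accuracy is below baseline minus tolerance."""
        current = self.accuracy
        return current is not None and current < self.baseline_accuracy - self.tolerance


# Made-up example: 7 of the last 10 predictions were correct.
monitor = RollingAccuracyMonitor(baseline_accuracy=0.90, window_size=10)
for predicted, actual in [(1, 1)] * 7 + [(1, 0)] * 3:
    monitor.record(predicted, actual)

if monitor.needs_review():
    print("Model accuracy has degraded; schedule a review or retraining.")
```

The fixed-size window means that older predictions age out automatically, so the check reflects how the model is behaving now rather than how it behaved at launch.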

Putting It All Together

The ultimate success of an analytics or AI project comes down to contributions from individual team members working together toward a common goal. This means effective contributions go beyond specialization in an individual skill set; team members must be aware of the bigger picture and embrace project-level requirements, from diligently packaging both code and data to creating web-based dashboards for the project’s business owners.

In today’s highly competitive environment, individual silos of knowledge will hinder team productivity and effectiveness. Best practices, model management, communications, and risk management are all areas that must be mastered to operationalize a project that drives repeatable business value for the organization.