By writing this article, I wanted to gather statements and thoughts about DataOps to create a better understanding of the role and its future. In the era of infrastructure as code and cloud, do we really need yet another movement? Is DataOps yet another trendy label brought up in a tiny circle of already-convinced early or lonely adopters?

I chose to quell my preconceived notions, and I instead dug around looking for grey areas or gaps in my understanding of DataOps to fuel my writing. I don’t pretend to draw an irrefutable line of truth here, but I hope this will be a base for guidance and spur some input from the community on what DataOps is (or should be).

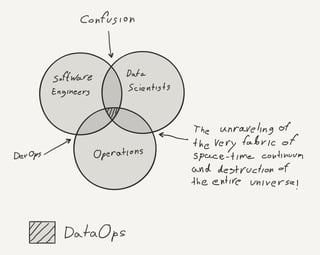

Is DataOps Just DevOps?

It is at this moment I have to describe my understanding and give my definition of DataOps. I will assume that you are already familiar with DevOps (I therefore won’t explain much and risk losing the attention of those that are familiar, but if you’re not, I like Rackspace’s explanation — “an approach in which software developers and IT operations work together to produce software and infrastructure services rapidly, frequently and reliably by standardizing and automating processes”).

There's a fundamental difference between DataOps and DevOps, but it's critical to understand both.

DevOps and DataOps are similar in that they grow out of a need to scale delivery. DevOps is necessary to meet the challenge of shipping more and more code to an ever more complex production environment. The need for DataOps arises from the need to productionalize a rapidly increasing number of analytics projects and then to manage their lifecycles.

Even so, there’s a fundamental difference between DevOps and DataOps: its underlying influence.

DevOps is driven by application delivery. That is, its components and dependencies, containers and lifecycle. DataOps is driven by data lifecycles and insights. Data lifecycles are something DevOps doesn’t concern itself with.

If we take a dashboard with charts, a data pipeline, or a recommender system, the data is much more critical at every time scale. Obviously, every application ingests or produces data, and every interaction with data is made through applications. So that means more than ever, the two disciplines are intrinsically tied together.

But wait! I still have a good chance to fight my own belief here!

OK, So What’s the Difference?

If DevOps is all about a new way of thinking about applicative service delivery and automation, and if my application sets are Spark applications and Hadoop jobs or a model in production, what’s the difference?

One of the primary differences between the two that strikes me immediately is that data is always in production. When a data scientist works on a machine learning model, (s)he will always need real (and most of the time production) data as much as a data analyst who’s working on an efficient monitoring dashboard.

Yet there is still a level of understanding that data teams and ops have to share during the bootstrap phase and for the whole project lifetime. Storage format choice, execution engines, computation type, model performance, metrics dynamicity, and monitoring in production the technical limitations for each technology used, etc. - none of these elements can be done without different insights and perspectives from both sides.

There's a level of understanding that data teams and ops have to share for the whole project lifetime.

If DevOps aims to bring agility, I would say that DataOps aims to create a symbiosis. If there’s a sudden change in data that a model relies on and you have the right diagnostic scenarios in production, the business team would be alerted to deal more carefully with the latest insights, and the data team will be notified to investigate the change or revert a library upgrade and rebuild the related partition.

How DevOps Fits in the Bigger Picture

In a data team, each role needs to understand the work of the others. The scientists need to know which library changed (let’s say a security library to which an upgrade is inevitable), and ideally DataOps need to be aware of the dependencies of each project.

Automation of platforms, projects and products evolution, definition of business-driven technical monitoring and in-production testing, elasticity of technical resources: this is what DataOps should be."

DevOps is part of a team, and ideally, they need to be aware of the dependencies of each data project.

It has to be said that there is an undeniable similarity between statistical process control introduced by lean and the continuous delivery processes that could be applied for many data projects. In an organization, a data project cannot be isolated and implemented without regard for other projects.

In data science you have a goal, but you rarely have detailed specifications about what data should be used or not. Sometimes you’ll end up using completely different sources than what you first planned. And you’ll need real data (mostly production data), which means part of DataOps’ challenge is having to monitor input data quality constantly.

When Is It Time to Bring In DataOps?

The right moment to introduce DataOps in an organization is when the amount and diversity of data makes it more valuable than most applications (instead of being just a persistence layer). This threshold was reached very quickly by internet giants, which explains why they are so advanced on the matter.

The fact is, once your data becomes a strategic asset, there are necessary transformations of business services and processes, and the operational requirements and priority evolves accordingly. Moreover, in addition to the classic time-to-market value brought by DevOps, there are now new optimization areas like resource management, data quality, and new business services."

Times They Are A Changin’

Despite the fact that DataOps is a relatively new discipline, we can already observe many changes in the field today. Two of the most obvious examples to illustrate this are:

- Log files older than one month that used to be garbage 10 years ago now not only are very efficient for analytics, but they are the foundation of very innovative products like Apache Kafka.

- A language dedicated to research and thus very unstable over time breaks in production, but the insights produced are worth the risks and instability it brings.

DataOps: Job or Standard?

DataOps is about putting and maintaining data, models, insight, and workflows in production. Size and process in your organization may allow the designation of some of these tasks to your data scientists or data engineering.

But ultimately, these tasks require commitment and intimate knowledge of systems and production maintenance, and they risk slowing data scientists and data engineering down on their main assignment. For these reasons, it’s entirely expected that DataOps will become widely recognized as a job of its own.

Taking this one step further, I personally think that we’re eventually going to see specialized DataOps roles for certain domains like connected- or self-driving cars, predictive maintenance, deep learning, or simply massive data ingestion.

With feedback and time, the DataOps role will continue to be defined in the years to come.

Though the first wave of DataOps might be comprised of people like software engineers specialized in distributed systems or data scientists familiar with agile process and continuous integration, feedback and time will continue to refine the DataOps role and its responsibilities. Have thoughts, feedback, questions? Agree (or disagree) about the future of DataOps? Don’t hesitate to reach out.