The great statistician George Box worked in many areas — time-series analysis, Bayesian inference — and earned a handful of prestigious awards, on top of introducing the response surface methodology (RSM).

TL;DR: he was a really smart guy who knew his stuff. The kicker? He was probably best known for coining the expression “All models are wrong” back in the 1970s.

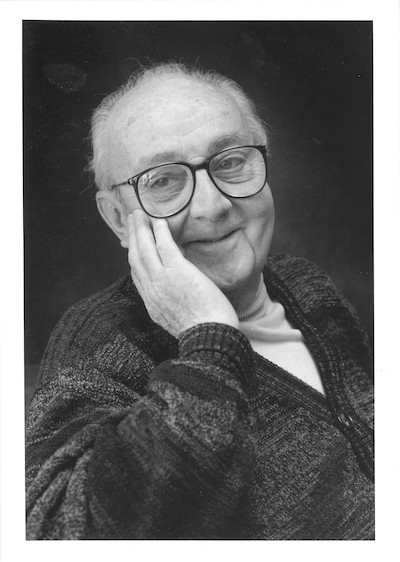

Statistician George Box, early skeptic of model usefulness

Now, you might be saying to yourself, “Yeah, but that was the 1970s. Now we have deep learning and AI and fancier technologies and stuff, and you can’t say that all those models on which our systems and lives are increasingly based are all wrong. He couldn’t have been talking about those models.”

Well… he wasn’t, at least not explicitly. But also, he was, at least broadly. And once you read the second (and less often quoted) half of the quote, its application to today’s world of AI becomes even clearer:

“The practical question is how wrong do (models) have to be to not be useful.” - George Box

Bonini’s paradox is, in many ways, a continuation of Box’s thinking (and is reflected again and again in the work of many other modern statisticians, mathematicians, and even philosophers). It is the idea that the model of a complex phenomenon is just as hard to understand as the phenomenon that it is supposed to explain. Or more generally, that models of complex systems can become so complex that they’re essentially unusable.

An often-cited example to contextualize the paradox is imagining a map that is at a 1:1 scale. Detailed? Yes. Useful? Not exactly.

What We Can Learn

If these ideas surrounding complexity (and usefulness) in models have been discussed for decades, how did we seemingly get to a place where machine learning models, and by extension the outputs of AI, are accepted as truths?

Machine learning models are already at work every day: approving or denying loans, setting prices, distinguishing loyal customers from those likely to churn, and much more. Generally, these decisions go unquestioned. Which is maybe fair, for now: the issues that AI systems address today are relatively non-complex, though I’d argue we’re really starting to toe this line.

At what point do things start to become so complex that they are no longer useful?

One can imagine that after organizations tackle the low-hanging fruit, they will try to take on increasingly compounded business questions. This means developing very complex systems to handle those questions. So at what point will those systems become too complex to be useful? And how will companies respond when customers or users start questioning models and stop accepting them as truths?

What Do We Do Next?

The good news is that regardless of how we got here, things are already beginning to shift. The world is starting to recognize that the buck stops with the companies and people who develop models: it is on them to provide explanations. Interpretable AI has joined the mainstream news cycle. And that’s great, but it shouldn’t be up to consumers or regulators to demand interpretable AI (which has largely been the case to date).

It’s also up to companies to think about what level of complexity in a model is useful, and what level of complexity is so high that it’s actually too complex to explain the situation at hand. That means if a model is built in order to answer a specific question, complexity may be appropriate, because it can digitally “execute” and create predictions and outcomes faster than working it out in “real life.”

But introducing complexity in order to achieve understanding or explanation of a problem at hand may not be the right move. Nebulous use cases for AI may end up with needlessly complex models that do more harm than good. So the next time you or your organization is implementing a solution, think of Bonini’s paradox.