Today, a single technological innovation can turn a market upside down in a couple of days. However, when the time comes to provide the infrastructure these technologies need, decision makers and enterprise architects risk disrupting their existing environment when integrating new solutions, all while trying to contain costs. So what are they to do?

Key Data Architecture Considerations

- Tech companies have taken full advantage of these technologies and mastered them to widen the profitability gap with their competitors.
- Open source communities have shown that they can pool resources to create and maintain the software needed to bring this disruptive approach to any organization.
- Startups today rely heavily on these communities to disrupt every industry.

Let’s take the example of Hadoop. It makes sense to bet on technologies like this one. Not only does it enable distributed computing on any type of data but, more importantly, it offers flexibility and runs on commodity hardware, unlike existing business intelligence (BI) appliances.

A company can start quickly with a small new infrastructure, reassign existing resources, or even build its data lake in the cloud. None of these options will lock it into the same vendor forever, as is generally the case with data warehousing appliances. Even though the cloud may seem expensive today, cloud providers are increasingly competitive, so it’s a fairly safe bet for now.

This is crucial: you don’t want to discover that you can’t scale up on the day your chief data scientist runs a revolutionary algorithm that needs a cluster twice as large, only to see the project postponed for a month while you renegotiate a vendor contract. You should always be prepared to rethink your architecture.

What Dataiku Brings to the Mix

This topic comes up a lot with the clients I work with. They want to build great predictive services based on data from Hadoop or their existing DBMS, but also from simple log files. Dataiku connects to more than 40 different types of data storage technologies, including Hadoop, Spark, and more than 13 SQL and NoSQL databases. This means it can be used by every type of organization on any type of data project.

It’s important to know that once you create a project, the tools you’re working with will let you scale up as much as you need. And as anyone responsible for infrastructure management or investment knows, your analytics department will expect you to support and integrate all the technologies you use today, as well as any you might adopt in the future.

Infrastructure management can benefit from data as well. From predictive maintenance to security audits, there are many use cases that can help you improve your existing tools or set up new ones. For instance, if your marketing campaign is more successful than expected, you can predict when your web portal will need more nodes and add them right before your applications slow down (goodbye auto scaling, hello predictive scaling). You can even predict the impact of a failure in one of your systems.
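To make the difference between reactive and predictive scaling concrete, here is a minimal sketch in plain Python. It is not how Dataiku implements this; it assumes a deliberately simple model (a least-squares linear trend fitted to recent load readings) as a stand-in for a real forecasting model. The function names `forecast_load` and `nodes_needed`, the per-node capacity, and the 20% headroom are all hypothetical illustration values.

```python
import math
from typing import Sequence


def forecast_load(history: Sequence[float], steps_ahead: int) -> float:
    """Extrapolate a least-squares linear trend fitted to `history`.

    `history` holds one load reading (e.g. requests per second) per time
    step, oldest first. Returns the projected load `steps_ahead` steps
    after the last reading. A linear trend is an assumption made for
    illustration; a production model would be richer.
    """
    n = len(history)
    if n < 2:
        raise ValueError("need at least two readings to fit a trend")
    xs = range(n)
    mean_x = (n - 1) / 2
    mean_y = sum(history) / n
    # Ordinary least-squares slope and intercept.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, history))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return intercept + slope * (n - 1 + steps_ahead)


def nodes_needed(load: float, capacity_per_node: float, headroom: float = 0.2) -> int:
    """Nodes required to serve `load` with a safety margin (never fewer than one)."""
    return max(1, math.ceil(load * (1 + headroom) / capacity_per_node))


if __name__ == "__main__":
    # Hypothetical traffic: requests/s per 5-minute interval during a campaign.
    traffic = [100, 120, 140, 160, 180]
    # Assume a new node takes 3 intervals (15 minutes) to come online,
    # so we size the cluster for the load expected 3 steps from now.
    expected = forecast_load(traffic, steps_ahead=3)
    print(expected, nodes_needed(expected, capacity_per_node=50))
```

With these numbers the trend projects 240 requests/s, so the sketch provisions 6 nodes ahead of time, whereas sizing on the latest reading alone (180 requests/s) would give only 5 and leave the portal short once the new traffic arrives. The key design choice is forecasting over the provisioning lead time rather than reacting to the current load.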

So Dataiku could make work easier both for you and for the teams you support, helping you along the way to your own data revolution.

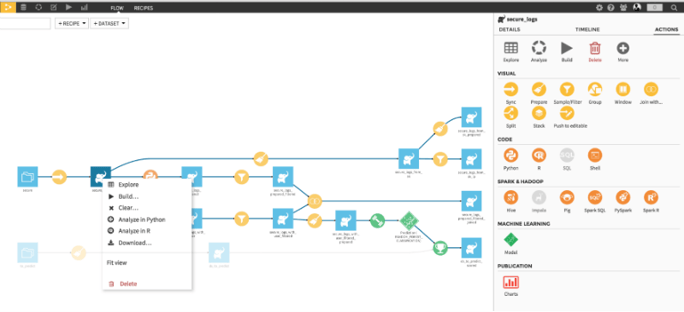

An example of a predictive maintenance workflow with Dataiku.