This is a guest post from our friends at Indizen-Scalian, experts in the entire business intelligence chain and in transforming financial performance processes in the enterprise. They are an international company specializing in solutions for companies seeking to carry out their digital transformation through big data, cloud, cybersecurity, or AI projects.

Several diseases of the respiratory system can be diagnosed by visually analyzing chest X-ray (CXR) images. In this article, we illustrate how a pipeline of deep learning models helped doctors interpret CXRs by directing their attention to the most significant parts of the image. This is not meant to be a technical deep dive; instead, it gives an end-to-end view of Dataiku. It is therefore intended for business managers and anyone who wants to learn which Dataiku features are involved in a use case like this one.

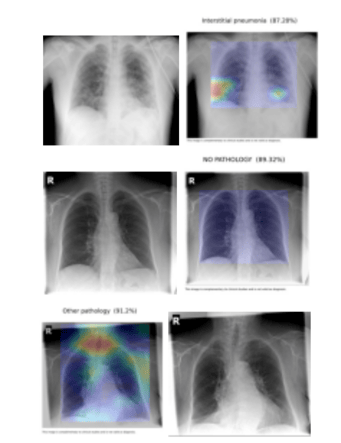

Although we only show classification into three classes (interstitial pneumonia, other pathology, and no pathology), it is possible to further identify the most likely disease among about 15 other categories, which were grouped into the "other pathology" class. For more details, please refer to Cohen et al.
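To make the grouping concrete, here is a minimal Python sketch of collapsing fine-grained diagnoses into the three displayed classes. The label strings and the `to_three_classes` helper are hypothetical; the actual categories come from the dataset described by Cohen et al.

```python
# Hypothetical label sets; the real categories come from the dataset by Cohen et al.
INTERSTITIAL_PNEUMONIA = {"interstitial pneumonia"}
NO_PATHOLOGY = {"no finding"}


def to_three_classes(fine_label: str) -> str:
    """Collapse a fine-grained diagnosis into one of the three displayed classes."""
    label = fine_label.strip().lower()
    if label in INTERSTITIAL_PNEUMONIA:
        return "interstitial pneumonia"
    if label in NO_PATHOLOGY:
        return "no pathology"
    # Every remaining category (about 15 of them) falls into a single bucket.
    return "other pathology"


for raw in ["Interstitial pneumonia", "No finding", "Tuberculosis"]:
    print(f"{raw} -> {to_three_classes(raw)}")
```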

Due to the global health crisis, detecting interstitial pneumonia was especially relevant, as it is one of the main lung conditions associated with COVID-19. Our ultimate goal is to develop a decision support tool for radiologists that lets us collect feedback on the model's results through validation by doctors. This feedback allows us to keep improving the model and make it more accurate.

The X-Ray Image Set

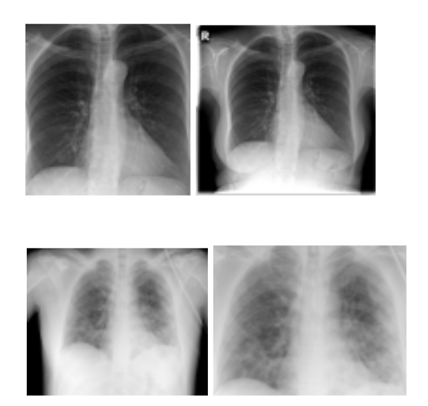

Like any other machine learning (ML) project, we started by making sure that we were not affected by the Garbage In, Garbage Out (GIGO) problem. After several meetings with the doctors to understand their needs and weigh our alternatives, we decided to train the models on frontal CXR images.

Unfortunately, even with the best resources and partners, images are not always correctly labeled, may come from different X-ray machines, or may be taken in different positions. We could not risk feeding the models wrong inputs, especially given the complexity and importance of detecting abnormalities in medical images. For more on how an active learning labeling framework can assist with biomedical imaging, check out this Dataiku ebook.

Therefore, we decided to start the model pipeline with an image discriminator based on a generative adversarial network (GAN) and a more specific lateral/frontal X-ray discriminator.

The Discriminator

Why use an image discriminator? It prevents the model from learning from images that have nothing to do with the training data. It ensures not only that all images belong to the same distribution, but also that they are in the correct orientation.
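A minimal sketch of how such a gate could sit at the front of the pipeline, assuming each discriminator is exposed as a function that returns a score between 0 and 1. The names `gate_images`, `in_distribution_score`, and `frontal_probability`, as well as the 0.5 thresholds, are illustrative assumptions rather than the project's actual code.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class GateResult:
    accepted: List[str]
    rejected: List[str]


def gate_images(
    image_ids: Sequence[str],
    in_distribution_score: Callable[[str], float],  # hypothetical GAN discriminator score
    frontal_probability: Callable[[str], float],    # hypothetical lateral/frontal classifier score
    dist_threshold: float = 0.5,                    # assumed cut-off
    frontal_threshold: float = 0.5,                 # assumed cut-off
) -> GateResult:
    """Keep only images that look like in-distribution CXRs AND are frontal views."""
    accepted: List[str] = []
    rejected: List[str] = []
    for image_id in image_ids:
        looks_like_cxr = in_distribution_score(image_id) >= dist_threshold
        is_frontal = frontal_probability(image_id) >= frontal_threshold
        if looks_like_cxr and is_frontal:
            accepted.append(image_id)
        else:
            rejected.append(image_id)
    return GateResult(accepted, rejected)


# Toy scores standing in for real model outputs.
dist_scores = {"a": 0.9, "b": 0.2, "c": 0.8}
frontal_scores = {"a": 0.95, "b": 0.9, "c": 0.1}
result = gate_images(["a", "b", "c"],
                     lambda i: dist_scores[i],
                     lambda i: frontal_scores[i])
print(result.accepted, result.rejected)  # ['a'] ['b', 'c']
```

Rejected images never reach the segmentation or classification steps, so downstream models only ever see inputs resembling their training data.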

The Biomedical Image Segmentation

Okay, now we are dealing with frontal X-rays... but they may not have been taken by the same person or on the same machine, and the size of patients' chests can vary significantly. We want our model to look only at features of the lung area.

For this, we developed a U-Net model. The U-Net architecture builds on the fully convolutional network (FCN) and is designed to produce better segmentation results in medical imaging. It was introduced by Olaf Ronneberger, Philipp Fischer, and Thomas Brox in 2015 for biomedical image segmentation.
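Once the U-Net has produced a binary lung mask, one simple way to obtain a lung-centered image is to blank out every pixel outside the mask and crop to the mask's bounding box. The Python sketch below assumes the image and mask are same-sized 2D lists; `mask_and_crop` and `lung_bounding_box` are hypothetical helpers, and the project may have centered the lungs differently.

```python
from typing import List, Tuple

Image = List[List[float]]
Mask = List[List[int]]  # 1 = lung pixel, 0 = background (thresholded U-Net output)


def lung_bounding_box(mask: Mask) -> Tuple[int, int, int, int]:
    """Return (top, bottom, left, right) of the lung pixels, inclusive."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        raise ValueError("segmentation mask contains no lung pixels")
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return min(rows), max(rows), min(cols), max(cols)


def mask_and_crop(image: Image, mask: Mask) -> Image:
    """Zero out non-lung pixels, then crop to the lung bounding box."""
    top, bottom, left, right = lung_bounding_box(mask)
    return [
        [image[r][c] if mask[r][c] else 0.0 for c in range(left, right + 1)]
        for r in range(top, bottom + 1)
    ]


image = [[float(4 * r + c + 1) for c in range(4)] for r in range(4)]
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
print(mask_and_crop(image, mask))  # [[6.0, 7.0], [10.0, 0.0]]
```

A real pipeline would also resize the crop to the classifier's input size; that step is omitted here.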

So, Doctor, What Seems to Be the Problem?

The lung-centered CXR images are then passed through a deep learning model based on the DenseNet architecture. Processing the most significant convolutional layer into a heat map highlights the pixels of the image that the doctor should pay attention to. Note that the doctor always had the final say, and these results were only meant to help them reach a diagnosis.
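The article does not say exactly how the heat map is computed. One common technique for networks like DenseNet, which end in global average pooling followed by a linear classifier, is the class activation map (CAM): a weighted sum of the last convolutional layer's feature maps, using the classifier weights of the class of interest. The pure-Python sketch below illustrates that idea on toy numbers; the function name and inputs are assumptions, and the project may have used a variant such as Grad-CAM.

```python
from typing import List, Sequence

FeatureMap = List[List[float]]


def class_activation_map(
    feature_maps: Sequence[FeatureMap],  # K maps of size H x W from the last conv layer
    class_weights: Sequence[float],      # K classifier weights for the class of interest
) -> FeatureMap:
    """CAM-style heat map: weighted sum of feature maps, ReLU, then scaled to [0, 1]."""
    if len(feature_maps) != len(class_weights):
        raise ValueError("need exactly one weight per feature map")
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    # Weighted sum over channels, keeping only positive evidence for the class (ReLU).
    cam = [
        [max(0.0, sum(w * fmap[i][j] for w, fmap in zip(class_weights, feature_maps)))
         for j in range(width)]
        for i in range(height)
    ]
    # Rescale so the strongest location is 1.0, ready to render as an overlay.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 2.0], [0.0, 1.0]],
]
weights = [0.5, 1.0]
print(class_activation_map(feature_maps, weights))  # [[0.25, 1.0], [0.0, 0.5]]
```

In practice, the low-resolution map is upsampled to the X-ray's size and blended over it to produce the heat-map overlay shown to the doctor.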

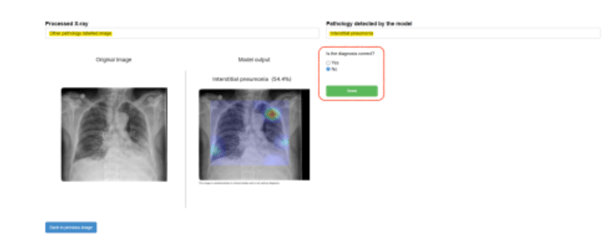

Dataiku Webapp Allows the Model to Get Feedback

Thanks to Dataiku's built-in webapp deployment functionality, we developed a decision support application for doctors. When diagnosing an image, doctors could give feedback on the model's output, and this information was stored so the model could keep learning from expert feedback. Combining the model's prediction with the doctor's experience and knowledge brought the overall performance on the task closer to the Bayes error, the lowest error rate any classifier can achieve. This project is an example of how ML can help humans perform better on very complex tasks.
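As an illustration of the feedback loop, here is a plain-Python sketch that records each doctor's verdict next to the model's prediction and pulls out the cases where the doctor disagreed. It deliberately does not use Dataiku's API; the `Feedback` fields and helper functions are hypothetical, and in the real application storage is handled through the Dataiku webapp.

```python
import io
import json
from dataclasses import asdict, dataclass
from typing import Iterable, List, TextIO


@dataclass
class Feedback:
    image_id: str
    model_label: str         # class predicted by the model
    model_confidence: float  # model's probability for that class
    doctor_label: str        # diagnosis confirmed or corrected by the doctor


def append_feedback(record: Feedback, sink: TextIO) -> None:
    """Store one feedback record as a JSON line."""
    sink.write(json.dumps(asdict(record)) + "\n")


def load_feedback(lines: Iterable[str]) -> List[Feedback]:
    """Parse JSON lines back into Feedback records, skipping blank lines."""
    return [Feedback(**json.loads(line)) for line in lines if line.strip()]


def agreement_rate(records: List[Feedback]) -> float:
    """Share of cases where the doctor kept the model's label (0.0 if no feedback yet)."""
    if not records:
        return 0.0
    agreed = sum(r.model_label == r.doctor_label for r in records)
    return agreed / len(records)


def corrections(records: List[Feedback]) -> List[Feedback]:
    """Cases the doctor relabeled."""
    return [r for r in records if r.model_label != r.doctor_label]


buffer = io.StringIO()
append_feedback(Feedback("img-001", "no pathology", 0.81, "no pathology"), buffer)
append_feedback(Feedback("img-002", "other pathology", 0.64, "interstitial pneumonia"), buffer)
records = load_feedback(buffer.getvalue().splitlines())
print(agreement_rate(records))                     # 0.5
print([r.image_id for r in corrections(records)])  # ['img-002']
```

Cases where the doctor corrected the model are natural candidates for review before the next training round.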

Building this project has allowed us to apply similar techniques to other problems. The closest one, which also works with X-ray images, is bone age prediction, where the workflow is practically the same. Other medical imaging projects we have carried out with Dataiku include skin cancer detection in dermatology, breast cancer detection, and the analysis of knee and prostate MRIs.

Dataiku has enabled us to build decision support web applications for doctors and to collect information on the models' results so that we can keep improving their performance. With these applications, doctors can run the models on specific images, get a result quickly, and give feedback on that result.