In the first webinar of our Generative AI bootcamp series, “Bridging the Gap Between the Model and the Business, ft: Firstmark Capital, Hugging Face, and Stochastic,” Dataiku CEO Florian Douetteau asked his fellow panelists to name some of the biggest misconceptions about Large Language Models (LLMs) that they’d seen so far:

“Number one is that bigger models always perform better. Sorry, but no. This one is kind of fading away, slowly. The next one I’m facing is ‘I’ll never need to fine-tune, I’ll just do prompt engineering, whatever that means.’”

- Julien Simon, Chief Evangelist, Hugging Face

Matt Turck, Managing Director at Firstmark Capital, said, “Generative AI is AI. There’s been so much air cover on ChatGPT and so many people who are new to AI, which is a beautiful thing (ChatGPT has made AI mainstream). But so many people jump to the conclusion that that’s it, that’s AI, and completely misunderstand that AI (and Dataiku is a perfect example of this) has been deployed in the enterprise at scale for a long time, delivering real ROI. All of those use cases are not going away; rather, they are becoming much more commonplace across enterprises in general. Next, AI and Generative AI are becoming commoditized, so AI is no longer deep tech; it’s just something available as an API that anybody can use, which echoes the conversation in 2016–2017 around TensorFlow.”

“When people say ‘training the model’ or ‘training the LLM,’ oftentimes they are just describing an update to their RAG (Retrieval Augmented Generation) system, when you are actually not doing anything to the model, just updating your databases and indexes. I see this quite often: people use the term ‘training’ and misuse it to describe that process.

Secondly, there’s this idea that LLMs can do everything and should be the solution to everything, which is obviously not true. You can use BERT or smaller models for a lot of applications, and using bigger models for them is overkill and a waste of resources.” - Glenn Ko, CEO, Stochastic

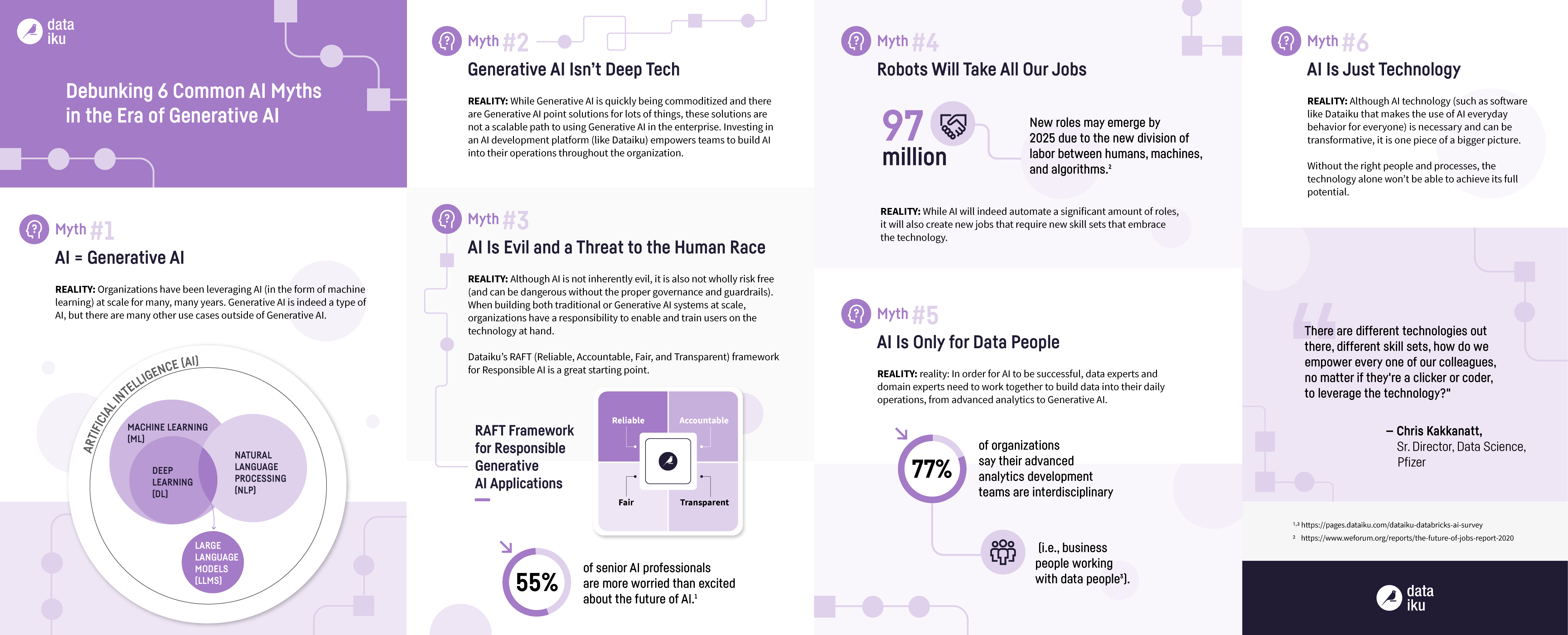

We’ve highlighted some of these key myths (and a few others) in a helpful infographic below:

Taking Myth Busting a Few Steps Further

Myth: AI Is Evil and a Threat to the Human Race

As the developers and consumers of AI tools and systems, we are more of a risk to ourselves if we don’t exercise caution in how we go about our AI experiments and projects. When building traditional and Generative AI systems at scale, organizations have a responsibility to enable and train their users on the technology at hand. Indeed, the increased investment in and use of AI presents risks such as:

- AI systems can behave in unexpected and inadequate ways in a production environment (versus the original design or intent).

- Models may reproduce or amplify biases contained in data.

- More automation might mean fewer opportunities for detecting and correcting mistakes or unfair outcomes.

A June 2023 survey of 400 senior AI professionals by Dataiku and Databricks showed that people across multiple industries are anxious about the future of AI. With the rise of Generative AI, we are in uncertain waters, to be sure. Our take? Turning this worry into excitement will take more stringent regulations and more robust Responsible AI policies across more companies and teams.

Our latest ebook unpacks the risks of Generative AI and introduces the RAFT (Reliable, Accountable, Fair, and Transparent) framework for Responsible AI, showing how it can be applied to both traditional and Generative AI systems. Get your own copy of the framework here.

More widely, Responsible AI fits within an AI Governance framework, a requirement for any organization aiming to build efficient, compliant, consistent, and repeatable practices when scaling AI. For example, AI Governance policies will document how AI projects should be managed throughout their lifecycle (what we know as MLOps) and what specific risks should be addressed and how (Responsible AI). In order to create human-centered AI grounded in explainability, responsibility, and governance (and eliminate any concerns about an AI-initiated “Doomsday”), organizations need to:

- Provide interpretability for internal stakeholders

- Test for biases in their data and models

- Document their decisions

- Ensure models can be explained so organizations can accurately identify if something is wrong, causes harm, or involves risk of harm

- Create a data culture of transparency and diversity of thought

- Establish a governance framework for data and AI

Further, AI is used to drive positive outcomes every day. Dataiku customer Vestas, a global leader in sustainable energy solutions, works to design, manufacture, install, develop, and service wind energy and hybrid projects all over the world. To date, with over 160 GW of wind turbines installed in 88 countries, Vestas has already prevented 1.5 billion tons of CO2 from being emitted into the atmosphere. Using a tool created with Dataiku, the company has reduced costs on express shipments by an estimated 11-36%.

Myth: Robots Will Take All Our Jobs

AI is a tool that can augment the human workforce and help teams work in newer and smarter ways. With Generative AI, it’s helpful to think about the “centaur” approach popularized by chess grandmaster Garry Kasparov. Named after the half-human, half-horse creature of myth, this approach applies a blend of human and AI capabilities to tasks in which AI is deeply ingrained.

Here, AI becomes an invaluable tool, augmenting human potential rather than replacing it. New jobs will also arise, such as prompt engineer: someone tasked with designing and refining the prompts that steer Generative AI tools toward more accurate and relevant responses to the questions people are likely to pose.

“Human talent is at a premium right now, so everybody, every organization that I speak to right now, they’re all focused on cutting cost, not jobs.” - Ritu Jyoti, Global AI Research Lead, IDC

Myth: AI Is Only for Data People

At Dataiku, we firmly believe that AI is for every person in the enterprise (no matter their job title), every use case, and every organization. We’ve been talking about the idea of true collaboration between data and non-data experts for more than 10 years. Democratization of data and, now, Generative AI won’t happen if all the tools and technologies remain in the hands of the few.

The good news is that interdisciplinary teams (i.e., business people working with data people) are becoming the status quo for the overwhelming majority of companies, and just in time for the next wave of AI! Our June 2023 survey with Databricks revealed that a staggering 77% of organizations say their advanced analytics development teams are interdisciplinary, reinforcing that AI is indeed a team sport.

By embracing the different strengths and skill sets of various contributors and enabling them to consolidate their work in a governed and organized way, the right tools can ease the friction of cross-functional work and allow teams to develop AI projects both faster and more effectively. The Dataiku platform is unique because it makes this collaboration easy in practice across both horizontal workstreams (people working with others who have roughly the same skills, toolsets, training, and day-to-day responsibilities) and vertical workstreams (people working across different teams). It does so through a complete suite of features that enables communication and lets both technical and non-technical experts work with data their way.

Putting It All Together

As we delve into the world of Generative AI, it’s critical to dispel misconceptions that can hinder our progress. To summarize, when it comes to Generative AI, bigger models don’t always equal better performance, Generative AI is more than just ChatGPT, and AI is more than just Generative AI. Beyond that, the rise of Generative AI doesn’t signal a job apocalypse; it augments human capabilities and brings forth new opportunities. AI is not exclusive to data experts, but rather a team sport that demands interdisciplinary collaboration. While the future might be AI-powered, we hold the reins. By dispelling myths and fostering a Responsible AI ecosystem, we can unlock the many possibilities that Generative AI offers.