For the third year in a row, Dataiku was invited to take the stage in the Data Science and Machine Learning Bake-Off at the Gartner® Data & Analytics Summits in Orlando and London. The Bake-Offs are fast-paced, informative sessions that let you see three vendors side by side using scripted demos and a common dataset in a controlled setting. This Bake-Off focused on data science and machine learning vendors with significant momentum in the market. Talk about pressure!

Read on to learn what this year’s challenge entailed (no gingham altar required), the business case Dataiku pursued with our data project, and how you can participate in the Bake-Off fun yourself.

Behind the Scenes

On a personal note: As someone who represents Dataiku on stage in the Bake-Offs, I can attest that the honor of the assignment is a double-edged sword, and not one for the faint of heart.

We think success in the Bake-Off requires a delicate balance between technical and product knowledge, demo discipline and preparedness, and narration style and creativity … all scaffolded by nerves of steel! With that in mind, it’s no surprise I’ve truly enjoyed meeting my fellow “bakers in the tent” each year, finding kindred spirits in the talented performers who share my peculiar affinity for this particular session.

Scenario: Investigate the Economic and Human Impact of Flooding

The scenario cast us as government and business leaders working to understand the likelihood of more frequent and anomalous flooding, and the devastating human and economic impact that results from unpredictable weather conditions. Are there mitigation strategies that could be implemented successfully, offering policy guidance and reasons for optimism in the face of increasingly frequent extreme weather events?

This year, Gartner prompted vendors to use coastal and river flooding data from the Organisation for Economic Co-operation and Development (OECD) to help governments and organizations understand the human and economic impacts caused by extreme flooding events. Dataiku, alongside two other data science and ML platforms, delivered five-minute demos for four different stages of the analytics lifecycle:

- Data access, preparation, and feature selection

- Model building, training, and validation

- Model delivery and model management

- Business results and recommendations

Dataiku’s story explored flood risk by geography and flooding’s economic impact on commercial insurance. Our business scenario was that we were a shipping company looking to place a new warehouse in Florida. The goals of our data project were twofold. First, we wanted to better understand different areas’ exposure to floods in order to inform the specific location we would choose for the warehouse and mitigate risk. Second, for each potential location, we wanted to predict the potential insurance claim payout we might receive in the event of a flood in order to define the right insurance strategy for both our warehouse and its contents.

During the Bake-Off, we walked attendees step by step through how the team achieved these goals using a combination of descriptive analytics and ML modeling.

By the last segment, we were able to present specific, data-driven recommendations about which candidate location for our warehouse posed the lowest flood risks, as well as the dollar amounts of insurance coverage to obtain for both the building and its contents.

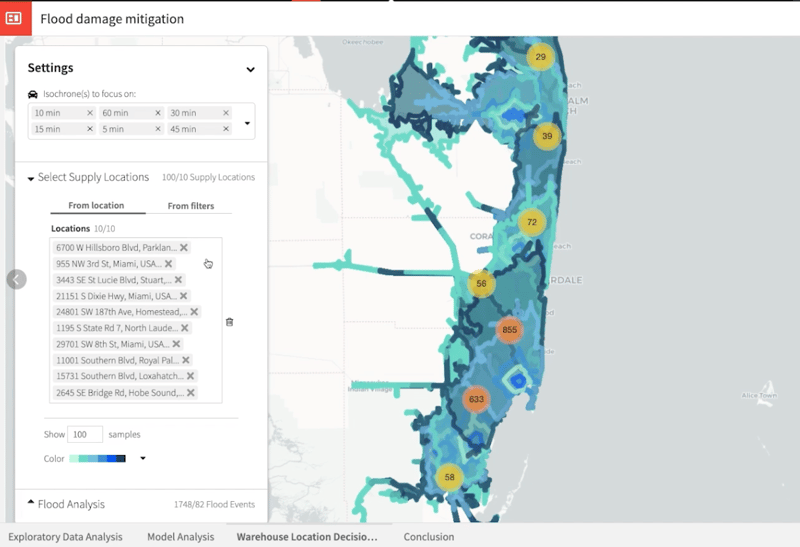

Interactive web application for exploring flood events around warehouse candidate locations


An Extra Slice

From start to finish, we demonstrated how Dataiku makes AI accessible to everyone, and how people with different roles and technical skill sets were all able to contribute their expertise to our shared project goals. Because Dataiku’s platform is infrastructure and technology-agnostic, we were able to connect to many data systems for storage and computation, using a flexible combination of visual tools and code as needed.

But in the end, what I feel made Dataiku really stand out from the pack was its ability to deliver everything from start to finish in a single native product, rather than requiring customers to purchase multiple product or module licenses to assemble similar end-to-end capabilities.

Because Gartner invites Bake-Off contestants based on market demand and interest, we take it as a very good sign that they have invited us to participate in every Data Science and ML Bake-Off since our first appearance in 2019. In fact, we're proud to be the only vendor that has been invited three times. Dataiku believes that this is validation of our commitment to delivering a comprehensive AI platform that empowers both business experts and technical experts and accelerates the process of converting raw data into business value.

The DSML Bake-Off occurred in Orlando, FL on March 22, 2023. Dataiku will also participate in the Bake-Off event at the London Gartner Data & Analytics Summit in May. We hope you’ll join us there!

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.