Learning a new language can seem like an insurmountable challenge - however, approaching the problem with data science and using a few smart techniques can ease the learning curve and provide a starting point from which to base your studies.

I started working at Dataiku in January 2018, and seeing as I was surrounded by many French colleagues and traveling more and more to Paris for internal meetings and events, I became fascinated with the culture and got curious and motivated to learn the language.

I signed up for a French class, which was useful, as I managed to understand the structure of the language and the syntax. But at the same time, I found myself slightly demotivated, mainly because I realized that the pace would only allow me to engage in very basic conversations (needless to say, picking up on a subject or a joke when my colleagues were talking in the office or at one of our gatherings was not happening anytime soon)!

My first thought was to google some tips and tricks on how to pick up a new language quickly. Most of them suggested listening to more French songs and watching more French movies, but I already knew that!

Then I came across this article, and the topic was very interesting. The idea is that after learning the 1,000 most frequent words used in day-to-day conversations, I would not only gain a workable vocabulary but also have a stronger sense of the language’s structure, which was encouraging.

But how would I get the most frequent (ideally top 1,000) words used in day-to-day conversations in French? Through books? Or newspapers? With more research, I came across another article written by a fellow data scientist who was also interested in learning a new language (Swedish), and that gave me an idea.

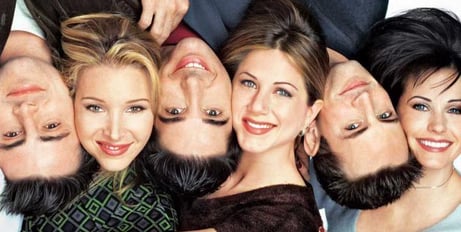

Meet my French tutors: Rachel, Ross, Monica, Chandler, Joey, and Phoebe

Friends, one of the most popular TV shows, with its 10 seasons, was the best way I could think of to get a script of typical day-to-day conversations (that is, vocabulary used repeatedly) between friends, coworkers, neighbors, and more. So I decided to use this as my dataset from which to extract the 1,000 most commonly used words in French.

I managed to procure all the French subtitle files for each of the 10 seasons here, which I downloaded and used as the basis for the project. From there, I roughly followed the Seven Fundamental Steps to Completing a Data Project and was able to:

Clean the Data

I started with a very messy dataset, with rows similar to this:

<font color=#ffff00 size=14>www.tvsubtitles.net</font>

I had other rows full of special characters and symbols that I didn’t need (such as: ! ? . , … <j> </b/>), as well as rows that contained only the number identifying the subtitle line or its timing on the screen.

I removed all this as well as empty rows, empty columns, columns of timing, episode number, and season number, which were not relevant to this particular data project.

After all this, I ended up with one very clean column with each row representing a line of a subtitle (i.e., one sentence or two of text).
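The exact cleaning steps used in the project are not shown here. As a rough illustration only, the same idea can be sketched in plain Python, assuming the subtitle files follow the common SRT layout (a counter row, a timing row, then the text). The function name `clean_subtitle_lines` and the regular expressions are hypothetical choices for this sketch, not the original recipe.

```python
import re

# Assumed patterns for an SRT-style file; adjust them to the real data.
TAG_RE = re.compile(r"<[^>]+>")                                # markup such as <font ...> or </i>
TIMING_RE = re.compile(r"^\d{2}:\d{2}:\d{2}[,.]\d{3}\s*-->")   # timing row
INDEX_RE = re.compile(r"^\d+$")                                # subtitle counter row
URL_RE = re.compile(r"(https?://|www\.)\S+", re.IGNORECASE)    # site credits
PUNCT_RE = re.compile(r"[^\w\s'’-]")                           # anything but letters, digits, apostrophes, hyphens
LOOSE_DASH_RE = re.compile(r"(?<!\w)-|-(?!\w)")                # dialogue dashes, not the one in "peut-être"


def clean_subtitle_lines(raw_lines):
    """Return lowercase text rows with markup, counters, and timings removed."""
    cleaned = []
    for line in raw_lines:
        line = line.strip()
        # Drop empty rows, counter rows, and timing rows entirely.
        # Note: a spoken line made only of digits would be dropped too.
        if not line or INDEX_RE.match(line) or TIMING_RE.match(line):
            continue
        line = TAG_RE.sub(" ", line)
        line = URL_RE.sub(" ", line)
        line = PUNCT_RE.sub(" ", line)
        line = LOOSE_DASH_RE.sub(" ", line)
        line = re.sub(r"\s+", " ", line).strip().lower()
        if line:
            cleaned.append(line)
    return cleaned
```

Apostrophes and in-word hyphens are kept on purpose, since French forms such as "j'ai" or "peut-être" would otherwise be split into fragments.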

Manipulate the Data

The next step was to work on those rows to decide what to keep and what to remove:

- I didn’t remove stop words as people often do in natural language processing (NLP) because for this case, I needed them - remember, my ultimate goal was to learn the language, and stop words are an essential part of the language.

- I decided to extract Ngrams of up to three words, as I wanted to keep sentences or phrases like “good morning,” “I don’t know,” or even “what’s up” intact.

This is how I ended up with one column for my dataset, with each row including either an individual word or a combination of two or three words used together.

Almost done - all I needed to do after that was sort these rows by frequency to get the top 1,000 words used on a daily basis (which, because of the Ngrams step, could also be expressions, that is, more than one word commonly used together).
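The n-gram extraction and frequency ranking described above can be sketched in a few lines of Python. This is a minimal illustration, not the recipe used in the project: the function names are hypothetical, tokens are split on whitespace, and n-grams are assumed not to cross from one subtitle row into the next.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return every run of n consecutive tokens, joined by spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def top_expressions(lines, max_n=3, top_k=1000):
    """Count all 1- to max_n-word expressions and return the top_k most frequent."""
    counts = Counter()
    for line in lines:
        tokens = line.split()  # stop words are kept on purpose
        for n in range(1, max_n + 1):
            counts.update(ngrams(tokens, n))
    # most_common returns (expression, count) pairs, highest count first
    return counts.most_common(top_k)


sample = ["je ne sais pas", "je ne veux pas", "je sais"]
for expression, count in top_expressions(sample, top_k=3):
    print(count, expression)
```

Because single words and longer expressions share one counter, a frequent phrase competes for a place in the list on the same footing as a frequent word.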

But I wanted MORE!!!

Enrich the Dataset

Remember, the goal of the project was to memorize and understand the top 1,000 words or expressions. For that, the list of the top 1,000 was not enough - I also needed to know their meanings. I could have manually used Google Translate on each row to get the translation. OR I could get all the translations for all the rows at once and have no excuse not to start doing my homework!

Since I used Dataiku DSS for the project, which allows integrating with other data sources through external APIs, I was able to easily use the Google Translate API by:

- Generating my API key

- Adding it to my Python code recipe

- Getting a translation for each row using this simple algorithm
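The original code for this step is not reproduced here. The sketch below shows one way the three steps could look in a Python recipe, assuming the Google Cloud Translation Basic (v2) REST endpoint and an API key; the function names `google_translate` and `translate_rows` are hypothetical, and the real project may have used a client library instead.

```python
import json
import urllib.parse
import urllib.request

# Assumption: Cloud Translation Basic (v2) REST endpoint, authenticated with an API key.
TRANSLATE_URL = "https://translation.googleapis.com/language/translate/v2"


def google_translate(text, api_key, source="fr", target="en"):
    """Translate one string; needs network access and a valid API key."""
    url = f"{TRANSLATE_URL}?{urllib.parse.urlencode({'key': api_key})}"
    body = json.dumps(
        {"q": text, "source": source, "target": target, "format": "text"}
    ).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    return payload["data"]["translations"][0]["translatedText"]


def translate_rows(rows, translate):
    """Pair each row with its translation, calling `translate` once per distinct row."""
    cache = {}
    pairs = []
    for text in rows:
        if text not in cache:
            cache[text] = translate(text)
        pairs.append((text, cache[text]))
    return pairs


# Usage (not run here, since it calls the live API):
#   from functools import partial
#   pairs = translate_rows(top_rows, partial(google_translate, api_key="YOUR_API_KEY"))
```

Passing the translation function in as an argument keeps the row loop independent of the API, so it can be tried out with a small lookup table before spending any quota.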

Voilà! Now I have my top 1,000 French words and phrases as well as their English translations, and I was able to do this all in just a few quick steps.