Periods of economic flux or uncertainty, not to mention growing geopolitical tensions, are inevitably a time for tight P&L scrutiny and cost-cutting initiatives as well as strict review of all investments. But should it be the time to deprioritize AI investments? Maybe not. This piece explores why savvy businesses will double down on their commitment to AI projects as an actionable path to making the organization run faster and more efficiently.

Use Cases & Examples

It would be impossible to list out all the potential use cases organizations can take on to boost cost reduction efforts and maximize efficiency. However, in general, they fit into one of two categories:

- Optimization: In other words, doing more with less, or data and analytics projects whose express goal is to reduce costs. One concrete example is a machine learning model that drives more efficient hospital staffing or one that reduces the tangible cost of maintenance (otherwise known as predictive maintenance).

- Acceleration: This category involves furthering the business’s ability to execute on critical business functions, indirectly saving money. One concrete example is advanced churn prediction (also known as uplift modeling). Because it is six to seven times more expensive to acquire a new customer than to retain an old one, advanced techniques not only identify potential churners but also accelerate business by predicting who is most likely to respond positively to marketing efforts.

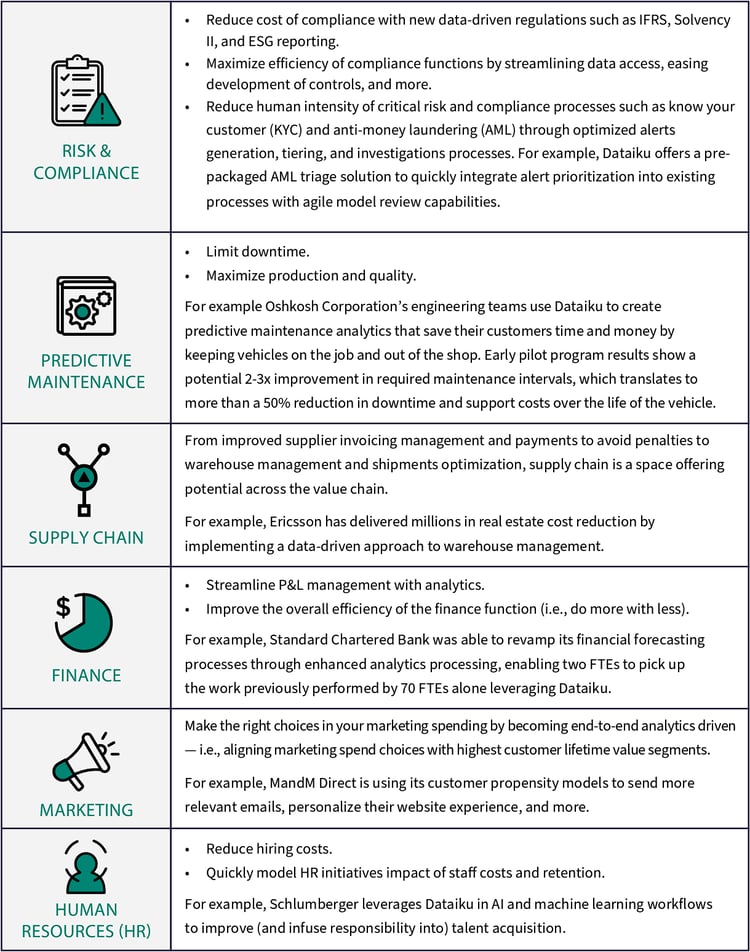

Again, while an exhaustive list of optimization and acceleration use cases is outside the scope of this article, there are some key, table-stakes cost-saving use cases to note for the following lines of business or business units:

How to Accelerate

It would be naïve to ignore the fact that AI initiatives represent a cost in and of themselves. Even if you know what use cases you need to tackle to cut costs, you won’t be able to benefit from them if you don’t have the right systems in place to move quickly and efficiently on AI initiatives.

The reality is that the AI project lifecycle is rarely linear, and there are different people involved at every stage, which means lots of potential rework and inefficiencies along the way. Here are four main areas where introducing efficiency (for example, through a centralized AI platform like Dataiku) can help accelerate AI efforts.

1. Make Sure You Can Push to Production Quickly

Packaging, release, and operationalization of data, analytics, and AI projects is complex, and without a consistent way to do it, it can be extremely time-consuming. This is a massive cost not only in person-hours, but also in lost revenue for every day the machine learning model is not in production and delivering cost savings to the business.

Dataiku has robust support for deployment of models into production (including one-click deployment on the cloud with Kubernetes), easing the operationalization of AI projects.

2. Don’t Counteract Cost Savings With High AI Maintenance Costs

Putting a model into production is an important milestone, but it’s far from the end of the journey. Once a model is developed and deployed, the challenge becomes regularly monitoring and refreshing it to ensure it continues to perform well as conditions or data change (and in a period of economic turbulence, as many businesses learned during the pandemic, conditions and data will change). Depending on the use case, an unmaintained model will, in the best case, become less and less effective; in the worst case, it can become harmful to and costly for the business.

That means paying attention to AI project maintenance, and more importantly, being efficient at it. MLOps has emerged as a way of controlling the cost of maintenance, shifting from a one-off task handled by a different person for each model — usually the original data scientist who worked on the project — into a systematized, centralized task.
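To make the idea of systematized monitoring concrete, here is a minimal sketch of one common drift check, the population stability index (PSI), applied to a single numeric feature. It is an illustration only and not Dataiku's API: the function names, the ten-bin layout, and the 0.2 alert threshold (a widely used rule of thumb) are all assumptions chosen for the example.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    n_bins: int = 10,      # assumption: ten equal-width bins
    eps: float = 1e-6,     # floor to avoid log(0) for empty bins
) -> float:
    """PSI between a training-time sample and a production sample."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if the baseline is constant.
    width = (hi - lo) / n_bins or 1.0

    def bin_shares(values: Sequence[float]) -> list:
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range go to the edge bins.
            idx = min(max(idx, 0), n_bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    base = bin_shares(baseline)
    curr = bin_shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, curr))


def needs_retraining(
    baseline: Sequence[float],
    current: Sequence[float],
    threshold: float = 0.2,  # assumption: rule-of-thumb alert level
) -> bool:
    """Flag the model for review when the feature has drifted."""
    return population_stability_index(baseline, current) > threshold


if __name__ == "__main__":
    training_sample = [i / 100 for i in range(1000)]
    production_sample = [5 + i / 200 for i in range(1000)]
    print(needs_retraining(training_sample, training_sample))
    print(needs_retraining(training_sample, production_sample))
```

Running a check like this on a schedule for every production model, rather than relying on each model's original author to remember, is the kind of shift from one-off task to centralized process described above.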

Dataiku has robust MLOps capabilities and makes it easy not only to deploy, but to monitor and manage AI projects in production.

3. Facilitate More Cost-Saving Use Cases for the Price of One

Leveraging AI for cutting costs requires massively increasing the number of cost-saving use cases being addressed across the organization. This, in turn, requires empowering anyone (not just people on a data team) to leverage the work done on existing AI projects to spin up new ones, potentially uncovering previously untapped cost-savings use cases.

Being able to leverage one project to spur another requires:

- Radical transparency. For example, how can someone from marketing build off of a use case developed in the customer service department if neither knows what AI projects the other is working on, much less can access and leverage those components?

- The right tools — like Dataiku — that make AI and data accessible to anyone across the organization, from data scientist to analyst to business people with only simple spreadsheet capabilities.

The surfacing of these hidden use cases often comes from the work of analysts or business users. It is one of the keys to data democratization and eventually to Everyday AI, where it’s not just data scientists that are bringing value from data, but the business itself.

4. Reevaluate Your Data & Analytics Stack With an Eye for Cost

If your company is like a lot of organizations today, the data and analytics stack was built over a period of years (or maybe even decades) and was probably dictated by existing investments rather than driven by explicit choice in making new investments that are the best fit for the needs of the business. In other words, “We already have tools for x, y, and z, what can we add to complete the stack, and how can we tie it all together?”

Today’s cost-cutting mindset can help examine these choices more closely and can provide the necessary spark to rethink the technology stack, including the challenges or inefficiencies it brings. For example, if there are lots of disparate tools throughout the data-to-insights process, there are likely missed opportunities for automation (read: efficiency) between steps in the life cycle, such as triggering automated actions when the underlying data of a model or AI system in production has fundamentally changed.

Good questions to ask when economic times get tougher include: How can I limit the reliance on and recurring cost of external vendors? Is my data and analytics technology stack hindering the organization's ability to be efficient, and thus, cut costs?

Conclusion: Emerge Ahead

Organizations that double down on their investment in AI during these times of uncertainty will come out ahead. Why? Because they will have trained and upskilled their people to accelerate their work with the support of data and AI. They will have the processes in place to ensure that people are bringing human-in-the-loop value. And they will have the right tools and technology to support their journey. How will you enable your business to emerge ahead?