As we mentioned recently, IT leaders have the capacity to be the heroes of enterprise AI scaling, as long as they can strike the right balance between speed and control. Keeping control over the development of AI models in the enterprise is not an easy task. All analytics and AI projects must be monitored because no one wants a model in use that is flawed, low-performing, or undocumented.

IT leaders legitimately expect to know which models are in use, for what purpose, whether they’re business critical or risky, who approved them, and so on (now more than ever in the era of Generative AI). In the report “Applying AI — Governance and Risk Management,” Gartner outlines several resources related to AI Governance and risk management. We highlight a few of them here and share how Dataiku can help.

1. Organize People and Process to Support AI

According to Gartner, “There are three primary reasons why AI risks are not adequately addressed in most organizations:

- Organizational fragmentation: Ownership of this domain is spread across functions such as legal, compliance, AI development, enterprise architecture, privacy, and security.

- Production-first mentality: Most enterprises focus to get their AI models into production; building trust and risk management into AI lifecycles is an afterthought.

- Enterprises are not convinced it’s needed: Typically, there is no readily available proof of adversarial attacks or benign mistakes causing suboptimal or ineffective AI model performance. Most enterprises are not actively managing and monitoring AI models and data integrity after deployments, so they don’t even know if these issues exist.”*

CIOs and IT leaders are focused on driving down infrastructure costs and navigating questions about self-service analytics, data quality, and ROI, and they now have Generative AI to get their arms around as well. On top of that already full plate, IT teams play a big role in rallying stakeholders across the company to implement AI, not in a silo but comprehensively, with risk management included. They are tasked with equipping the entire organization with both the tools and the skills to adopt and scale analytics and AI, which can be daunting.

Dataiku’s platform for Everyday AI provides a single place to orchestrate data, analytics, and AI, leveraging underlying cloud resources. IT teams can simultaneously manage risk and ensure compliance at scale across the organization with advanced permissions management, SSO and LDAP integration, audit trails, secure API access, and more.

2. Manage AI's Societal and Ethical Enterprise Risks

According to Gartner, “Any organization that invests in AI will face a certain amount of ethics, fairness and bias risk — and eliminating them completely is impossible.”* Further, Gartner states, “IT leaders must anticipate these risks and balance the trade-off between systemwide AI risks and the benefits of implementing AI. Rather than only reactively mitigating risk, deliberate deployments of AI should primarily serve a detailed purpose. All deviations can be monitored in context of missing that original purpose, or slowly deviating from it, such as data drift and model drift, leading to unintended outcomes.”*
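To make the idea of monitoring data drift more concrete, here is a minimal sketch of one common drift measure, the Population Stability Index (PSI), which compares how a feature is distributed at training time with how it is distributed in production. It is an illustration only, not a method prescribed by Gartner or the way Dataiku implements drift monitoring. The function name, the equal-width binning, and the small floor used to avoid taking the log of zero are all assumptions made for this example.

```python
import math


def population_stability_index(baseline, current, n_bins=5):
    """Illustrative PSI between two numeric samples (hypothetical helper).

    Bins are equal-width over the baseline's range; values outside that
    range are counted in the first or last bin.
    """
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if the baseline is constant.
    width = (hi - lo) / n_bins or 1.0

    def bin_shares(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), n_bins - 1)  # clamp to the edge bins
            counts[idx] += 1
        # Assumed floor of 1e-6 so empty bins do not produce log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    base = bin_shares(baseline)
    curr = bin_shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, curr))


# Example: the same feature at training time and after a shift in production.
training_values = list(range(1, 11))
production_values = [v + 5 for v in training_values]
print(population_stability_index(training_values, training_values))
print(population_stability_index(training_values, production_values))
```

A frequently cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift worth investigating, but those thresholds are conventions rather than standards and should be tuned to each use case.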

With the rise of Generative AI, organizations need more than ever to think about building AI systems in a responsible and governed manner, with IT leaders at the helm and data practitioners handling execution. Dataiku’s RAFT (Reliable, Accountable, Fair, and Transparent) framework for Responsible AI highlights the following tenets:

- Reliable and Secure: AI systems are built to ensure consistency and reliability across the entire lifecycle. Data and models are privacy-enhancing.

- Accountable and Governed: Ownership over each aspect of the AI lifecycle is documented and used to support oversight and control mechanisms (which is particularly useful for IT teams).

- Fair and Human-Centric: AI systems are built to minimize bias against individuals or groups and support human determination and choice.

- Transparent and Explainable: The use of AI is disclosed to end users and explanations for the methods, parameters, and data used in AI systems are provided.

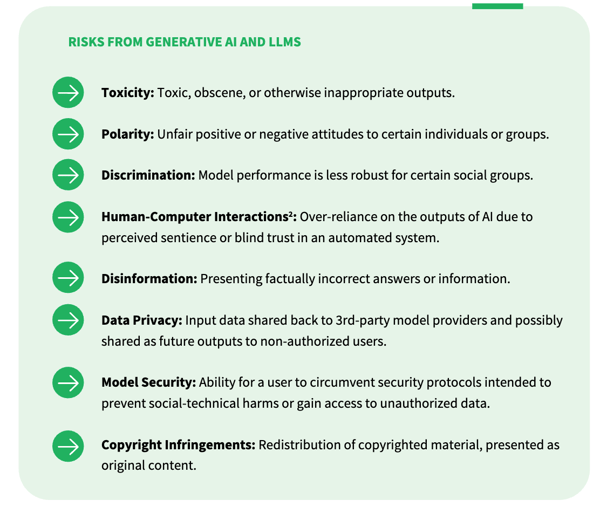

In addition to the socio-technical risks from Generative AI, there are also emerging legal considerations around privacy and copyright infringements. Below, we list a number of risks that may arise in the use of Generative AI in the enterprise. These risks are common across various types of Generative AI technology but will surface in different ways across use cases:

It’s important to note that these harms are not exclusive to language models, but they are heightened by the use of natural language processing techniques to analyze, categorize, or generate text in a variety of business contexts. Understanding and addressing these risks before implementing a large language model or other Generative AI techniques into an AI system is critical to ensure the responsible and governed use of the latest technology.

To go further on this topic, check out the full ebook, “Build Responsible Generative AI Applications.”

3. AI Explainability

According to Gartner, “The lack of model ‘understandability’ among model users, managers, and consumers who are impacted by models’ decisions severely limits an organization’s ability to manage AI risk.”*

It’s critical for organizations to understand how their models make decisions because:

- It gives them the opportunity to further refine and improve their analyses.

- It makes it easier to explain to non-practitioners how the model uses the data to make decisions.

- Explainability can help practitioners avoid negative or unforeseen consequences of their models.

Dataiku helps organizations accomplish all three of these objectives by striking the optimal balance between interpretability and model performance. Dataiku is built for best practice methodology and governance throughout a model’s entire lifecycle. Check out the video below for a seven-minute summary of how teams can implement responsible, explainable AI systems with Dataiku:

Managing AI risk can sound overwhelming, particularly for IT leaders, given the unintended consequences that can surface at any point in the AI lifecycle. At Dataiku, we believe that tooling makes the difference: to mitigate risk while bringing together Responsible AI, MLOps, and AI Governance, a centralized tool for both developing and monitoring AI is necessary.

*Gartner - Applying AI — Governance and Risk Management; Avivah Litan, Bern Elliot, Svetlana Sicular, Shubhangi Vashisth, Gabriele Rigon, 3 May 2023. GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.