According to the 2023 Dataiku-sponsored IDC InfoBrief “Create More Business Value From Your Organizational Data,” “Although [AI] adoption is rapidly expanding, project failure rates remain high. Organizations worldwide must evaluate their vision to address the inhibitors for success, unleash the power of AI, and thrive in the digital era.”

One of the most important takeaways about overcoming analytics and AI project failure is that there's never just one repeat offender: AI projects can fail at various points, across both business and technical teams. Dataiku's interactive microsite visually maps the most common failure points across the AI project lifecycle and shares how data, analytics, and IT leaders can quickly address them with Dataiku.

On the other side of the coin, this article will address some of the most common organizational reasons fueling AI project failure (and tips for navigating them).

The AI Talent Gap (People!)

Two of the top blockers for scaling AI are hiring people with analytics and AI skills and identifying good business cases. Unfortunately, hiring hundreds or thousands of data scientists is not realistic for most organizations, and the people who can address both issues (those with both AI and business skills) are so rare that they're often called unicorns.

To address both issues at once, then, organizations should "build unicorn teams, not hire unicorn people." In practice, this means building teams that combine data experts and domain experts, while evolving the organization's AI operating model over time (which also raises its AI maturity). The approach works: according to the Harvard Business Review, 85% of companies that have successfully scaled AI use interdisciplinary development teams.

💡Tip From IDC: “Consider the role of data scientists along with knowledge workers and industry expertise. Empowering knowledge workers will accelerate time to value.”

Lack of AI Governance and Oversight (Processes!)

What teams can't afford in this macroeconomic climate is for their AI budgets to be reduced or cut entirely. What would lead to this, you might ask? Time wasted building and testing machine learning models that never make it into production, and so never generate real, tangible value for the business (such as money made, money saved, or a new process that isn't possible today).

The good news: There are strategies and best practices analytics and AI teams can implement to safely streamline and scale their AI efforts, such as establishing an AI Governance strategy (inclusive of operational elements like MLOps and value-based elements like Responsible AI).

The bad news: Teams often either don't have these processes in place before deployment (which can lead to many compounding issues) or lack a clear way to move forward with the projects that do generate business value and deprecate the underperforming ones.

AI Governance delivers end-to-end model management at scale, with a focus on risk-adjusted value delivery and efficient AI scaling, all in alignment with regulations. Teams need to distinguish between proofs of concept (POCs), self-service data initiatives, and industrialized data products, as well as the governance needs of each. There must be room for exploration and experimentation, but teams also need to make clear decisions about when a self-service project or POC should receive the funding, testing, and assurance to become an industrialized, operationalized solution.

💡Tip From IDC: “Establish clear policies for data privacy, decision rights, accountability, and transparency. Have proactive and ongoing risk management and governance performed jointly by IT and those in business and compliance.”

Not Taking a Platform Mindset (Technology!)

How can teams pinpoint the right technologies and processes to enable the use of AI at scale?

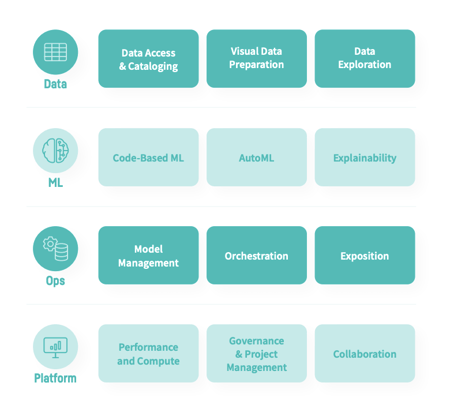

An end-to-end platform (like Dataiku) brings cohesion across the steps of the analytics and AI project lifecycle and provides a consistent look, feel, and approach as teams move through those steps.

When building a modern AI platform strategy, it's important to consider the value of an all-in-one platform that covers everything from data prep to monitoring machine learning models in production. Buying a separate tool for each component, by contrast, can be tremendously challenging, as there are multiple pieces of the puzzle across different areas of the lifecycle (illustrated below).

To achieve long-term cultural transformation through an AI program, it's important to involve IT from the very beginning. IT managers are essential for the effective, smooth rollout of any technology and, from a more philosophical perspective, are critical for instilling a culture of data access balanced with proper governance and control.

💡Tip From IDC: "Instead of implementing distinct solutions to handle small tasks, embrace the platform approach to support consistent experiences and standardization."

Looking Ahead

Scaling analytics and AI efforts takes a significant amount of time and resources, so the last thing you want is for those efforts to fail. At the same time, though, a bit of healthy failure during experimentation is valuable, as long as teams can fail fast and apply what they learn. Teams should focus on upskilling and training (i.e., getting business practitioners increasingly involved), democratize AI tools and technologies, and put the right guardrails in place to ensure responsible AI deployments.