MLOps is one of the newest buzzwords in the data science community. As with most buzzwords, it signals some genuine advances and concerns but also replaces a number of earlier names for, or ways of framing, some of the same issues. In this post, we compare two closely related concepts we have often encountered in the past few years: “MLOps” and “operationalization” (O16N). First, some general definitions:

- O16N covers the wide range of work, structure, and management that an organization needs to put in place to draw value from AI in a sustainable and scalable way (i.e., training a high-performance deep learning model can be hard, but it's a small part of the journey).

- MLOps, like its predecessor DevOps, is a movement that promotes collaboration between stakeholders to put models into production efficiently and safely. That collaboration extends beyond developers and operations people to the wider range of teams involved in Enterprise AI.

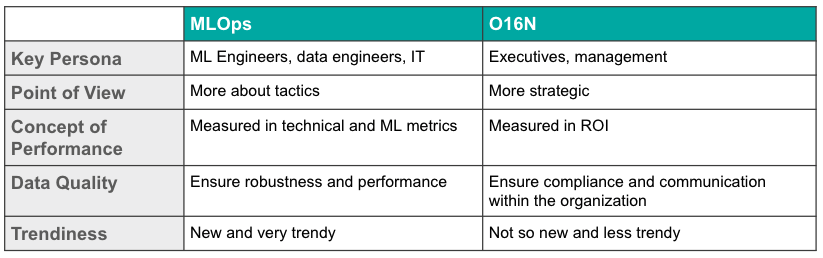

These high-level definitions are hard to distinguish, and for good reason: the two sets of considerations overlap heavily, but their focus is typically quite different. We'll outline some of the key differences below.

Varying Focuses and Profiles Involved

The focus of O16N is on business value and change management, and it emphasizes management's perspective. Key aspects include structuring teams and planning hiring, putting the appropriate communication channels in place, identifying and mitigating risks, measuring ROI, and, ultimately, ensuring that the organization has tooling that supports its strategy and constraints.

On the other hand, MLOps starts with a more technical approach. How can organizations remove obstacles to deploying projects to production and put together the means to operate and update these projects while controlling risk? Data quality and monitoring are major concerns in MLOps discussions.

The difference is mostly a matter of the personas involved. Typically, CEOs and other senior executives have concerns that draw them towards the O16N kind of language, whereas technical staff, technical line management, and people with experience of DevOps practices are drawn towards the somewhat more technical framing of the same common goal: using AI to create value for the organization at scale. O16N naturally has a more strategic and business orientation, while MLOps focuses on tactics and technology. In a large organization, both kinds of discussions will occur organically.

Data quality is at the heart of embedding machine learning into an organization, and it shows how the two framings differ. The O16N type of conversation will typically focus on compliance and personally identifiable information, lineage, fairness, etc. Discussions on the MLOps side will focus more on making sure the collected data supports the best possible machine learning performance, which may include high-quality labeling, drift detection, deployment strategies such as A/B testing, etc.
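To make the MLOps side of that conversation a little more concrete, here is a minimal sketch of one possible drift check: it compares a feature's values at training time with recent production values using the two-sample Kolmogorov-Smirnov statistic. The function names and the 0.2 threshold are illustrative assumptions, not a recommended standard; in practice a team would choose the test and threshold per feature.

```python
from bisect import bisect_right


def ks_statistic(reference, live):
    """Two-sample Kolmogorov-Smirnov statistic.

    Returns the largest gap between the empirical CDFs of the two samples:
    0.0 means the samples are distributed identically, 1.0 means they do
    not overlap at all.
    """
    ref = sorted(reference)
    cur = sorted(live)
    max_gap = 0.0
    # The largest gap between two step functions occurs at an observed value.
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


def has_drifted(reference, live, threshold=0.2):
    """Flag drift when the KS statistic exceeds a threshold.

    The default threshold is an arbitrary value chosen for illustration.
    """
    return ks_statistic(reference, live) > threshold
```

A monitoring job could call `has_drifted(training_values, last_week_values)` for each numeric feature and raise an alert when it returns `True`, which might then trigger a retraining discussion.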

In short, “MLOps” brings a significant evolution in the domain of practical machine learning but does not replace all the discussions that came before it. A common language is valuable, but to address the concerns of different personas, including management and engineers, the focus and vocabulary may vary.