Today I'm going to tell you about a project inspired by a conversation overheard at lunch. Alivia Smith (whom you'll already know if you're an avid reader of our blog) was struggling with the schedule of the Avignon Festival, a French theater festival. So many plays and events happen there, yet there is no real guide or documentation to help her decide what to see. (Note: the 2016 Avignon Off festival brings together over 50 different plays, both French and foreign, for about 300 performances over an 18-day period.)

Since we're a great big loving family at Dataiku and we’re always enthusiastic about playing with data, a couple of us data scientists figured we could use machine learning to build a play recommender for Alivia. It would give her insight into which plays she might like, based on her own tastes and on the theater community's ratings. By the way, if you want to follow along, download our how-to guide for building your own recommendation engine, complete with code samples and tips.

How Did We Build a Theater Recommendation Engine?

We computed several recommendations for Alivia using a method known as collaborative filtering. Essentially, she gave us several plays she had already seen and liked, and from those we deduced a list of other plays she might like, each with a score (i.e., an estimate of how much she would like it). We did this by looking at how other theatergoers had rated the plays she liked, and at which other plays those theatergoers had seen and liked.

Suggesting relevant plays to Alivia involved:

- getting the plays’ data from the web,

- building a collaborative filtering recommender system,

- asking Alivia for a couple of plays she liked, along with how she rated them on a scale of 1 to 10,

- computing the score of the plays that she might like.

Collecting the Data

First of all, we needed to get all the plays announced for the festival. So we scraped the web using BeautifulSoup and urllib, collecting all the data we could about those plays (title, genre, actors, stage director, ratings, etc.).

We then gathered data about all the users who rated those plays, and extended our search to every play those users had ever rated, in order to get a steadier, more consistent recommender system (the more data, the better).
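The two-step expansion above boils down to a simple set operation. Here is a minimal Python sketch, assuming all scraped ratings already sit in memory as hypothetical `(user, play, rating)` triples; in practice each user's full rating history would have to be scraped separately, and the function name `expand_ratings` is illustrative only.

```python
# Hypothetical (user, play, rating) triples standing in for the scraped data.
ratings = [
    ("u1", "Le Cid", 8), ("u1", "Hamlet", 6),
    ("u2", "Le Cid", 9), ("u2", "Bérénice", 7),
    ("u3", "Tartuffe", 5), ("u3", "Hamlet", 4),
]


def expand_ratings(ratings, festival_plays):
    """Keep every rating given by a user who rated at least one festival play."""
    # Step 1: users who rated at least one play scheduled for the festival.
    raters = {user for user, play, _ in ratings if play in festival_plays}
    # Step 2: every rating those users ever gave, festival play or not.
    return [r for r in ratings if r[0] in raters]


expanded = expand_ratings(ratings, {"Le Cid"})
```

With only "Le Cid" on the festival list, the expanded set still brings in "Hamlet" and "Bérénice" through users u1 and u2, which is exactly the extra signal the wider search radius is meant to capture.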

Once everything was cleaned, we loaded all our datasets into our Vertica server and moved on to building the recommender.

A few figures about our data:

- 700 plays scheduled for the festival

- 70,000 ratings on those plays

- 1,200,000+ ratings gathered in total

Collaborative Filtering Based Recommender System

What's That?

A recommender is a system, often an algorithm, whose goal is to predict a user's preferences in a given environment (just like Netflix suggesting TV shows based on those you said you liked). Collaborative filtering is one technique for building a recommender: it computes the similarity between two items using information from the community (i.e., the ratings users gave them). This is similar to getting recommendations from a friend who has similar movie tastes.

A different method, known as content-based filtering, considers two items similar if they share common attributes (in our case, the same actors, for instance). This is like going to a movie because it stars the same actors as the last movie I saw. And I'm not going to a movie starring Nicolas Cage just because I liked Sailor & Lula!

We decided to go with collaborative filtering because it tends to give better recommendations (after all, who better to recommend movies than friends with similar tastes?).

There are two main types of collaborative filtering: item-based and user-based. The item-based method starts by computing the similarity of every possible pair of items (typically, if several users gave two items similar ratings, their similarity will be high) and then infers the user's taste by matching their ratings against this similarity matrix. The user-based method, on the other hand, looks for users who share the same rating patterns as the active user, and then uses those users' ratings to compute a prediction for the active user.

We used an item-based recommender.

Now that you have the basics, let's see the math:

Computing the similarities in an item-based paradigm

We computed the similarities over all pairs of items using the cosine of their rating vectors.

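The formula we used is:

$$\mathrm{sim}(i, j) = \cos(\vec{r}_i, \vec{r}_j) = \frac{\vec{r}_i \cdot \vec{r}_j}{\lVert \vec{r}_i \rVert \, \lVert \vec{r}_j \rVert} = \frac{\sum_{u} r_{u,i}\, r_{u,j}}{\sqrt{\sum_{u} r_{u,i}^{2}}\,\sqrt{\sum_{u} r_{u,j}^{2}}}$$

This expresses the idea that the similarity between 2 items $i$ and $j$ is the cosine of the angle between their rating vectors $\vec{r}_i$ and $\vec{r}_j$, where $r_{u,i}$ is the mark user $u$ gave item $i$ (taken as 0 when $u$ did not rate $i$).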

Let's work through an example:

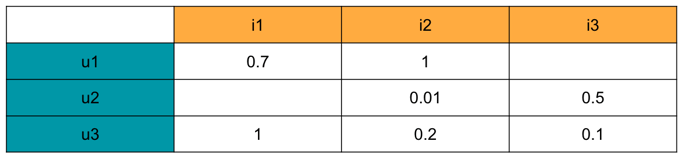

Here, we have 3 items.
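To make the cosine computation concrete, here is a minimal Python sketch on 3 items. The marks are made up (between 0 and 1, like in the example), not taken from the figure, and a missing rating is treated as 0, which is an assumption of this sketch.

```python
import math

# Hypothetical marks on a 0-1 scale: user -> {item: mark}.
ratings = {
    "user1": {"A": 1.0, "B": 0.8, "C": 0.1},
    "user2": {"A": 0.9, "B": 0.7},
    "user3": {"B": 0.2, "C": 0.9},
}


def item_vector(ratings, item):
    """Rating vector of an item over all users (0 when a user did not rate it)."""
    return [marks.get(item, 0.0) for marks in ratings.values()]


def cosine(x, y):
    """Cosine of the angle between two rating vectors (0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return dot / (norm_x * norm_y) if norm_x and norm_y else 0.0


items = ["A", "B", "C"]
for pos, first in enumerate(items):
    for second in items[pos + 1:]:
        sim = cosine(item_vector(ratings, first), item_vector(ratings, second))
        print(f"sim({first}, {second}) = {sim:.3f}")
```

With these marks, A and B come out as the most similar pair (the same users gave both high marks), while A and C, which share almost no enthusiastic raters, get a similarity close to 0.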

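You will notice that the marks in the example are between 0 and 1, whereas in our data they're between 0 and 10. Whatever the scale, an important part of the similarity computation is normalizing the data. Normalization accounts for the fact that, for some people, an 8/10 is a very, very good mark, while others are likely to give a 10 to any play they like. We chose basic mean-centering for this part, subtracting each user's average mark from each of their ratings:

$$\tilde{r}_{u,i} = r_{u,i} - \bar{r}_u$$

where $r_{u,i}$ is the mark user $u$ gave play $i$ and $\bar{r}_u$ is the average of all the marks given by user $u$.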
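Here is a minimal Python sketch of per-user mean-centering, with two hypothetical users, a "generous" one and a "strict" one, who rank the same plays in the same order but on different parts of the scale. The data layout (`user -> {play: mark}`) is an assumption for illustration.

```python
def mean_center(ratings):
    """Subtract each user's average mark from all of that user's marks."""
    centered = {}
    for user, marks in ratings.items():
        # Every user in the dict is assumed to have at least one mark.
        mean = sum(marks.values()) / len(marks)
        centered[user] = {play: mark - mean for play, mark in marks.items()}
    return centered


ratings = {
    "generous": {"Le Cid": 10, "Bérénice": 9, "Hamlet": 8},
    "strict": {"Le Cid": 8, "Bérénice": 7, "Hamlet": 6},
}
centered = mean_center(ratings)
```

After centering, both users end up with identical marks (+1, 0, -1), so the strict user's 8/10 now counts as much as the generous user's 10/10.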

However, we felt that a similarity based on marks alone was not enough. So we also implemented a similarity that considers two plays similar only if they have a significant number of users in common. Not only does it give one more clue about the relevance of a prediction, it also measures how much of a must-see a play is, independently of how it was rated. We can easily imagine plays that you will find worth seeing even if you end up disliking them: maybe you were disappointed by the latest Star Wars, but of course you had to see it first. This similarity is given by the Jaccard index:

$$J(i, j) = \frac{|U_i \cap U_j|}{|U_i \cup U_j|}$$

where $U_i$ is the set of users who rated play $i$.
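The Jaccard index only looks at who rated each play, not at the marks. A minimal Python sketch, with hypothetical sets of raters per play:

```python
def jaccard(raters_i, raters_j):
    """Jaccard index: shared raters divided by all distinct raters of either play."""
    union = raters_i | raters_j
    return len(raters_i & raters_j) / len(union) if union else 0.0


# Hypothetical sets of users who rated each play.
raters = {
    "Le Cid": {"u1", "u2", "u3", "u4"},
    "Bérénice": {"u2", "u3", "u4", "u5"},
    "Hamlet": {"u5", "u6"},
}
print(jaccard(raters["Le Cid"], raters["Bérénice"]))
```

"Le Cid" and "Bérénice" share 3 of their 5 distinct raters, giving 0.6, whereas "Le Cid" and "Hamlet" share none and score 0, however those users rated them.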

Yep, we do care about our marketers!

Scoring the Plays

The score of an item is an estimate of how much the user will like the recommendation we made.
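For readers who want something to experiment with, here is a sketch of the common textbook form of item-based scoring: the user's mean mark plus a similarity-weighted average of her mean-centered marks on the plays she already rated. This is an assumption for illustration, not necessarily the exact formula we used, and `predict_score` and the similarity values are hypothetical.

```python
def predict_score(user_ratings, target, similarity):
    """Estimate a user's mark for `target` from the plays she already rated.

    user_ratings: {play: mark} given by the user (e.g. Alivia's 5 plays).
    similarity:   function (play_a, play_b) -> similarity score.
    """
    mean = sum(user_ratings.values()) / len(user_ratings)
    weighted_sum = 0.0
    total_weight = 0.0
    for play, mark in user_ratings.items():
        sim = similarity(target, play)
        weighted_sum += sim * (mark - mean)  # mean-centered mark, weighted
        total_weight += abs(sim)
    # With no similar plays at all, fall back to the user's average mark.
    return mean + weighted_sum / total_weight if total_weight else mean


# Hypothetical similarities between a candidate play "X" and two rated plays.
sims = {("X", "A"): 0.9, ("X", "B"): 0.1}
score = predict_score({"A": 9, "B": 5}, "X", lambda a, b: sims[(a, b)])
```

Because "X" is far more similar to "A" (marked 9) than to "B" (marked 5), its predicted score lands well above the user's average of 7.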

Our play recommendations

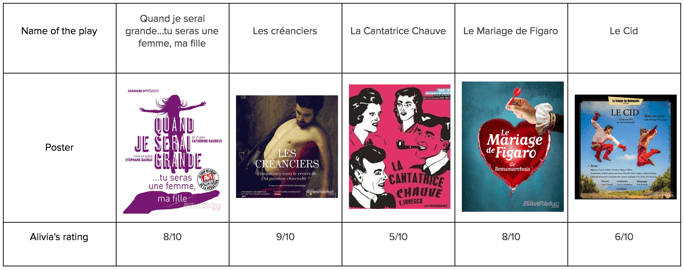

In our case, we wanted to compute personalized play recommendations for Alivia, who gave us 5 plays she had rated.

We then picked the plays that satisfied our selection criterion.

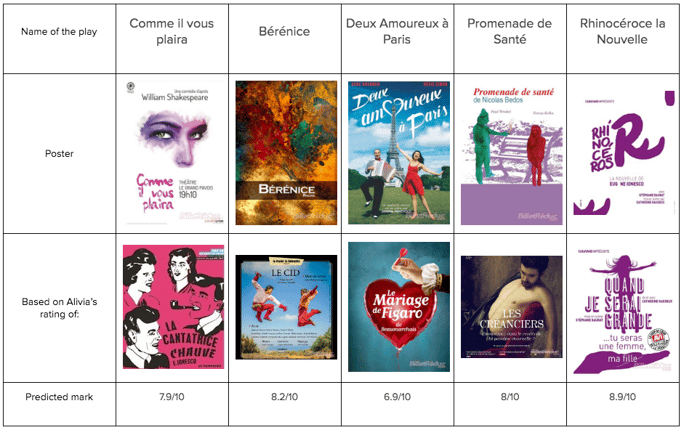

In the end, we advised her to go see the following:

Note that I used all 5 input plays to score the candidate plays. Then, for each recommended play, I selected the input play with the highest similarity to it and put the two in the same column.
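The column layout described above amounts to an argmax over the input plays. A minimal Python sketch, where the play labels (`rec_a`, `input_1`, ...) and similarity values are hypothetical placeholders and `pair_with_inputs` is an illustrative name:

```python
def pair_with_inputs(recommendations, input_plays, similarity):
    """Map each recommended play to the input play it is most similar to."""
    return {
        rec: max(input_plays, key=lambda play: similarity(rec, play))
        for rec in recommendations
    }


# Hypothetical similarities between recommended plays and input plays.
sims = {
    ("rec_a", "input_1"): 0.8, ("rec_a", "input_2"): 0.3,
    ("rec_b", "input_1"): 0.2, ("rec_b", "input_2"): 0.7,
}
columns = pair_with_inputs(["rec_a", "rec_b"], ["input_1", "input_2"],
                           lambda rec, play: sims[(rec, play)])
```

Each recommendation then sits in the column of the input play that contributed most to it, which makes the results easy to sanity-check by eye.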

Now, if you know a bit about French theater, you can tell the recommendations are spot on (yes, I'm proud):

- "Deux amoureux à Paris" and "Le Mariage de Figaro" are both romantic comedies,

- "La Cantatrice Chauve" and "Le Rhinocéros" were both written by the same author (Eugène Ionesco), even though the recommender is not content-based,

- "Le Cid" and "Bérénice" are both classics of French theater.
