CIOs and IT leaders are constantly seeking ways to leverage cutting-edge technologies to drive innovation and efficiency in their organizations. The rapid advancement of large language models (LLMs) presents an unprecedented opportunity to transform how we build and deploy AI-powered applications across the enterprise.

In the technical guide we’re producing in partnership with O'Reilly Media, "The LLM Mesh: A Practical Guide to Using Generative AI in the Enterprise," we explore an architecture paradigm designed to accelerate AI adoption while maintaining control, security, and scalability. We announced chapter one over the summer and are excited to share the key takeaways from chapter two, the next early-release chapter, which is now available.

The Challenge: Scaling LLM-Powered Applications

The potential of LLMs in the enterprise is vast, but realizing this potential requires more than just access to powerful models. As our research suggests, a large corporation might benefit from hundreds of custom LLM-powered applications across various departments and functions. However, building and maintaining such a large number of applications poses significant challenges:

- Complexity: Each application requires integration with multiple components, from LLM services to data retrieval systems.

- Consistency: Ensuring uniformity in how these applications are built and interact with enterprise systems is crucial.

- Governance: Maintaining oversight of data usage, model access, and compliance across numerous applications is daunting.

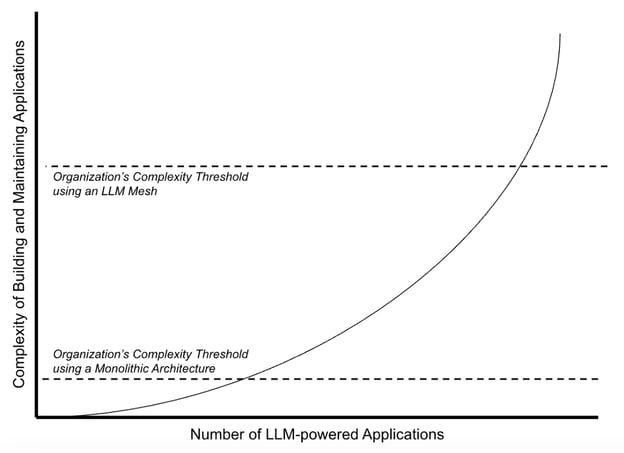

- Scalability: Traditional monolithic approaches to application development quickly hit a "complexity threshold," limiting an organization's ability to deploy more AI-powered solutions.

Enter the LLM Mesh: A New Paradigm for Enterprise AI

As the book states, “Chapter 1 covered the LLMs and the various services that host and serve them. While those models and services are at the heart of an LLM-powered application, more is needed, especially if the developer hopes to build a custom application that will stand apart from the competition and deliver better and more valuable performance. This requires integrating the LLMs with various objects unique to the organization.”

The LLM Mesh architecture addresses these challenges by providing a structured, modular approach to building LLM-powered applications. Key components of this architecture include:

- Abstraction Layer: Standardizes interactions with various AI services and tools, simplifying integration and enabling easy swapping of components.

- Federated Services: Centralized control and analysis capabilities ensure governance and optimize resource utilization.

- Object Catalog: A central repository for discovering and documenting all LLM-related components, promoting reuse and standardization.
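To make these three components more concrete, here is a minimal Python sketch of how they might fit together. It is an illustration under stated assumptions, not the book's design or any product's API: the names `LLMService`, `EchoService`, `CatalogEntry`, and `Mesh` are all hypothetical, and the "model" is a stand-in that simply echoes its prompt.

```python
from dataclasses import dataclass, field
from typing import Protocol


class LLMService(Protocol):
    """Hypothetical abstraction layer: one interface for every model backend."""

    def complete(self, prompt: str) -> str: ...


class EchoService:
    """Stand-in backend for this sketch; a real one would call a hosted model."""

    def __init__(self, label: str) -> None:
        self.label = label

    def complete(self, prompt: str) -> str:
        return f"[{self.label}] {prompt}"


@dataclass
class CatalogEntry:
    """One documented object in the catalog (hypothetical fields)."""

    name: str
    kind: str  # e.g. "llm_service", "prompt", "tool"
    description: str
    obj: object


@dataclass
class Mesh:
    """Toy mesh: an object catalog plus a central audit log for all calls."""

    catalog: dict[str, CatalogEntry] = field(default_factory=dict)
    audit_log: list[tuple[str, str]] = field(default_factory=list)

    def register(self, entry: CatalogEntry) -> None:
        # Object catalog: every component is registered under a unique name.
        if entry.name in self.catalog:
            raise ValueError(f"duplicate catalog entry: {entry.name}")
        self.catalog[entry.name] = entry

    def find(self, kind: str) -> list[str]:
        # Discovery: list the registered objects of a given kind.
        return sorted(n for n, e in self.catalog.items() if e.kind == kind)

    def complete(self, service_name: str, app: str, prompt: str) -> str:
        # Centralized control: every call passes through here and is recorded.
        entry = self.catalog[service_name]
        if entry.kind != "llm_service":
            raise TypeError(f"{service_name} is not an LLM service")
        self.audit_log.append((app, service_name))
        return entry.obj.complete(prompt)


mesh = Mesh()
mesh.register(CatalogEntry("model-a", "llm_service", "General model", EchoService("a")))
mesh.register(CatalogEntry("model-b", "llm_service", "Cheaper model", EchoService("b")))

# An application names a service; swapping models means changing one string.
reply = mesh.complete("model-a", app="hr-assistant", prompt="Summarize this policy.")
```

Because applications go through `Mesh.complete` rather than calling a backend directly, replacing `"model-a"` with `"model-b"` requires no other code changes, and the audit log gives one place to review which application used which service.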

Be sure to check out the full chapter for a detailed overview of the objects of an LLM Mesh, including agents, prompts, tools, LLM services, retrieval services, and more. By adopting an LLM Mesh architecture, organizations can speed up the creation of new AI-powered applications through reusable components and standardized interfaces.

With enhanced governance via centralized control and documentation, IT leaders can continuously improve compliance and security management. Further, better resource allocation and reuse of components will lead to more efficient AI deployments (and optimized costs). Finally, teams can break through the "complexity threshold" described earlier to deploy more AI solutions across the enterprise.

5 Strategic Considerations for IT Leaders

As you consider implementing an LLM Mesh in your organization, keep these five key points in mind:

- Start With a Clear Strategy: Identify high-impact areas where LLM-powered applications can drive significant value.

- Invest in Infrastructure: Ensure your organization has the necessary compute resources and data management capabilities to support an LLM Mesh.

- Focus on Governance: Develop clear policies for data usage, model access, and compliance within the LLM Mesh framework.

- Cultivate AI Expertise: Build a team with the skills to develop and maintain LLM-powered applications using the mesh architecture.

- Promote a Culture of Innovation: Encourage experimentation and rapid prototyping within the structured environment of the LLM Mesh.

The LLM Mesh represents a paradigm shift in how enterprises can harness the power of AI at scale. By providing a structured yet flexible framework for building and deploying LLM-powered applications, it enables organizations to overcome the complexity barriers that are limiting AI adoption.

As CIOs and IT leaders, embracing this new architecture could be the key to unlocking the full potential of AI across your organization, driving innovation, efficiency, and competitive advantage in the years to come.