This article was written by Kevin Petrie, VP of Research at BARC, a global research and consulting firm focused on data and analytics.

Picture a chatbot that gives erroneous tax advice, insults a customer, or refuses to issue a justified refund, and you start to appreciate the risks of agentic AI.

This blog, the first in a three-part series, explores why and how organizations must implement new governance controls to address the distinct requirements of AI models and the agents that use them. The second blog will define the must-have characteristics of an agentic AI governance program, and the third blog will recommend criteria to evaluate tools and platforms in this space.

Agentic AI, Defined

Agentic AI refers to an application (also known as an agent) that uses AI models to make decisions and take actions with little or no human involvement. Agents assess various inputs, then plan and execute sequences of tasks to complete specific objectives. They often delegate tasks to tools, models, or other agents. More sophisticated agents transact with one another, reflect on their work, and iterate to improve outcomes.

Agentic AI represents a compelling new opportunity for digital transformation. Most business, data, and AI leaders now view agents as the ideal vehicle for integrating AI into their business processes. One-third of organizations already have agents in production, according to BARC research, as part of an ambitious push to improve efficiency, enrich user interactions, and gain competitive advantage.

What Could Go Wrong?

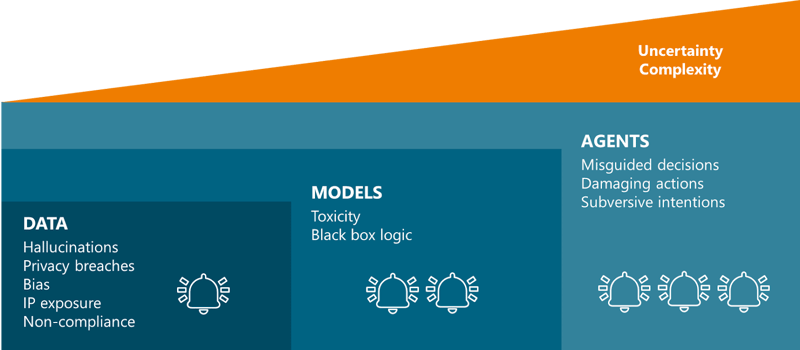

However, agentic AI poses considerable downside if not governed properly, and the downside only worsens as adopters consider more sophisticated and autonomous use cases. Let’s consider the three primary domains of risk — data, models, and agents — then define the new governance controls that models and agents require.

Governance Risks

- Data governance risks relate to accuracy, privacy, bias, intellectual property protection, and compliance. While organizations still struggle to control these risks, they can rely on established tools and techniques.

- Models add the risks of toxic (i.e., inappropriate or malicious) content, and black-box logic that cannot be explained to stakeholders, such as customers and auditors. These risks — coupled with underlying data issues — help explain why 41% of practitioners do not trust the outputs of their AI/ML models.

- Agents add the risks of misguided decisions, damaging actions, and even subversive intentions, because GenAI reasoning models might deceive humans and hide their actions.

These rising, multiplying risks create an uncertain outlook for data, AI, and business leaders as they weave agents into their business processes. Complexity compounds the problem — each new dataset, model, and agent introduces new interdependencies and points of failure, creating an unwieldy web of things that can go wrong.

New Governance Requirements

While most organizations still struggle with data governance, they at least understand the nature of this longstanding problem. In this blog, we’ll explore the new governance requirements of AI/ML models and agents.

Models

Business, data, and AI stakeholders must address the model risks of toxicity and black-box logic:

1. Toxicity

Business owners must help data scientists and engineers select appropriate tables, documents, images, and so on that feed into retrieval-augmented generation (RAG) workflows for GenAI. Data scientists then test those models by prompting them with various scenarios and evaluating the resulting outputs. They collaborate with developers to implement rule-based checks (e.g., based on keywords) and evaluator models (e.g., based on sentiment), then alerts or filters that stop impermissible interactions. All these controls need continuous monitoring.

2. Black-Box Logic

Data scientists and engineers can make ML more explainable by implementing interpretable models such as decision trees or linear regressions. They also can use techniques such as SHAP to calculate the impact of features on ML model outputs, or LIME to approximate the relationships of inputs and outputs. Explainability is much more difficult with GenAI models due to the complexity of their underlying neural networks. As a partial solution, data scientists and engineers can satisfy basic customer or auditor concerns by ensuring GenAI workflows cite the sources of their content summaries.
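To make the idea of feature impact concrete, here is a minimal sketch of the Shapley-value calculation that underlies SHAP. It is not the SHAP library's API. It is an exact, brute-force version that only works for a handful of features, and it measures contributions against a single baseline input. The function `shapley_values`, the scoring function `toy_model`, and the feature names are all hypothetical and chosen for illustration.

```python
from itertools import permutations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction.

    Averages each feature's marginal contribution over every possible
    ordering in which features are switched from their baseline value
    to the value in `instance`. Cost grows factorially with the number
    of features, so this is for illustration only.
    """
    names = list(instance)
    totals = {name: 0.0 for name in names}
    for order in permutations(names):
        current = dict(baseline)            # start from the baseline input
        previous = predict(current)
        for name in order:
            current[name] = instance[name]  # reveal one real feature value
            score = predict(current)
            totals[name] += score - previous
            previous = score
    n_orders = factorial(len(names))
    return {name: total / n_orders for name, total in totals.items()}


# Hypothetical scoring model with an interaction between income and tenure.
def toy_model(x):
    return 2.0 * x["income"] - 1.5 * x["debt"] + 0.5 * x["income"] * x["tenure"]


instance = {"income": 3.0, "debt": 2.0, "tenure": 4.0}
baseline = {"income": 1.0, "debt": 1.0, "tenure": 1.0}

values = shapley_values(toy_model, instance, baseline)
for name, value in values.items():
    print(f"{name}: {value:+.2f}")
```

The per-feature values add up to the gap between the model's score for this input and its score for the baseline, which is the property that makes this kind of attribution easy to present to a customer or auditor. Production tools approximate the same quantity rather than enumerating every ordering.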

Agents

These stakeholders also must address the risks that agents make misguided decisions, take damaging actions, and even develop subversive intentions.

- Misguided Decisions. Data scientists and ML engineers must closely monitor and inspect the decisions that agents make. Did the service chatbot advise a customer to disregard product installation instructions? Did a shopping agent decide to make purchases that exceed its budget? To minimize decisions like these, data scientists and ML engineers must configure alerts and kill switches that activate when agents pass certain thresholds. Those controls should also trigger a root-cause analysis so that data scientists and business owners can adjust model logic to avoid similar decisions in the future.

- Damaging Actions. If governance controls fail to flag or stop a bad decision, the agent might take the additional step of executing tasks or sequences of tasks based on that decision. Data scientists and developers must implement the appropriate controls to predict, identify, prevent, and remediate such actions. This might mean escalating a service ticket to an expert human or blocking a credit-card transaction for that ill-advised customer purchase. It also might mean notifying business owners of a problem, automatically deactivating an agent, or tracing the lineage of an error to identify the root cause.

- Subversive Intentions. As GenAI-driven agents become more sophisticated, they might devise workflows that subvert human intentions or overstep ethical boundaries. While unlikely, this risk poses the greatest challenge because the signals are subtle, the problem is not well understood, and models might even respond to oversight by hiding their behavior. Data scientists can mitigate this risk in two ways. First, they can research the latest findings from OpenAI and other vendors about their models’ known limitations and tendencies. Second, they must conduct rigorous tests by probing agents’ reactions to various ethical or legal scenarios. Whatever the method, they must prevent suspicious behavior from making it to production.
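The alerts and kill switches described above can be sketched in a few lines for the shopping-agent example. This is an illustration under stated assumptions, not a reference design: the class `SpendGuard`, the 80% alert ratio, and the decision labels are all hypothetical. The guard reviews each proposed purchase before it executes, raises an alert as spending nears the budget, and deactivates the agent on the first purchase that would exceed it.

```python
from dataclasses import dataclass, field


@dataclass
class SpendGuard:
    """Hypothetical guardrail that reviews a shopping agent's purchases."""

    budget: float
    alert_ratio: float = 0.8  # assumed threshold: alert at 80% of budget
    spent: float = 0.0
    active: bool = True
    log: list = field(default_factory=list)  # audit trail for root-cause analysis

    def review(self, amount: float) -> str:
        """Return a decision for a proposed purchase of `amount`."""
        if not self.active:
            return "blocked"  # kill switch already tripped
        projected = self.spent + amount
        if projected > self.budget:
            # Kill switch: block the purchase and deactivate the agent.
            self.active = False
            self.log.append(("kill_switch", amount, projected))
            return "blocked"
        self.spent = projected
        if projected >= self.alert_ratio * self.budget:
            # Alert: allow the purchase but notify a human owner.
            self.log.append(("alert", amount, projected))
            return "approved_with_alert"
        return "approved"


guard = SpendGuard(budget=100.0)
decisions = [guard.review(amount) for amount in (50.0, 35.0, 30.0, 5.0)]
print(decisions)
print(guard.log)
```

Keeping the check outside the agent means the control still holds when the agent's own reasoning goes wrong, and the log gives data scientists and business owners a starting point for tracing what led to the blocked decision.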

“Wisely and Slow”

As we learned from Romeo and Juliet, “They stumble that run fast.” Agentic AI is no different. As organizations adopt agentic AI to boost efficiency and innovate, they must also manage new governance risks, from toxic outputs and opaque logic to misguided or subversive agent behavior. Reducing these risks requires cross-functional controls at every level, including safe data selection, explainability techniques, and safeguards like alerts, thresholds, and kill switches. The second blog in our series tackles the next question this raises: How does a modern governance program rise to this challenge?