The last couple of years have been anything but boring in the field of natural language processing, or NLP. With landmark breakthroughs in NLP architecture such as attention mechanisms, a new generation of NLP models, the so-called Transformers, has been born (no, not the Michael Bay kind).

The main advantage of Transformer models is that they are not sequential, meaning that they don't require that the input sequence be processed in order. This innovation allows Transformers to be parallelized and scaled much more easily than previous NLP models. What’s more, Transformer models have so far displayed better performance and speed than other, more traditional models. Due to all these factors, a lot of the NLP research in the past couple of years has been focused on them, and we can expect this to translate into new use cases in organizations as well.
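To make the parallelism point concrete, here is a minimal pure-Python sketch of scaled dot-product self-attention, the core operation of a Transformer encoder, next to a toy recurrent update. It is a simplification under stated assumptions: the query, key, and value projections are taken to be the identity (real models learn them), there is a single attention head, and `rnn_like` is a hypothetical stand-in for a sequential model, not a real RNN cell. The point is structural: each attention output can be computed independently of the others, while each recurrent state has to wait for the previous one.

```python
import math
from typing import List

Vector = List[float]

def softmax(scores: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query: Vector, xs: List[Vector]) -> Vector:
    # Scaled dot-product attention for ONE query position over the whole sequence.
    # Assumption: identity projections, so keys and values are the inputs themselves.
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, x)) / math.sqrt(d) for x in xs]
    weights = softmax(scores)
    return [sum(w * x[j] for w, x in zip(weights, xs)) for j in range(d)]

def self_attention(xs: List[Vector]) -> List[Vector]:
    # Each position is handled by an independent call, so these could run in parallel.
    return [attend(q, xs) for q in xs]

def rnn_like(xs: List[Vector], decay: float = 0.5) -> List[Vector]:
    # Hypothetical toy recurrence (not a real RNN cell): each state needs the
    # previous one, so positions must be processed strictly in order.
    h = [0.0] * len(xs[0])
    states = []
    for x in xs:
        h = [decay * hi + xi for hi, xi in zip(h, x)]
        states.append(h)
    return states


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 2-d "embeddings"
print(self_attention(tokens))
print(rnn_like(tokens))  # [[1.0, 0.0], [0.5, 1.0], [1.25, 1.5]]
```

In a real Transformer, the per-position loop is expressed as matrix multiplications over the whole sequence, which is what lets GPUs and similar hardware process every position at once.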

For a more detailed and technical breakdown of Transformers and how they work, check out this Data from the Trenches blog post. Today, we'll focus on one specific Transformer model called BERT (no, not the Sesame Street kind) and the fascinating new use cases that it's unlocking.

BERT Who?

BERT (Bidirectional Encoder Representations from Transformers) is a new model by researchers at Google AI Language, which was introduced and open-sourced in late 2018, and has since caused a stir in the NLP community. The key innovation of the BERT model lies in applying the bidirectional training of Transformer models to language modeling.
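How can a language model be trained "bidirectionally" without each word simply seeing itself? The BERT paper's answer is a masked language modeling objective: roughly 15% of token positions are selected, and the model must predict the original tokens at those positions from the context on both sides. Of the selected positions, 80% are replaced by a `[MASK]` token, 10% by a random token, and 10% are left unchanged. The sketch below illustrates only that input-corruption step, under simplifying assumptions: it samples each position independently (the original Google implementation instead picks a fixed number of positions per sequence), it works on whole words rather than WordPiece tokens, and `mask_tokens` is a hypothetical helper name.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"

def mask_tokens(
    tokens: List[str],
    vocab: List[str],
    rng: random.Random,
    mask_prob: float = 0.15,
) -> Tuple[List[str], List[Optional[str]]]:
    """Corrupt a token sequence for masked language modeling (hypothetical helper).

    Returns (corrupted, labels): labels[i] holds the original token wherever the
    model must make a prediction, and None everywhere else.
    """
    corrupted = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected: no prediction needed here
        labels[i] = tok  # the model must recover this from left AND right context
        r = rng.random()
        if r < 0.8:
            corrupted[i] = MASK  # 80%: replace with the mask token
        elif r < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, labels


sentence = "the man went to the store".split()
corrupted, labels = mask_tokens(sentence, vocab=sentence, rng=random.Random(0))
print(corrupted)
print(labels)
```

The 80/10/10 split exists because `[MASK]` never appears in real downstream text; occasionally showing random or unchanged tokens reduces the mismatch between pre-training and fine-tuning.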

As opposed to directional models, which read the text input sequentially (left-to-right or right-to-left), the Transformer encoder reads the entire sequence of words at once, so the representation of each word can draw on context from both its left and its right. BERT's results show that a bidirectionally trained language model can develop a deeper sense of language context and flow than single-direction language models.
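One way to see the difference is through attention masks, which decide which positions each token may look at. The minimal sketch below compares a left-to-right mask with the full, bidirectional mask of a BERT-style encoder for the ambiguous word "bank". It is an illustration only: the function names are hypothetical, and real models apply these masks inside the attention computation rather than to lists of words.

```python
from typing import List

def causal_mask(n: int) -> List[List[bool]]:
    # Left-to-right model: position i may attend only to positions 0..i.
    return [[j <= i for j in range(n)] for i in range(n)]

def bidirectional_mask(n: int) -> List[List[bool]]:
    # BERT-style encoder: every position may attend to the whole sequence.
    return [[True] * n for _ in range(n)]

def visible_context(tokens: List[str], mask: List[List[bool]], i: int) -> List[str]:
    # Words that position i is allowed to use when building its representation.
    return [tok for tok, allowed in zip(tokens, mask[i]) if allowed]


words = "he went to the bank to deposit cash".split()
i = words.index("bank")
print(visible_context(words, causal_mask(len(words)), i))
# ['he', 'went', 'to', 'the', 'bank']
print(visible_context(words, bidirectional_mask(len(words)), i))
# ['he', 'went', 'to', 'the', 'bank', 'to', 'deposit', 'cash']
```

The left-to-right view of "bank" never sees "deposit" or "cash", the very words that mark it as a financial institution rather than a riverbank.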

Variants of the BERT model are now beating all kinds of records across a wide array of NLP tasks, such as document classification, natural language inference (textual entailment), sentiment analysis, question answering, and sentence similarity.

Given the pace of development in NLP architecture over the last few years, we can expect these breakthroughs to start moving out of research and into concrete business applications.

Understanding Search Queries Better Than Ever Before

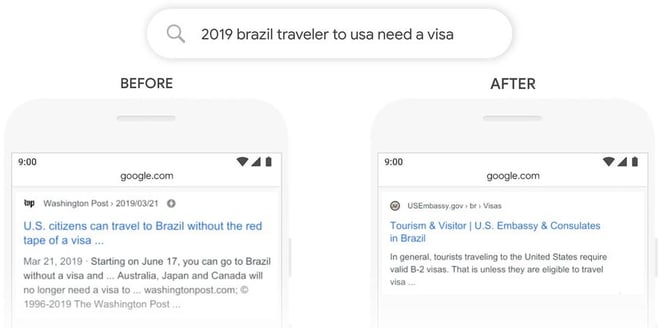

Since introducing the BERT model in 2018, the Google research team has applied it to improving the query understanding capabilities of Google Search. BERT models consider the full context of a word by looking at the words that come before and after it. This is particularly useful for understanding the intent behind search queries, especially longer, more conversational queries or searches where prepositions like “for” and “to” matter a lot to the meaning. Applied to both ranking and featured snippets, BERT helps Search better understand one in 10 English-language searches in the U.S.

The Google researchers’ decision to open-source their breakthrough model has spawned a wave of BERT-based innovations from other leading companies, including Microsoft, LinkedIn, and Facebook.

Image source: The Keyword, Google blog

Fighting Online Hate Speech and Bullying

Facebook, for instance, developed its own modified version of BERT, named RoBERTa, and applied it to one of the social network’s thorniest issues: content moderation.

Instead of having the algorithm learn the statistical map of just one language, Facebook had it learn multiple languages simultaneously. By doing this across many languages, the algorithm builds up a statistical image of what hate speech or bullying looks like in any language. This means that Facebook can now use automatic content moderation tools for a number of languages. Facebook claims that, thanks to RoBERTa, the amount of harmful content automatically blocked from being posted increased by 70% in just six months.

Recent breakthroughs such as Transformer models, BERT, and its variants are already being deployed by leading tech companies and will likely find an even wider range of applications in the near future. But companies at every level of technical maturity can benefit from an array of NLP use cases. Learn more about the latest developments in NLP techniques and how to derive business value from them in this ebook.