Even though machine learning (ML) has been around for decades, it seems that in the last year, much of the news (notably in mainstream media) surrounding it has turned to interpretability - including ideas like trust, the ML black box, and fairness or ethics. Surely, if the topic is growing in popularity, that must mean it’s important. But why, exactly - and to whom?

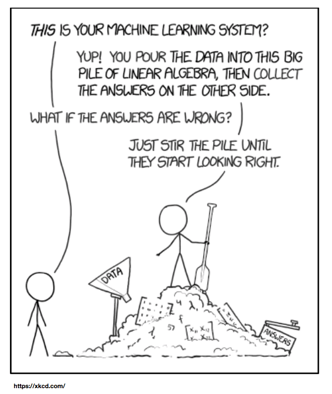

As machine learning advances and becomes more complex (especially with deep learning), its outputs become increasingly difficult for humans to explain. Interpretable machine learning is the idea that humans can - and should - understand, at some level, the decisions being made by algorithms.

People Care

One reason the issue is entering the mainstream in the first place is that algorithms are increasingly making decisions that truly affect lives. Ultimately, most people don't care whether a decision was made by a person or a computer - they want to know why it was made. By extension, that means people care about ML interpretability.

For example, one of the most promising use cases for AI in health care is selecting participants for clinical trials. People who are not selected (or, for that matter, people who are) reasonably want to know why. Financial services offer another example: Equifax recently debuted ML-based credit scoring, but that does nothing to quell the concerns of people who want to know why they received a certain score.

These developments also raise a concern that often goes hand in hand with model interpretability: trust, or more specifically, blind trust. If ML interpretability isn't pushed to the forefront, and if developers and regulators decide it's not a priority, people could come to trust ML decisions so completely that no one questions an algorithm's output at all. That is how AI could end up taking one big wrong turn.

Governments (Are Starting To) Care

The European Union's General Data Protection Regulation (GDPR) is likely the first of many regulations in this domain, and although it focuses largely on privacy, it also touches on interpretability. Its implications are often summarized as follows:

“Organizations that use ML to make user-impacting decisions must be able to fully explain the data and algorithms that resulted in a particular decision.”

Beyond the impact on people, regulation is one more sign that model interpretability matters more and more. Those outside the EU who still don't think this applies to them should think again: many US states have already introduced similar data protection laws, and that trend is likely to continue into 2019 and beyond.

Data Scientists, Engineers, Etc., Should Care

Customers and government regulation alone should be enough to make anyone who develops ML models and systems care about ML interpretability. Still, some skeptics say that only those in highly regulated industries need to be concerned with it.

In fact, everyone should care about ML interpretability, because it leads to better models: understanding and trusting models and their results is a hallmark of good science in general. Additional analysis to understand a model's decisions is one more check that the model is sound and performing as it should. Knowing why a model does what it does can help uncover and eliminate bias and other undesirable patterns and behaviors.