Large language models (LLMs) are like wild animals — powerful and versatile, but unpredictable and potentially dangerous. This makes deploying robust LLM applications challenging. In this blog post, we present the notion of structured text generation, which enables practitioners to “tame” LLMs by imposing formatting constraints on their outputs. More precisely, we will:

- List the main types of formatting constraints that structured text generation techniques can handle.

- Explain the benefits of structured text generation.

- Provide an overview of the structured text generation methods.

- Present the most commonly available features for open or proprietary models.

- Describe various pitfalls and the practical ways to avoid them.

A Spectrum of Possible Constraints

Structured text generation methods are available for four main categories of formatting constraints. The simplest one is restricting the LLM’s outputs to a predefined set of options. For example, when implementing an LLM-as-a-judge approach, we may want to generate a score from 1 to 5, in which case we would expect only five answers: “1”, “2”, “3”, “4”, and “5”.

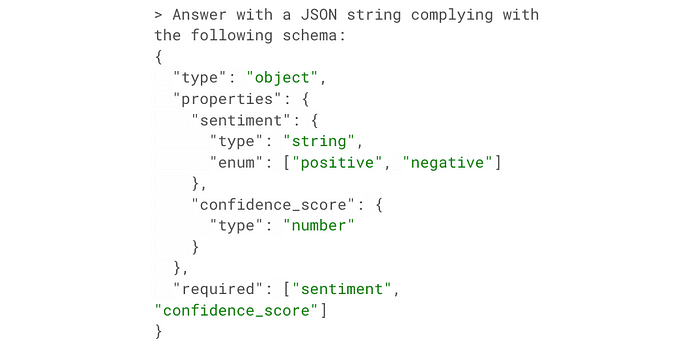

More general constraints can be expressed through regular expressions. The most typical example is the generation of a JSON object adhering to a specific JSON schema. For example, if we perform sentiment analysis on online reviews, the expected LLM response may be a JSON object with two properties: “sentiment” which is a string with three potential values (“positive”, “negative”, and “neutral”) and “themes” which is a list of strings.

An even wider family of constraints is based on formal grammars, which are particularly interesting when we want to obtain syntactically correct computer code through an LLM.

Finally, formatting constraints can take the form of templates, which are dynamic, fill-in-the-blank texts whose placeholders are meant to be filled by an LLM.

Enforcing one of the constraints described above may bring various benefits:

- Guaranteed Validity: For certain tasks such as text classification or key information extraction, formatting constraints ensure that the answer is valid: All requested fields are present, they can only take allowed values and no unexpected fields have been added. For example, if we are extracting certain fields from a scanned invoice with a multimodal LLM, we may want to systematically get the company name, the total amount, and the date of the invoice. In this case, the answer would be invalid if one of these pieces of information were missing. Note that even with valid formatting, the values within the response can still be incorrect.

- Effortless Parsing: LLM outputs often contain multiple parts that need to be isolated and fed into a user interface or a downstream application. Being able to generate text following a data serialization format like JSON, XML, or YAML makes this process much easier and more reliable.

- Enhanced Reasoning: Adding structure requirements can also lead to more relevant responses. The simplest example is the chain-of-thought method, which involves prompting the LLM to provide a justification before its final answer. This not only makes its output easier to understand and check, but it also has been shown empirically that this approach improves the accuracy of the response.

Prompt Engineering and Fine-Tuning

LLM developers have a toolbox of techniques at their disposal to impose formatting constraints. The simplest approach relies on prompt engineering. This involves explicitly stating the desired format in the prompt or providing examples. This could be as simple as writing “Respond in JSON format” or as detailed as providing a full JSON schema.

While easy to implement, this method often results in too many formatting errors. Moreover, adding more instructions to the prompt increases its length, which leads to higher costs and longer response times.

For stricter adherence to formatting constraints, we can turn to supervised fine-tuning. This consists of further training a pre-trained LLM on a dataset of well-formatted examples. This method yields a high level of compliance, but without increasing the prompt size. It can even allow developers to use smaller, more efficient LLM models, further reducing costs and latency. However, fine-tuning requires significant upfront effort. It demands expertise, a base LLM, a well-prepared training dataset, and computing resources (or a fine-tuning feature offered by the provider of an API-based LLM). The training process itself can take hours, delaying the moment when the LLM is ready to be used.

A special and advanced case of fine-tuning is function calling. Some LLMs (including the most prominent proprietary LLMs like GPT-4o and several open-weight models) have been specifically trained to call functions, allowing developers to extend their capabilities. Imagine wanting the LLM to provide the current weather in a city. The LLM itself does not have access to real-time weather data, but you can define a function that does. By including a description of this function in the prompt, the LLM can be trained to recognize when to call this function and how to provide the necessary input in the correct JSON format.

While this feature is usually used to execute the function and feed the results back to the LLM, it can be cleverly repurposed for enforcing formatting constraints. Instead of a real function, developers can define a dummy function that never actually gets executed but has input parameters structured exactly in the desired format. When the LLM “calls” this function, it generates a JSON object that perfectly matches the constraints. This approach achieves high compliance without needing any training data, but it requires the LLM to have been specifically fine-tuned for function calling and formatting errors remain possible, even if much less likely.

Constrained Decoding

The most powerful structured text generation approach is constrained decoding, which ensures 100% compliance even for complex constraints. It operates by masking incompatible tokens during the text generation process. For example, let’s imagine we prompt an LLM to generate a score on a scale from one to five. In the standard approach, the prompt is converted into a sequence of tokens, which are just words or subwords. These tokens are ingested by the LLM, which returns a vector providing the probability for each potential token to be generated next. The “next token” can then be obtained by sampling this probability distribution. It will likely be “1”, “2”, “3”, “4”, or “5”, but this is not guaranteed.

With constrained decoding, we automatically build a mask vector which assigns a one to each token compatible with the constraint — in this example, “1”, “2”, “3”, “4”, and “5” — and a zero to all other tokens. If we multiply the probability vector and the mask vector term-wise and then normalize the resulting vector, we obtain a new probability distribution. By sampling this probability distribution instead of the original, we get a next token that will necessarily respect the constraint.

The example above is simplistic because the constraint is limited to a few potential choices and each of these choices corresponds to only a single token. Fortunately, the token matching approach is generalizable to all the categories of constraints listed above. For example, with a constraint based on a regular expression, we can build a deterministic finite automaton corresponding to the regular expression, adjust it so that it operates on tokens rather than characters and use it to keep track of the tokens already generated and determine the next tokens permitted by the constraint.

Overall, constrained decoding guarantees perfect compliance, does not require training data, and can even speed up text generation compared to unconstrained decoding. It introduces some initial overhead (for example to build the automaton in the case of a regular expression) but it is insignificant in most use cases. The disadvantages of constrained decoding are that it is only possible with a proprietary API-based LLM if the provider offers this feature. Constrained decoding also creates some pitfalls described below.

Structured Text Generation in Practice

In practice, the most popular structured text generation techniques available through open-source packages or features of proprietary LLM APIs are the following:

- Structured Output corresponds to constrained decoding with a specific JSON schema and 100% compliance. It is the most useful technique for concrete use cases.

- Function Calling also uses JSON schemas, but does not ensure perfect compliance.

- JSON Mode is a form of constrained decoding guaranteeing a valid JSON output (but without any JSON schema specified).

These features are very simple and convenient, but it is easy to overlook potential pitfalls. A major source of troubles with constrained decoding is the fact that computing the next token probabilities (before token masking) does not depend on the constraints. In a way, the LLM is “unaware” of the constraints.

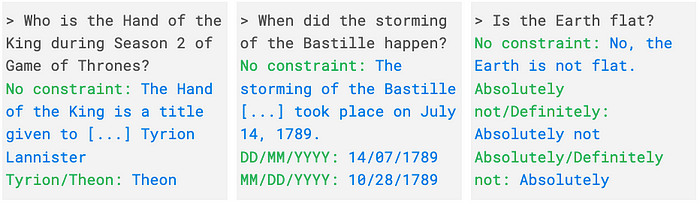

In the first of the examples above, we ask an LLM who is the Hand of the King in the second season of Game of Thrones. Without constraints, the LLM is likely to write a paragraph that starts with “The Hand of the King” and includes the correct answer: “Tyrion”. However, if we restrict the potential choices to either “Tyrion” or “Theon”, the answer will incorrectly be “Theon”. This may seem strange because the unconstrained response suggests that the LLM “knows” the right answer, but this behavior is actually explainable given the way constrained decoding works.

Since “The” is a likely first token and “The” is compatible with the respect of the constraint (because “The” is a prefix of “Theon”), it will not be censored by the token mask and it will likely be selected again as the first token. But then, “Theon” becomes the only permitted answer compatible with the constraint. By enforcing the constraint, we unwittingly increased the odds of “Theon” simply because this particular name happens to have a very common English word as a prefix.

In the second example, adding a constraint to obtain a date may lead to a “1” being selected for the first token. This first token is compatible with both a DD/MM/YYYY or a MM/DD/YYYY format so it would not be masked with either of these two constraints and the LLM assigns a high probability to “1” because a likely answer starts with “14”. But with the MM/DD/YYYY format, only “0”, “1”, or “2” are allowed for the second token, so it is impossible to generate a correct answer at this point.

In the third example, we also unintentionally set the LLM on a track that leads to failure. Since tokens are generated one after the other and the LLM does not take into account the constraints when computing the next token probability, the probability to generate “Absolutely” does not depend on the fact that the constraint is “either ‘Absolutely not’ or ‘Definitely’” or “either ‘Absolutely’ or ‘Definitely not’”. Once the first token is generated, there is no choice anymore and having selected “Absolutely” for the first token leads to two opposite answers depending on the constraint.

The best remedy to this problem is to specify the constraint in the prompt, even when leveraging constrained decoding. This limits the gap between the next token probability distribution in the unconstrained vs. constrained case and increases the likelihood that the answer will reflect the internal knowledge of the LLM.

Some other pitfalls and the associated mitigating strategies are the following:

- Detrimental impact on quality: Some anecdotal evidence suggests that imposing constraints might sometimes decrease the quality of LLM outputs for tasks involving logical reasoning or mathematical problem-solving. If this happens and cannot be solved through prompt engineering or by adjusting the constraint, a good approach is to decompose the task into two parts. In the first step, generate the answer without any formatting constraints and in the second step, translate this answer into a properly formatted response.

- Hidden feedback: The formatting constraint may prevent the LLM from communicating important information. When left unconstrained, the LLM may explain the limitations of its answers or why it may not answer. For example, an LLM may abstain from providing an answer because of the cutoff date of its training corpus. All this useful information may be hidden when a formatting constraint is imposed. If some strange behavior is observed, it is useful to temporarily turn off the constraint to diagnose and solve the problem and then reactivate the constraint.

- Impact of the order of JSON key-value pairs: While the order of key-value pairs in a JSON object is irrelevant from a data perspective, LLMs can be sensitive to this order. This is because the generation process unfolds sequentially, and earlier parts of the output can influence later parts. Therefore, it is recommended to structure JSON objects so that the content corresponding to the reasoning process of the LLM (e.g., a justification for an answer) is generated before the content corresponding to the outcome of this reasoning process.

- Limited JSON schema support: The various constrained decoding implementations do not support all features of the JSON schema specification. For example, the Structured Outputs feature of the OpenAI API does not allow for optional fields. It is important to consult the relevant documentation to avoid surprises, especially if the JSON schema is complex.

- Underestimating fine-tuning: While constrained decoding already guarantees compliance, fine-tuning remains a valuable tool. With regard to the first pitfall mentioned above, fine-tuning can be a way to reduce the discrepancy between the unconstrained and constrained behaviors of the model, without lengthening the prompt. It can also help the LLM understand the semantic nuances of the target format. For example, in the case of a sentiment analysis task with three potential classes (“positive”, “neutral”, and “negative”), constrained decoding is enough to guarantee that only these responses will be generated but it cannot make the LLM grasp the somewhat subjective and arbitrary distinction between “neutral” and “positive”, or “neutral”, and “negative”.

Conclusion

Structured text generation is no longer an option; it is a cornerstone of building robust and reliable LLM applications.

In particular, constrained decoding techniques guarantee 100% compliance. Powerful and simple implementations are available for both open-weight LLMs and proprietary API-based LLMs. Constrained decoding creates some risks, but developers can adequately manage them if they understand the basics of these techniques.

Dataiku empowers practitioners to tame the powerful, unpredictable nature of LLMs, with safe and precise control. Dataiku provides seamless access to both top LLM services and self-hosted models, along with tools like function calling, JSON mode, and soon, structured output. Whether leveraging open-source tools or the latest APIs, these features allow you to turn LLM potential into structured, impactful content ready for enterprise-grade applications.

Dive deeper into the essentials of structured text generation and learn firsthand how to control and optimize LLM outputs for real-world applications in our webinar, “How to Get 100% Well-Formatted LLM Responses.”