The use of data science, machine learning, and ultimately AI within the public sector — including defense — is on the rise, and the opportunities for even greater adoption appear boundless.

But not all AI is created equal, and there are a few best practices that public sector entities can adopt to improve their ability to execute on AI initiatives (to get the full story, grab a copy of the just-released ebooks on AI in the Public Sector and AI in Federal Defense).

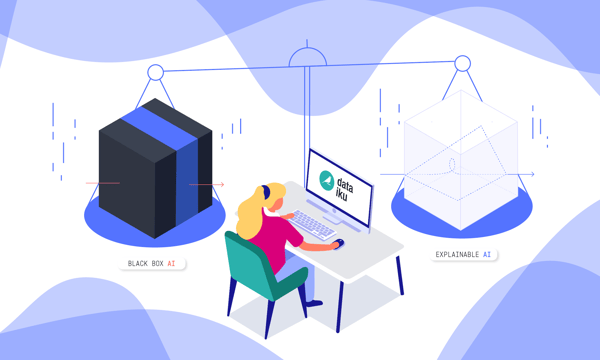

White-Box Strategies for Explainable AI

As machine learning advances and becomes more complex (especially when deep learning is involved), its outputs become increasingly difficult for humans to explain. Interpretable (or white-box) AI is the idea that humans can, and should, understand at some level the decisions being made by algorithms. This is critical for defense and civilian agencies alike.

Though deterministic decision models based on static rules (e.g., if X, then Y) are comforting in their simplicity, they lag far behind sophisticated AI and machine learning, which can weigh a host of variables and concerns in order to make recommendations. Probabilistic modeling provides weighted recommendations, and partial dependence plots show the extent to which specific variables contributed to a recommendation, providing transparency into otherwise elusive mathematical models.
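To make the idea of partial dependence concrete, here is a minimal sketch in plain Python. It assumes a toy logistic model with made-up weights and a handful of invented records (the feature names `age_of_asset` and `inspections_missed` are hypothetical, not drawn from any real agency dataset or from Dataiku). For each candidate value of one variable, it forces that value onto every record and averages the model's predicted probability, which is the quantity a partial dependence plot draws.

```python
import math

# Hypothetical toy model: a logistic scorer with made-up weights.
# In practice this would be any trained model that outputs a probability.
WEIGHTS = {"age_of_asset": 0.08, "inspections_missed": 0.9}
BIAS = -3.0


def predict_proba(row):
    """Return the probability (between 0 and 1) the toy model assigns to one row."""
    score = BIAS + sum(WEIGHTS[name] * row[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-score))


def partial_dependence(rows, feature, grid):
    """Average prediction over all rows with `feature` forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = dict(row)       # copy so the original record is untouched
            modified[feature] = value  # override only the feature under study
            total += predict_proba(modified)
        curve.append((value, total / len(rows)))
    return curve


# Invented records, for illustration only.
rows = [
    {"age_of_asset": 5, "inspections_missed": 0},
    {"age_of_asset": 12, "inspections_missed": 1},
    {"age_of_asset": 30, "inspections_missed": 3},
    {"age_of_asset": 18, "inspections_missed": 2},
]

curve = partial_dependence(rows, "inspections_missed", [0, 1, 2, 3])
for value, avg in curve:
    print(f"inspections_missed={value}: average predicted risk {avg:.2f}")
```

Because the assumed weight on `inspections_missed` is positive, the averaged risk rises as that variable increases. Reading that trend off the curve is the kind of transparency a partial dependence plot is meant to provide for a model whose internals are harder to inspect.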

Dataiku helps customers balance interpretability and accuracy by providing a data science, machine learning, and AI platform built for best-practice methodology and governance throughout a model’s entire lifecycle — read more about the white-box AI approach with Dataiku.

Embracing Open Source

Open source technologies in data science, machine learning, and AI are state of the art, and organizations that adopt them signal that they're dynamic and future-minded. In fact, the bleeding edge of data science algorithms and architecture is only about six months ahead of what is being open sourced.

The other advantage of open source is that it solves part of the hiring challenge: it can act as a sort of "technological honey" that attracts the best talent by letting people hone their skills on tools that will become more widespread in coming years. Organizations that use proprietary solutions can only recruit people who know (or are willing to learn) that specific solution.

But open source often lacks a layer of user friendliness, which can limit its adoption to only the most technical members of an organization. Proprietary solutions (like Dataiku, which — bonus — supports open-source tools, adding a layer of orchestration on top) have the advantage of being usable right out of the box for all profiles, and when it comes to operationalizing projects, they can make it easier to execute on use cases.

Investing in Tools that Empower

The good news is that for public sector entities looking to spin up data expertise, it's not just a matter of hiring the best external data scientists that money can buy. It's also a matter of leveraging existing staff from across the organization, whether or not they have formal training in data science. In many ways, especially in the public sector, upskilling existing staff is preferable. Hiring people who are disconnected from the organization's central mission and existing processes, no matter how skilled they are in machine learning, might result in AI solutions that don't make sense or don't align with larger objectives.

The right data tools therefore demystify AI and enable everyone to understand how AI systems work. That means data experts working in code alongside data beginners, who can use visual, point-and-click interfaces to get up to speed. With everyone working from one central environment, auditing becomes easier, enabling engagement without compliance risk (with the records to support any actions in the event of an audit).