$301 billion. That staggering number is the amount worldwide AI spending is projected to exceed by 2026, a sign that AI adoption and spending will continue the upward trajectory they have followed for the last few years. Despite this rapid expansion, though, AI project failure rates remain high.

The Dataiku-sponsored IDC InfoBrief “Create More Business Value From Your Organizational Data” reveals that the primary reasons behind these failures include AI technology not performing as expected or promised, a lack of production-ready data pipelines for diverse data sources, and results that were too disruptive to current business processes.

Let’s look at that a little more closely. If AI projects are failing, something must be going awry earlier in the process. In this blog post, we’ll dig into the top challenges for AI deployments revealed by the IDC InfoBrief, along with solutions for navigating them.

AI Challenge #1: Costs

According to the IDC InfoBrief, “Organizations are investing in AI technology to permanently reduce the cost of doing business, future-proof their business, and become a digital business.” But how do they get to the point where they can actually leverage AI to cut costs?

To generate short-term, material impacts on profit and loss, organizations must lower overall analytics and AI project costs so that the benefits are delivered more efficiently. To do so, they need to:

- Make sure they can push to production quickly: Packaging, releasing, and operationalizing analytics and AI projects is complex and time-consuming. Without a way to do it consistently, costs pile up in person-hours, and revenue is lost for as long as the model is not in production and able to drive tangible cost savings for the business.

- Avoid counteracting cost savings with high AI maintenance costs: Getting a model into production will be for naught without a robust MLOps strategy to control the cost of AI project maintenance.

- Facilitate more cost-saving use cases for the price of one: Teams can do this by empowering anyone (not just data experts) to reuse the work done on existing projects to spin up new ones, potentially revealing previously untapped cost-saving use cases.

- Reevaluate their data and analytics stack with an eye for cost: Ask critical questions about the existing stack, such as what challenges or inefficiencies it introduces, to uncover missed opportunities across every step of the lifecycle.

The IDC InfoBrief advises: “Look for a flexible AI platform that can quickly scale up and down based on current demand. Instead of implementing distinct solutions throughout the organization to handle small tasks, embrace the platform approach to support consistent experiences and standardization.”

AI Challenge #2: The Talent Gap

What’s fueling data and AI talent challenges, which in turn hinder AI deployments? According to the InfoBrief, “The great resignation/reshuffle along with existing shortage of AI skills — not just the data scientists and ML developers, but also business practitioners who understand the implications and use of AI — remain to be a big gap. Reskilling and training is an investment priority, along with the demand for democratization of AI tools and technologies.”

According to a McKinsey survey, 87% of companies are not adequately prepared to address these tech talent skill gaps. What’s worse, many companies mistakenly assume that intuitive tools can reduce (or even replace) the need for proper training and continuing education, tailored to different competency levels, around analytics and AI initiatives.

Because upskilling is fundamental to AI staffing, organizations need to be deliberate about embedding formal, continuous AI learning into employee education programs, so teams can access high-performing talent and shape it to the emerging needs of scaling AI. Helping staff understand how AI, data science, and ML fit into the company’s overall strategy can be just as critical as educating them on the concepts and technology themselves. By clearly communicating that value, organizations make it easier for existing employees to see how upskilling fits into the mix.

At Dataiku, we firmly believe in democratization, whereby the work of developing and using AI is shared among people with different and valuable skills and expertise. In addition to setting up formal upskilling and continuous education programs, organizations can provide tools that support those initiatives and allow domain experts to participate in the data science process, for example through features that support AutoML, augmented analytics, and no-code data cleaning or exploration.

AI Challenge #3: AI Governance

According to the IDC InfoBrief, “Upcoming regulations to ensure responsible AI deployments have increased the need of AI Governance offerings. Adequate volumes and quality of training data continue to be an inhibitor.”

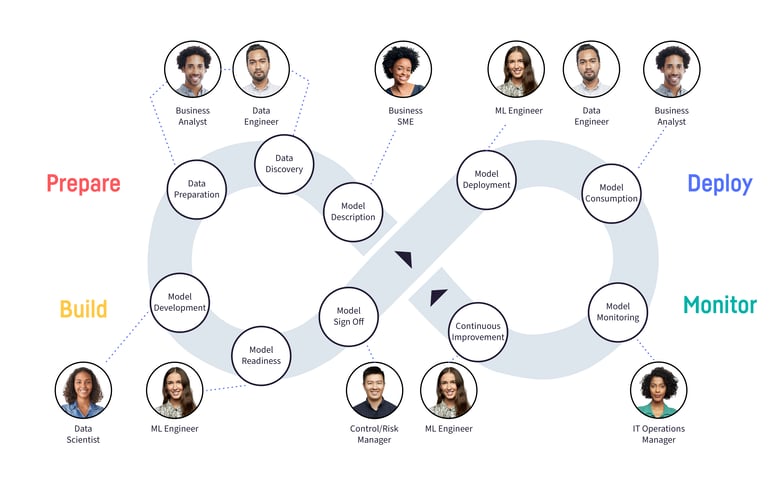

At Dataiku, our take is that as organizations continue to see AI as a differentiator, the desire to scale AI impact remains high. Scaling requires repeatable, robust MLOps processes that close the loop all the way from data prep and modeling to deployment and monitoring, keeping humans in the loop along the way.

MLOps cannot do this alone, however. It must work in tandem with Responsible AI (which ensures that end-to-end model management addresses the specific risks of AI) and AI Governance (which delivers end-to-end model management at scale, with a focus on risk-adjusted value delivery and efficiency in AI scaling, in alignment with regulations). So how can organizations move from theory to practice and implement sustainable AI Governance programs? A strong AI Governance framework should:

- Centralize, document, and standardize rules, requirements, and processes aligned to an organization’s priorities and values.

- Inform operations teams, giving build and deployment teams the right parameters to deliver.

- Grant oversight to business stakeholders so they can ensure that AI projects (with or without models) conform to requirements set out by the company.

Looking Ahead

If there’s one thing we know, it’s that from the mundane to the breathtaking, AI is already disrupting virtually every business process in every industry. Around 50% of respondents to the two surveys highlighted in the InfoBrief plan to use AI across business functions in the next 12 months. As adoption and integration rise, organizations can use these insights to accelerate productivity, efficiency, and time to insight.

IDC InfoBrief, sponsored by Dataiku, "Create More Business Value From Your Organizational Data," US49981822-IB, February 27, 2023