OpenAI’s ChatGPT, Microsoft’s Bing, and Google’s Bard are products built on top of a class of technologies called Large Language Models (LLMs). It is possible to use an LLM in the enterprise beyond the simple web interface that products like ChatGPT provide. Before diving in, however, it’s important to take a step back.

What are some useful, realistic applications of LLMs like ChatGPT for business that go beyond virtual assistants and chat bots? And how can you choose a use case for ChatGPT technology? If you’re interested in testing the usefulness of an LLM inside your organization, this blog post will detail key factors to consider.

ChatGPT Use Cases Require a Certain Risk Tolerance

If this is the first time you’re using LLM technology, choose a domain where there is a certain tolerance for risk. The application should not be one that is critical to the organization’s operations and should, instead, seek to provide a convenience or efficiency gain to its teams.

No one likes to think about artificial intelligence (AI) projects failing before they begin, but that’s the reality we live in. Recent research from IDC shows that 28% of AI projects still fail, and the top reason for failure (cited by 35% of respondents) is that the AI technology didn’t perform as expected or as promised.

Given that our understanding of how and why LLMs work is still only partial, it is important to evaluate the consequences if the use case you’re testing does not go as expected.

- Will there be damage to brand reputation or customer trust?

- Will there be damage to the level of organizational trust in AI projects?

- Are there risks regarding regulatory compliance or customer privacy?

These questions are good practice when evaluating the use of AI for any use case, but they’re especially important when it comes to the technology behind ChatGPT and use cases for business. LLMs are complex models that should still be leveraged somewhat cautiously in an enterprise context.

Keep Humans in the AI Loop

There is a lot of discussion around whether ChatGPT is trustworthy. Once you understand how LLMs actually work, you can see why ChatGPT provides no guarantee that its output is right, only that it sounds right.

After all, what makes LLMs impressive is not their ability to recall facts. For example, when you ask ChatGPT a question, it is not looking up anything about the query. The strength of LLMs lies in generating text that reads as if a human wrote it. In many cases, text that sounds right will also actually be right, but not always.

A law firm has been quoted as saying that they are comfortable using LLM technology to create a first draft of a contract, in the same way they would be comfortable delegating such a task to a junior associate. This is because any such document will go through many rounds of review thereafter, minimizing the risk that any error could slip through.

You can imagine lots of use cases like this, where technology makes the first pass and a human comes in later. From generating responses to customer service inquiries to writing product descriptions and other content, there is a wide range of possibilities.

Again, keeping a human in the loop is a general best practice for any AI project, but it’s especially important when leveraging powerful LLMs. Ultimately, we need people to do the hard things that machines can't, such as setting goals and guardrails, providing oversight, and developing trust.
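The draft-then-review pattern can be made explicit in code. The sketch below is a minimal, hypothetical Python example (every name in it is illustrative, not part of any Dataiku or OpenAI API): the model's output always starts as a draft, a named human can edit it and must approve or reject it, and the publishing step refuses anything that has not been approved. The `generate` argument stands in for whatever model call you actually use.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List, Optional


class Status(Enum):
    DRAFT = "draft"        # produced by the model, not yet reviewed
    APPROVED = "approved"  # a human reviewer signed off
    REJECTED = "rejected"  # a human reviewer sent it back


@dataclass
class Draft:
    prompt: str
    text: str
    status: Status = Status.DRAFT
    reviewer: Optional[str] = None
    notes: List[str] = field(default_factory=list)


def first_pass(prompt: str, generate: Callable[[str], str]) -> Draft:
    """The model makes the first pass; its output always starts life as a DRAFT."""
    return Draft(prompt=prompt, text=generate(prompt))


def review(draft: Draft, reviewer: str, approve: bool,
           edited_text: Optional[str] = None, note: str = "") -> Draft:
    """A named human may edit the text and must explicitly approve or reject it."""
    if edited_text is not None:
        draft.text = edited_text
    draft.reviewer = reviewer
    draft.status = Status.APPROVED if approve else Status.REJECTED
    if note:
        draft.notes.append(note)
    return draft


def publish(draft: Draft) -> str:
    """Release text only after human approval; anything else is blocked."""
    if draft.status is not Status.APPROVED:
        raise PermissionError(f"draft is {draft.status.value}; human approval required")
    return draft.text


if __name__ == "__main__":
    # Hypothetical stand-in for a real LLM call.
    fake_llm = lambda prompt: f"Draft reply about: {prompt}"
    d = first_pass("a delayed order", fake_llm)
    review(d, reviewer="support-agent-7", approve=True,
           edited_text="Sorry your order is late; it ships tomorrow.")
    print(publish(d))
```

Routing every release through a single `publish` gate is a design choice that keeps the human check from being skipped by accident.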

Leverage LLMs for Text- (or Code-) Intensive Tasks

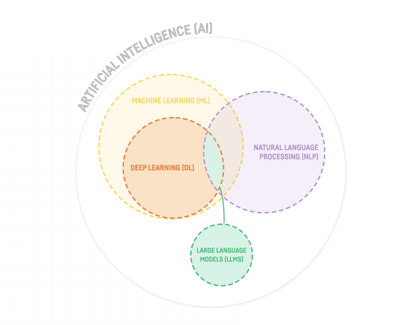

It’s important to lean on the strengths of generative AI and LLMs in particular when looking to leverage the technology behind ChatGPT for enterprise use. That means setting them to work on text-intensive or code-intensive tasks — in particular those that are “unbounded,” such as generating sentences or paragraphs of text. This is in contrast to “bounded” tasks, such as sentiment analysis, where existing, purpose-built tools leveraging natural language processing (NLP) can provide excellent results at lower cost and complexity.

For example, as an internal test of the technology, Dataiku used OpenAI’s GPT-3 model to generate full-text answers to user questions, drawing on the full contents of Dataiku’s extensive public documentation, knowledge base, and community posts.

This was an appropriate use case for a third-party model because all of the data is already public, and a large third-party model is well suited to handling the many different ways users may phrase their questions.

The results have been impressive, providing easy-to-understand summaries and often offering helpful context to the response. Users have reported that it is more effective than simple links back to the highly technical reference documentation.

With LLMs As With All AI: Business Value First

As always, and perhaps especially when there is a lot of excitement around a technology, it is important to come back to basics and ask whether the application is actually valuable to the business. LLMs can do many things — whether those things are valuable or not is a separate question.

Consider whether the anticipated benefits of using an LLM for a given use case justify the time and investment required to replace the existing process. If using AI wouldn’t provide greater value or return on investment (ROI), it’s probably not the best investment for that particular use case. Always ask: How will this use case for LLMs help me make money, save money, or do something I can’t do today?

For example, a Dataiku partner has developed a demonstration project that aggregates and summarizes physicians' notes within an electronic medical record system.

Using an open source BART model trained on medical literature, the system automates the drudgery of condensing the copious physicians' notes, which make up much of the unstructured enterprise data in health care, into short patient histories. Importantly, the detailed notes remain in the system, so a consulting physician can still get the full detail when needed. This is an example of a ChatGPT business use case with clear value: it automates a task that is tedious and expensive for a human to do today.
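The aggregate-then-summarize flow can be sketched in a few functions. This is a hypothetical Python outline, not the partner's implementation: notes are grouped per patient and ordered by date, a pluggable `summarize` function produces the short history, and the full notes stay attached so a consulting physician can still drill into them. The default summarizer is a trivial first-sentence extractor, included only so the sketch runs without a model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass
class Note:
    patient_id: str
    date: str  # ISO 8601 (YYYY-MM-DD), so string order matches chronological order
    text: str


@dataclass
class PatientHistory:
    patient_id: str
    summary: str       # short history for quick reading
    notes: List[Note]  # full notes kept alongside, never discarded


def first_sentence_summarizer(text: str) -> str:
    """Placeholder summarizer: keep the first sentence of each line. Swap in a real model."""
    sentences = []
    for line in text.split("\n"):
        line = line.strip()
        if line:
            sentences.append(line.split(". ")[0].rstrip(".") + ".")
    return " ".join(sentences)


def build_histories(
    notes: Iterable[Note],
    summarize: Callable[[str], str] = first_sentence_summarizer,
) -> Dict[str, PatientHistory]:
    """Group notes by patient, order them by date, and summarize each patient's record."""
    by_patient: Dict[str, List[Note]] = defaultdict(list)
    for note in notes:
        by_patient[note.patient_id].append(note)

    histories = {}
    for patient_id, patient_notes in by_patient.items():
        patient_notes.sort(key=lambda n: n.date)
        combined = "\n".join(n.text for n in patient_notes)  # notes joined oldest first
        histories[patient_id] = PatientHistory(patient_id, summarize(combined), patient_notes)
    return histories
```

To try an actual BART model, one option is the Hugging Face `transformers` summarization pipeline, for example `hf = pipeline("summarization", model="facebook/bart-large-cnn")` with `summarize=lambda t: hf(t)[0]["summary_text"]`. That checkpoint is a general-purpose news summarizer, not the medical-literature model described above (which the post does not name), and BART's input limit of roughly 1,024 tokens means long records would need to be chunked first.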

Bonus: In this use case, a selection of the summaries will be reviewed by a human expert to ensure that the system is functioning as expected and capturing the most relevant information. In other words, humans stay in the loop before the system is used in any production context.
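Reviewing a selection of summaries, rather than every one, can be made reproducible with a seeded random sample. The sketch below is illustrative only: the 10% sampling fraction, the minimum of five items, and the function names are assumptions, not figures from the post.

```python
import math
import random
from typing import Dict, List, Sequence, TypeVar

T = TypeVar("T")


def review_sample(items: Sequence[T], fraction: float = 0.10,
                  minimum: int = 5, seed: int = 0) -> List[T]:
    """Pick a reproducible random subset for expert review.

    Takes ceil(fraction * len(items)) items, but at least `minimum`
    (or all items if there are fewer than that).
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    n = min(len(items), max(minimum, math.ceil(fraction * len(items))))
    rng = random.Random(seed)  # fixed seed so the same batch yields the same sample
    return rng.sample(list(items), n)


def approval_rate(verdicts: Dict[str, bool]) -> float:
    """Share of reviewed summaries the expert marked as acceptable."""
    if not verdicts:
        raise ValueError("no verdicts recorded")
    return sum(verdicts.values()) / len(verdicts)
```

Fixing the seed means an auditor can regenerate exactly which summaries were checked; tracking the approval rate over successive batches gives a simple signal of whether the system is still performing as expected.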