As organizations monitor their recovery and adjust to the shifting economic landscape that followed the global health crisis, many face a daunting reality: their business was not equipped to pivot its operations in the face of mass disruption.

A survey in a new IDC Infobrief revealed that 87% of CXOs are prioritizing becoming a more intelligent enterprise over the next five years. How do they plan to get there? The answer is simple: data initiatives. In today's economic environment, organizations are beginning to view data as the “new water,” meaning it's not just a valuable asset but an essential ingredient for survival.

This way of thinking can be critical for data leaders who are considering a data science platform to streamline and accelerate these efforts. As part of that evaluation, however, organizations no longer have the luxury of slowly working out whether the platform's total cost of ownership (TCO) and overall value align with their broader business objectives and financial position. To succeed with data, that alignment has to be there, and it has to be achieved in a way that is well defined and clearly reduces TCO. We'll give a few examples of how this can be done below.

Capitalization and Reuse for Enterprise AI

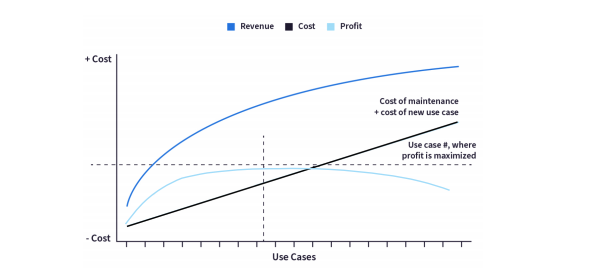

Maintaining and monitoring AI projects is a task that cannot be ignored, at least not without a financial impact. Because data is constantly changing, models can drift over time, becoming less effective or, worse, actively harming the business. And the more use cases a company takes on, the harder it becomes to keep up with maintenance, and the more that maintenance costs.

To reduce the costs associated with each step of the data-to-insights process (from data prep to model maintenance), teams can leverage reuse and capitalization. Reuse is the practice of avoiding rework in AI projects, for example making sure two data scientists from different business units aren't duplicating work on a project they are both involved in. Capitalization takes reuse a step further: it ensures that the costs incurred in an initial AI project are shared across other projects.

Consider an example. If a company is working on four primary use cases to jumpstart its AI efforts, it can also take on smaller use cases by reusing parts of the four main ones, thereby avoiding the need to start from scratch with data cleaning and prep, operationalization, monitoring, and so on. This approach can also help teams uncover hidden use cases that drive more value than originally anticipated, opening up new pockets of potential profit or cost savings. To learn more about how to efficiently leverage Enterprise AI, check out the full white paper, The Economics of AI: How to Shift AI From Cost to Revenue Center.
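To make the capitalization arithmetic concrete, here is a minimal Python sketch under loudly stated assumptions. The cost figures are hypothetical units, not data from the white paper. The shared foundation (data cleaning and prep, operationalization, monitoring) is assumed to be built once and split evenly across every use case that reuses it. The names `UseCase`, `cost_with_reuse`, and `cost_without_reuse` are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    # Cost of the work unique to this use case (hypothetical units).
    specific_cost: float


def cost_with_reuse(shared_cost: float, use_cases: list[UseCase]) -> dict[str, float]:
    """Capitalization: the shared foundation is built once and its cost is
    split evenly across every use case that reuses it (an assumption)."""
    share = shared_cost / len(use_cases)
    return {uc.name: uc.specific_cost + share for uc in use_cases}


def cost_without_reuse(shared_cost: float, use_cases: list[UseCase]) -> dict[str, float]:
    """No reuse: every use case rebuilds the shared foundation from scratch."""
    return {uc.name: uc.specific_cost + shared_cost for uc in use_cases}


# Hypothetical portfolio: four primary use cases plus two smaller ones
# that reuse parts of the primary ones.
portfolio = [UseCase(f"primary_{i}", 20.0) for i in range(1, 5)]
portfolio += [UseCase("smaller_1", 5.0), UseCase("smaller_2", 5.0)]
SHARED_FOUNDATION = 100.0  # prep, operationalization, monitoring (hypothetical)

with_reuse = sum(cost_with_reuse(SHARED_FOUNDATION, portfolio).values())
without_reuse = sum(cost_without_reuse(SHARED_FOUNDATION, portfolio).values())
print(f"Total with reuse:    {with_reuse:.0f}")
print(f"Total without reuse: {without_reuse:.0f}")
```

Under these made-up numbers, the six use cases cost 190 units with reuse versus 690 without, because the 100-unit foundation is paid for once rather than six times. Real projects rarely reuse every component, so the shared fraction would usually be smaller, and the even split is a simplification.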

Before we give specific examples of how Dataiku can help optimize the TCO of a data science platform, it is important to remember that each organization is at a different stage of its journey toward a data-driven culture that transcends teams, processes, and technology. Some organizations may already have fundamental use cases in place that allow the business to optimize costs and accelerate its ability to execute mission-critical business functions, while others may not be in this position.

Reducing the TCO for a Data Science Platform With Dataiku

When organizations run a TCO analysis to determine the optimal data science and machine learning platform for their needs (looking beyond the value being promised), there are a few things to keep in mind.

First, it is important to compare apples to apples. Are the solutions comparable from a capabilities perspective? Next, the costs involved with each platform should be properly scoped. What should be included? What existing costs will be impacted by the platform? What costs will remain the same? Finally, run different customer scenarios, taking into account team size, data needs, and complexity levels of varying analytics projects. Below is a non-exhaustive round-up of how we believe Dataiku can drastically reduce the TCO for data science initiatives. The end-to-end platform:

1. Enables data prep and AutoML, removing the cost of licensing, enablement, and integration for separate data prep and AutoML products

2. Is completely server-based, removing the cost of maintaining desktop-based products

3. Enables the management of Spark clusters, removing the overhead of paying for a Spark management solution

4. Comes with a strong operationalization framework, removing the need to build a fully fledged, code-based CI/CD framework on top of the existing solution

5. Provides a clear upgrade path with no need to transition platforms or migrate in the future

6. Minimizes the overall number of tools by offering one truly comprehensive platform, avoiding the need to cobble together multiple tools for ETL, model building, operationalization, and so on

7. Promotes reuse via capitalization, allowing the organization to share the cost incurred from an initial AI project across other projects, resulting in multiple use cases for the price of one, so to speak

8. Is future-proofed and technologically relevant, helping teams avoid significant upgrade costs or lock-in when faced with limited infrastructure options that can hinder growth

Dataiku manages the entire data science pipeline for teams in a way that is flexible, collaborative, and governable. Now is the time for organizations to reassess their AI use cases (or decide which one to begin with, if they are just getting started) in order to maximize ROI and sustain new value, making reuse and capitalization a cornerstone of every use case. By finding efficiency gains and cost optimizations, organizations will be able to use data science and AI as the gateway to becoming a smarter organization.