Ever wondered if your model could improve from a performance score of 0.38 to 0.89? At Everyday AI NYC 2023, Emma Huang and Cassie Chuljian, senior data scientists at Dataiku, unraveled the secrets behind optimizing model metrics. This blog post walks through the seven-step journey they presented to help elevate your data science game.

First Things First

Picking the Right Project

Emma and Cassie’s adventure began by choosing a project that would challenge their data science prowess. Riding the coattails of a global phenomenon named "Barbenheimer," they decided to predict the box office performance of the movies Barbie and Oppenheimer. Armed with a dataset that contained metadata on over 45,000 movies, they aimed to predict movie ratings categorized into low, medium, and high values. Their metric of choice for optimization was the F1 score.
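For readers who want to see what the metric measures, here is a minimal sketch of a multi-class F1 score. The talk recap does not say which averaging was used, so macro averaging (an unweighted mean of per-class F1) is an assumption here, and the labels are made up for illustration.

```python
from collections import Counter

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (macro averaging is an assumption)."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative
    scores = []
    for label in labels:
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0 when the class never appears
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores.append(2 * tp[label] / denom if denom else 0.0)
    return sum(scores) / len(scores)

# Made-up labels, not data from the talk
y_true = ["low", "low", "medium", "medium", "high", "high"]
y_pred = ["low", "medium", "medium", "medium", "high", "low"]
score = macro_f1(y_true, y_pred)
```

Because every class counts equally under macro averaging, a model that ignores a rare class is penalized even if its overall accuracy looks fine.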

After uploading the movie data, cleaning out invalid records, and training a multi-class classification model, they were met with disappointment: a model score of 0.38, far too low for production deployment. The goal was clear: improve the model's predictive power.

Setting the Stage With Classification

The next step is to understand the nature of the chosen use case. In Emma and Cassie’s example, they decided on classification, categorizing box office scores into low, medium, and high. After this, you are ready to start thinking about model metrics.

Where Things Get Serious

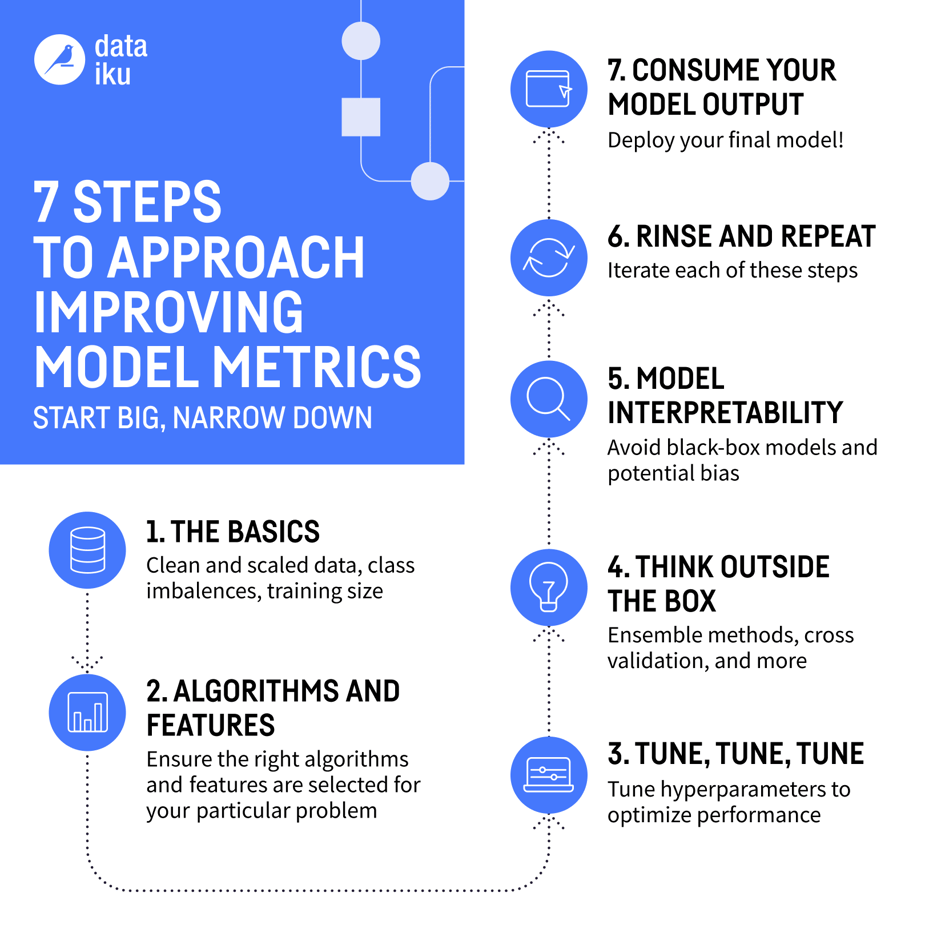

Step 1: The Basics - Ensuring a Solid Foundation

Garbage in, garbage out: the importance of clean data cannot be overstated. This first step covers cleaning the data, handling missing values, and scaling numerical features. Balancing classes and optimizing training data size can further refine the project’s foundation. For Emma and Cassie, this step resulted in a significant jump in the model score from 0.38 to 0.48.
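As a rough illustration of these basics, the sketch below fills missing values with the median, standardizes a numeric column, and computes balanced class weights. The column name, the values, and the choice of median imputation and inverse-frequency weights are all assumptions for the example, not details from the talk.

```python
from collections import Counter
from statistics import mean, median, pstdev

def impute_median(values):
    """Replace None entries with the median of the observed values."""
    fill = median(v for v in values if v is not None)
    return [fill if v is None else v for v in values]

def standardize(values):
    """Rescale to zero mean and unit variance (population standard deviation)."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma if sigma else 0.0 for v in values]

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {label: n / (k * c) for label, c in counts.items()}

# Hypothetical column with two missing entries
runtime_minutes = [90, None, 120, 150, None, 100]
clean = impute_median(runtime_minutes)
scaled = standardize(clean)

# Hypothetical imbalanced target: "medium" dominates
weights = balanced_class_weights(
    ["low", "medium", "medium", "medium", "high", "medium"]
)
```

Here the rare "low" and "high" classes each get a weight of 2.0 while "medium" gets 0.5, so mistakes on the rare classes cost more during training.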

Step 2: Algorithm and Feature - Making the Necessary Adjustments

Choosing the right algorithms and features is pivotal. This can require some experimenting with various algorithms and balancing computational resources and performance. You then dive into feature selection — eliminating irrelevant features and engineering new ones. In this example, this step led to the adoption of gradient boosted trees and a boost in their model's performance.
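One simple way to start eliminating features is to drop columns that never vary and columns that nearly duplicate one already kept. The sketch below shows that filter only. It is not the selection method used in the talk, and the column names and the 0.95 correlation threshold are hypothetical.

```python
from statistics import mean, pvariance

def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    norm = (sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys)) ** 0.5
    return cov / norm if norm else 0.0

def select_features(columns, max_corr=0.95):
    """Keep features that vary and are not near-duplicates of a kept feature."""
    kept = []
    for name, values in columns.items():
        if pvariance(values) == 0:
            continue  # a constant column carries no signal
        if any(abs(pearson(values, columns[k])) > max_corr for k in kept):
            continue  # redundant with an already-kept feature
        kept.append(name)
    return kept

# Hypothetical movie features
columns = {
    "budget_musd": [10, 50, 100, 150, 200],
    "budget_usd": [10e6, 50e6, 100e6, 150e6, 200e6],  # same information, other unit
    "is_movie": [1, 1, 1, 1, 1],                      # constant
    "runtime_min": [95, 130, 88, 142, 101],
}
selected = select_features(columns)
```

A filter like this only removes obvious dead weight. Judging whether a feature is truly irrelevant to the target still takes model-based checks and domain knowledge.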

Step 3: Tune, Tune, Tune - Refining the Model

The next step to further optimize the model is hyperparameter tuning. Using a systematic approach, Emma and Cassie generated 1D hyperparameter search plots to evaluate trends in each hyperparameter, then used those trends to update the search ranges for training future models. Strategies like grid search and random search made the tuning more efficient and led to a refined model with new hyperparameters and a higher F1 score.
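To make the idea concrete, here is a small sketch of random search followed by a 1D summary of one hyperparameter. The scoring function is a toy stand-in for a cross-validated F1 score, and the hyperparameter names and ranges are assumptions, not the ones from the talk.

```python
import random
from collections import defaultdict

def toy_score(max_depth, learning_rate):
    """Toy stand-in for a cross-validated F1 score; peaks at depth 6, rate 0.1."""
    return 1.0 - 0.02 * (max_depth - 6) ** 2 - 4.0 * (learning_rate - 0.1) ** 2

def random_search(n_trials, seed=0):
    """Sample hyperparameter combinations at random and score each one."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        params = {
            "max_depth": rng.randint(2, 10),
            "learning_rate": rng.choice([0.01, 0.05, 0.1, 0.2, 0.3]),
        }
        trials.append((params, toy_score(**params)))
    return trials

def trend_1d(trials, name):
    """Mean score per value of one hyperparameter: the data behind a 1D plot."""
    buckets = defaultdict(list)
    for params, score in trials:
        buckets[params[name]].append(score)
    return {value: sum(s) / len(s) for value, s in sorted(buckets.items())}

trials = random_search(n_trials=200)
best_params, best_score = max(trials, key=lambda t: t[1])
depth_trend = trend_1d(trials, "max_depth")
```

If the trend for a hyperparameter is still rising at the edge of its range, that is a hint to widen the range in the next round. A clear peak in the middle suggests narrowing it.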

Step 4: Think Outside the Box

When conventional methods fall short, explore alternative approaches. Ensembling techniques like random forests and gradient boosting can help bring fresh perspectives. Emma and Cassie considered switching problem framing between regression and classification and explored cascading models. They then used cross-validation and testing to enhance hyperparameter selection, ensuring robust model performance.
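The cross-validation idea can be sketched in a few lines: split the data into k folds, hold each fold out in turn, and average the scores. The example scores a majority-class baseline purely to stay self-contained. The labels, fold count, and baseline model are assumptions for illustration.

```python
import random
from collections import Counter

def k_fold_indices(n_samples, k, seed=0):
    """Shuffle indices once, then yield (train, test) index lists for each fold."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test

def cross_val_accuracy(labels, k=5):
    """Score a majority-class baseline on each held-out fold."""
    scores = []
    for train, test in k_fold_indices(len(labels), k):
        # "Training" here just means finding the most common class in the train split
        majority = Counter(labels[i] for i in train).most_common(1)[0][0]
        scores.append(sum(labels[i] == majority for i in test) / len(test))
    return scores

# Made-up, imbalanced labels
labels = ["medium"] * 12 + ["low"] * 4 + ["high"] * 4
fold_scores = cross_val_accuracy(labels, k=5)
mean_score = sum(fold_scores) / len(fold_scores)
```

Averaging over folds gives a steadier estimate than a single train/test split, which matters when comparing hyperparameter settings whose scores are close.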

Step 5: Model Interpretability - Understanding the Model's Decisions

The final tangible step involves understanding your model's decisions. Model interpretability plays a crucial role, offering insights into feature importance, biases, and potential areas for improvement. Techniques like feature importance analysis, partial dependence plots, and error analysis can help provide a deeper understanding of your model.
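One common way to estimate feature importance is permutation importance: shuffle one feature at a time and measure how much the score drops. The sketch below uses accuracy and a toy rule-based model as stand-ins. The model, feature names, and data are hypothetical and not taken from the talk.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Mean drop in accuracy when one feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = {}
    for feature in rows[0]:
        drops = []
        for _ in range(n_repeats):
            column = [r[feature] for r in rows]
            rng.shuffle(column)
            # Rebuild the rows with only this one feature permuted
            shuffled = [{**r, feature: v} for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances[feature] = sum(drops) / n_repeats
    return importances

# Hypothetical stand-in model: it only ever looks at the budget
def toy_model(row):
    return "high" if row["budget_musd"] >= 100 else "low"

rows = [
    {"budget_musd": b, "runtime_min": r}
    for b, r in [(10, 95), (40, 130), (150, 88), (200, 142), (20, 101), (120, 99)]
]
labels = [toy_model(r) for r in rows]
importances = permutation_importance(toy_model, rows, labels)
```

Shuffling a feature the model never uses leaves the score unchanged, so its importance comes out as zero. A large drop flags a feature the model leans on heavily, which is also a good place to look for leakage or bias.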

Step 6: Rinse and Repeat - The Art of Iteration

Iteration is the heartbeat of data science. The journey through these seven steps is not a one-time affair. It's an ongoing process of experimentation and refinement. Revisiting combinations, tweaking algorithms, and re-evaluating features ensure continuous improvement.

Step 7: Consume Your Model Output - A Model Ready for Action

After tirelessly iterating through these steps, your model will have evolved. From an initial score of 0.38, Emma and Cassie witnessed a remarkable transformation, culminating in a performance exceeding 0.89. Now it’s your turn to show that your models can get there too.