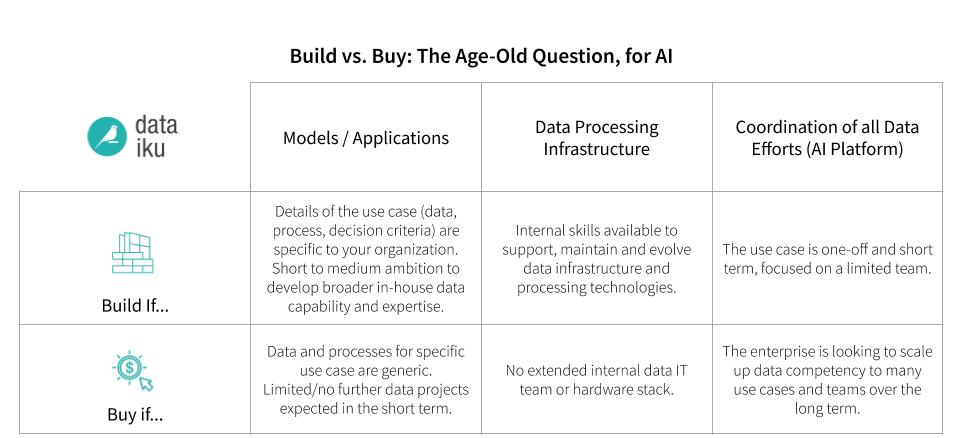

In the world of data science, machine learning, and AI, there is no shortage of available tools, both open source and commercial. This inevitably raises the age-old software question of build vs. buy for AI projects and platforms.

Build vs. buy is usually a trade-off between cost, risk, customization, and lock-in; however, in the age of AI, even this traditional question takes on a different dimension.

Is the question to make or buy models?

Data processing tools?

Or the platform that holds it all together?

Actually, all three are relevant, and here are some considerations to account for when deciding what’s best for your business.

Make or Buy: Models and/or Applications

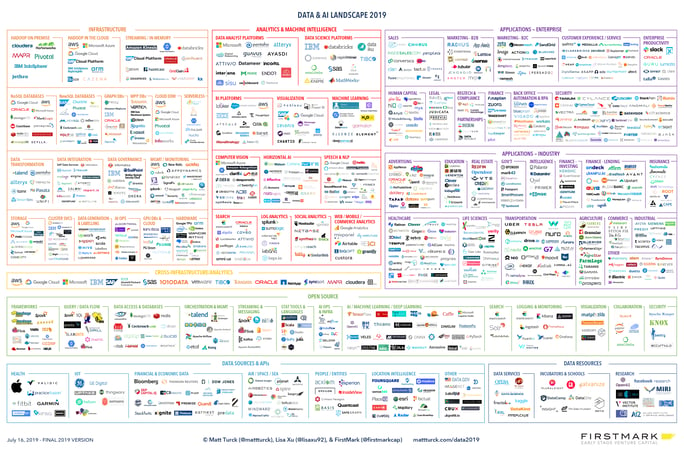

Take a look at the Data & AI Landscape 2019 chart. A large part of it is dedicated to industry-specific applications and models. Predictive maintenance, customer segmentation, and fraud detection now all have very credible players that have developed and tuned packaged solutions for clearly identified use cases.

Image source: Matt Turck, A Turbulent Year: The 2019 Data & AI Landscape


These pre-packaged solutions can save significant time and effort, but they come with two potential drawbacks:

- Efficiency often comes at the expense of developing internal expertise on critical subjects.

- The organization can end up boxed into the specifics of the selected solution, unable to expand easily to other use cases.

The key elements to assess make vs. buy in this scenario typically are:

- Is this use case industry generic? Buying a ready solution requires the data and processes currently used in the organization to be highly compatible with the expected input and behavior of that solution. If a use case’s details, data, or processes are fairly specific to your organization, the energy spent adjusting internal operations to match the packaged solution’s specifications may negate the benefit of buying something ready made. If it is truly generic, there can be great benefit from a packaged solution.

- Is this use case a key part of a global data initiative? The benefit of the work done to deploy a specific AI use case goes well beyond that use case itself. Often, first use cases are the start of a full data initiative. As a result, an application make or buy decision should also take into account the company’s global data strategy: sometimes, the acquisition of expertise and technology is an end in and of itself.

Make or Buy: Data Processing

Data infrastructure and processing technologies have evolved (and are evolving) at an alarming pace. Traditional vendors, open source, and cloud-based solutions compete with each other on every segment of the data processing and storage stack. Here, make or buy has taken on a slightly different meaning and is usually more centered on delegating responsibility:

- Make or buy support and/or maintenance (typically full open source vs. independent software vendor)

- Make or buy operations (cloud vs. on-premise)

- Make or buy accessibility (packaged cloud tools vs. native cloud tools)

When it comes to data processing, the main decision drivers are no longer use cases but internally available skills and lock-in risk.

Make or Buy: Data Science, Machine Learning, and AI Platforms

People, data infrastructure, models, and business value all evolve at very different paces in many different ways. Orchestrating everything together to ensure sustainable success in AI requires significant effort, which can lead to another make or buy decision.

But again, here, the decision drivers are different, this time based on the fundamentals of the organization’s data approach:

- Is it a long-term or one-off data initiative? AI technologies come and go, with no sign of the pace of innovation slowing. Setting up Hadoop or Pig clusters is an example of a technological decision that was sound just a few years ago and no longer is. Technologies become obsolete in about as much time as is required to set them up properly, as a few key technologies of the big data, then data science, and now AI eras illustrate.

If the initiative is meant to be long term, acquiring a full platform able to accommodate fast-evolving data technologies may be relevant, while for short-term initiatives, it may be possible to assemble a solution from today’s components.

- What is the expected scale of the data initiative? On small-scale AI projects, little overarching cohesion or coherence is required, which leads to a more natural “make” scenario. If the broader vision is to use data to augment a significant part of the organization’s activity, it will require the inclusion of many different skills and company processes to support the design and operationalization of projects. In this case, a buy approach is likely more relevant.

For example, commercial AI platforms do more than allow teams to complete a single data project from start to finish; they introduce efficiencies everywhere in order to scale. That includes features for:

- Spending less time cleaning data (and doing other parts of the data process that don’t provide direct business value).

- Smoothing production issues and avoiding reinventing the wheel when deploying models on a daily basis.

- Building in documentation and the sharing of best practices for reproducibility (and, in some cases, to speed up meeting regulatory requirements).

Time is money: Introducing efficiencies everywhere at scale is one advantage of a "buy" approach to AI platforms.


Addressing the Fear of Lock-In

Choosing the wrong vendor to provide AI solutions can mean being locked in to a tool that is slow to innovate, doesn’t provide the right security controls, lacks features the business needs down the line, or falls short in a host of other ways.

For example, steer clear of vendors that don’t allow the use of open source technology, that make users learn an entirely new language (which means slow onboarding and a barrier to entry), that lock the business into using one kind of data storage, one kind of architecture, etc., or that don’t value and pursue a vision of sustainable AI. Another small but helpful feature to look for to minimize fear of vendor lock-in is the ability to export all models and code if necessary.

As Usual: Time is Money

There is no question that businesses able to successfully leverage AI to improve processes, provide new services, increase revenue, or decrease risk will be the ones to get ahead. That means time is of the essence.

So when it comes to build vs. buy (whether for models/applications, data processing, or AI platforms), one of the key questions is: how long will it take not just to get up and running at a minimum viable level, but to really be able to execute at a level that will take the organization from one model per year to hundreds (or thousands)?