In the weeks before Christmas, I was privileged to open a full-day workshop with industry leaders from across the Netherlands to explore the intricacies of AI Governance. The session in Amsterdam brought together organizations from the banking, telco, automotive, and manufacturing sectors, amongst others, to share challenges and best practices for placing AI Governance at the heart of business operations.

Assembling a group of people excited to dedicate a full day to AI Governance is always a moment to be savored, but the sheer diversity of this group, representing a broad spectrum of industries, struck me as particularly special.

Industries were always likely to move at different paces in their adoption of AI Governance, some being naturally more accustomed than others to adapting business processes to ever-evolving regulations. The mood in the room, however, and indeed the quality of the discussion, painted a different picture. Each participant was acutely aware of the importance of AI Governance and of the myriad risks they may be exposed to if they fail to get it right.

In this post, we will examine what a recent crisis of AI Governance may have to teach us, how executives and team leads can prepare for the approaching developments in the regulatory landscape, and the likely costs of compliance.

When Risk Hits Close to Home

What was the cause of this coordinated push for better AI Governance? Was there something in the water? As it turns out, there was — in the Netherlands it is known as the “toeslagenaffaire” or the child care benefits scandal.

The case broke in 2019, when it was revealed that for over six years the Dutch tax authorities had used a fraud detection algorithm to assign risk categorizations to child care benefit claimants. With seemingly no human in the loop to provide oversight, families were penalized for benefits fraud based solely on the AI system’s risk indicators, which were demonstrably biased. Tens of thousands of families were hit with exorbitant tax bills and pushed into poverty, and many were denied payment arrangements. More than a thousand children were taken into foster care. The Dutch government was eventually forced to resign.

There are countless other examples of algorithmic bias, from the U.K. Government’s misguided attempt to use an algorithm to assign exam grades during the COVID pandemic to Amazon’s sexist hiring algorithm. The common factor in all of these examples, and many like them, is a fundamental failure to implement robust AI Governance. The “toeslagenaffaire”, however, stands apart as a scandal that struck at the heart of government, with devastating effects on families and on society.

Regulation and the Race to Define When AI is "High Risk"

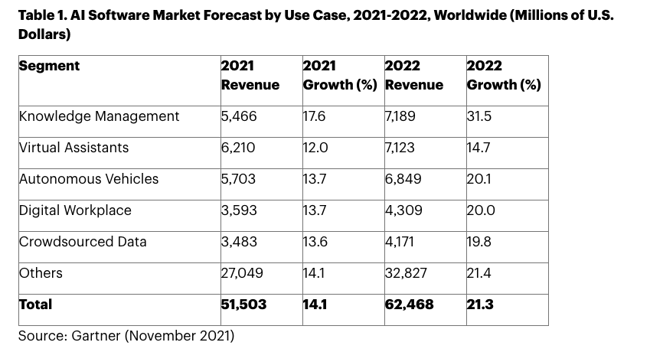

The global AI market has grown from $5 billion in 2015 to an estimated $62 billion in 2022, and PwC predicts that by 2030 AI could contribute $15.7 trillion to the global economy, lifting global GDP by 14%.

While some guidance and legal structures do exist globally to address how algorithms are designed and used in society (the Dutch courts ruled in 2020 that the algorithm at the center of the toeslagenaffaire violated human rights and the GDPR), the extraordinary growth of AI as it enters mainstream adoption has continued largely unregulated.

Globally, the regulatory landscape for how companies design and monitor their AI systems is shifting. The European Union (EU) has often been at the forefront of regulating technology markets on issues of digital privacy and competition law, and the proposed EU AI Act again has the potential to shape markets beyond Europe’s borders.

Since the draft text was introduced in April 2021, everyone from academics and think tanks to tech companies and churches has wanted a say in how the EU regulates AI and, crucially, how it defines which systems are considered “high-risk.” With possible penalties of up to 6% of global annual turnover, the stakes are high.
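To make the stakes concrete, the sketch below computes the penalty ceiling for the most serious infringements as set out in the April 2021 draft: EUR 30 million or 6% of total worldwide annual turnover, whichever is higher. Lower ceilings apply to other infringements, and the figures may change as the text is negotiated, so treat this as an illustration rather than legal guidance. The function name `max_fine_eur` is our own.

```python
def max_fine_eur(worldwide_annual_turnover_eur: int) -> int:
    """Ceiling for the most serious infringements in the April 2021 draft:
    EUR 30 million or 6% of total worldwide annual turnover, whichever is higher.
    Integer arithmetic avoids floating-point rounding on large amounts."""
    fixed_cap = 30_000_000
    turnover_cap = worldwide_annual_turnover_eur * 6 // 100
    return max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    for turnover in (100_000_000, 2_000_000_000, 50_000_000_000):
        print(f"turnover EUR {turnover:,} -> max fine EUR {max_fine_eur(turnover):,}")
```

For a company with EUR 100 million in turnover, the fixed EUR 30 million cap dominates; at EUR 2 billion, the turnover-based cap (EUR 120 million) takes over, which is why large organizations feel the 6% figure most.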

While some AI systems will be banned outright, it is the definition of which systems count as “high-risk” that currently appears most fundamental to the policy implications of the legislation. The EU AI Act takes a pragmatic, two-step approach to how companies should govern their AI: first, assign a risk category to the AI system; then develop, deploy, and monitor that system according to the requirements of the assigned category.
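The two-step approach can be sketched in a few lines of Python. This is a minimal illustration, not a legal classification: the four tiers follow the structure of the draft (prohibited, high-risk, limited-risk with transparency obligations, and minimal-risk), but the use-case tags in the hypothetical `USE_CASE_TIERS` table are our own, and the high-risk obligations are paraphrased from the draft’s requirement headings. Unknown use cases default to high-risk, a deliberately cautious assumption.

```python
from enum import Enum


class RiskCategory(Enum):
    """Risk tiers as structured in the April 2021 draft of the EU AI Act."""
    UNACCEPTABLE = "unacceptable"  # prohibited practices
    HIGH = "high"
    LIMITED = "limited"            # transparency obligations only
    MINIMAL = "minimal"


# Hypothetical, heavily simplified mapping from use-case tags to tiers.
# A real classification needs legal review against the final text.
USE_CASE_TIERS = {
    "public_social_scoring": RiskCategory.UNACCEPTABLE,
    "recruitment_screening": RiskCategory.HIGH,
    "public_benefits_eligibility": RiskCategory.HIGH,
    "credit_scoring": RiskCategory.HIGH,
    "customer_chatbot": RiskCategory.LIMITED,
    "spam_filter": RiskCategory.MINIMAL,
}

# Obligations attached to each tier (paraphrased, not exhaustive).
REQUIREMENTS = {
    RiskCategory.UNACCEPTABLE: ["do not deploy"],
    RiskCategory.HIGH: [
        "risk management system",
        "data and data governance",
        "technical documentation",
        "record-keeping (automatic logging)",
        "transparency and information to users",
        "human oversight",
        "accuracy, robustness and cybersecurity",
        "conformity assessment before deployment",
    ],
    RiskCategory.LIMITED: ["tell users they are interacting with an AI system"],
    RiskCategory.MINIMAL: [],
}


def classify(use_case: str, default_to_high: bool = True) -> RiskCategory:
    """Step 1: assign a risk tier; unknown use cases default to HIGH."""
    if use_case in USE_CASE_TIERS:
        return USE_CASE_TIERS[use_case]
    return RiskCategory.HIGH if default_to_high else RiskCategory.MINIMAL


def obligations(use_case: str) -> list[str]:
    """Step 2: return a copy of the obligations for the assigned tier."""
    return list(REQUIREMENTS[classify(use_case)])


if __name__ == "__main__":
    for case in ("public_benefits_eligibility", "customer_chatbot", "new_internal_tool"):
        tier = classify(case)
        print(f"{case}: {tier.value}, {len(obligations(case))} obligation(s)")
```

Keeping the tier assignment separate from the obligations lookup mirrors the two steps: when regulators refine which systems are “high-risk,” only the first table needs to change.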

Of the permitted categories, “high-risk” carries the most rigorous (and currently the most clearly defined) compliance requirements. The draft also recommends that, for the avoidance of doubt, companies may wish to comply with the requirements for high-risk AI systems wherever possible. It remains to be seen how national and industry regulators will interpret this recommendation.

Practical Steps for Compliance and the Cost to Companies of Scaling AI Safely

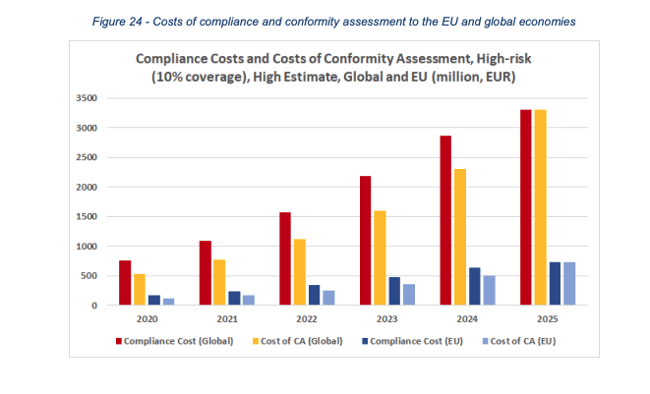

Within this evolving regulatory landscape, the global AI Governance market size is predicted to grow from $50 million in 2020 to over $1 billion by 2026. The EU’s impact assessment estimates the cost of compliance, including conformity assessments, at EUR 750 million by 2025.

Source: Study to Support an Impact Assessment of Regulatory Requirements for Artificial Intelligence in Europe, p. 156

The Dutch market’s response to the benefits scandal has been swift and decisive. Robust AI Governance can only be implemented with buy-in from senior stakeholders, and companies may need to reallocate or add staff to support new oversight structures that govern how AI systems are developed, deployed, and monitored. By starting these initiatives early, companies may be better able to identify and prepare the relevant teams to meet the new requirements in-house, reducing external costs.

Another key step will be to build, and share across teams, an understanding of how the compliance steps for the relevant risk category will affect established workflows for developing and monitoring AI systems. The draft EU AI Act’s requirements for high-risk AI systems are not linear: they embed checks and reporting throughout the development lifecycle. Iterative or CI/CD-based development workflows should therefore be supported by tooling that maintains an auditable log of decisions across the lifecycle and enables effective monitoring once the system is deployed.
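One way to make such a log tamper-evident is to chain entries by hash, so that altering any past decision invalidates the entries recorded after it. The sketch below assumes this design; the draft does not prescribe hash chaining, and the `AuditLog` class, its fields, the stage names, and the example entries are hypothetical. It uses only the Python standard library.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only, hash-chained log of lifecycle decisions (hypothetical design).

    Each entry stores the SHA-256 hash of the previous entry, so editing an
    earlier record makes verify() fail.
    """

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, stage: str, decision: str, actor: str, **details) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "stage": stage,        # e.g. "data", "training", "deployment", "monitoring"
            "decision": decision,
            "actor": actor,        # who signed off, keeping human oversight visible
            "details": details,    # must be JSON-serializable
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash a canonical JSON encoding of every field except the hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain; return False if any entry was altered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.record("data", "excluded nationality as a model feature",
               actor="data-steward", ticket="GOV-12")
    log.record("deployment", "approved v1.3 after bias review",
               actor="model-risk-committee", model_version="1.3")
    print(log.verify())  # True
    log.entries[0]["decision"] = "kept nationality"  # simulated tampering
    print(log.verify())  # False
```

In practice the entries would be written to durable, access-controlled storage and fed from CI/CD hooks and monitoring jobs; an in-memory list keeps the example short.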

How Do You Estimate Your Own Cost of Compliance?

The workshop in Amsterdam offered a helpful window into how local context, whatever an organization’s industry, can motivate interest in and commitment to AI Governance. If your organization hasn’t yet started planning for and adapting to more robust governance practices, don’t worry: you are not alone, and there is still time. The advice to “never let a good crisis go to waste” is often attributed to Winston Churchill during his work to establish the United Nations. No crisis is ever a good one, but the real tragedy would be to let such crises keep happening. It is in our interest to build effective AI Governance structures now; the risks to profits, to reputation, and to society are too great not to.