As organizations scale their data science, machine learning (ML), and artificial intelligence (AI) efforts, they will inevitably have to decide when to prioritize white-box models over black-box ones (there is a time and a place for each) and how to build in explainability along the way.

Here’s why black-box models can be a challenge for business (and a legitimate concern):

- They lack explainability, both internally within an organization and externally for customers and regulators seeking explanations of, and insight into, the decision-making process.

- They create a “comprehension debt” that must be repaid over time through difficulty sustaining performance, unexpected effects like people gaming the system, or potential unfairness.

- They can lead to technical debt over time: because the model may rely on spurious, non-causal correlations that quickly vanish, it must be reassessed and retrained more frequently as data drifts (which, in turn, drives up operating expenses).

- These are problems for all types of models (not just black-box ones), but issues like spurious correlations are easier to detect with a white-box model.
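
One practical way to catch the drift described above before it quietly erodes performance is to compare each feature's distribution at training time against what the model sees in production. The sketch below uses the Population Stability Index (PSI), a common drift metric we picked for illustration; it is not presented as how Dataiku or any particular tool measures drift. It assumes numeric feature values held in plain Python lists, and the function name, bin count, and sample data are our own.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Population Stability Index between a reference sample and a new sample.

    Bin edges are equal-width over the range of the reference ("expected")
    sample; new values outside that range are clipped into the edge bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant column

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clip into [0, bins - 1]
        # Floor each share so empty bins do not produce log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    ref, new = bin_shares(expected), bin_shares(actual)
    return sum((n - r) * math.log(n / r) for r, n in zip(ref, new))


# Hypothetical feature values at training time and in production.
training = [x / 100 for x in range(1000)]  # uniform over 0.00 .. 9.99
production = [x + 3 for x in training]     # same shape, shifted upward

print(population_stability_index(training, training))    # 0.0: no drift
print(population_stability_index(training, production))  # large value: drift
```

A commonly cited rule of thumb treats PSI above roughly 0.25 as a significant shift, but thresholds like this are worth calibrating per feature rather than taking as given.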

That said, while black-box models lack explainability, they can still be held to the same rigor and standards as any other AI model, especially with the robust set of tools for Responsible AI and explainability that Dataiku incorporates across the board. Practitioners can also extend the explainability of their white-box models with the right tools in Dataiku and, in turn, develop them responsibly. This blog post covers what explainable AI is (including helpful language for non-technical experts), why it's especially important with the rise of Generative AI, and how it can be achieved with Dataiku.

Implementing an Explainable AI Strategy

Creating transparent, accessible AI systems is important not only for those behind the curtain developing the model (such as data scientists), but also for end users not involved in model development (such as lines of business who need to understand the output and communicate what it means to other stakeholders in the company).

Don’t fret: there are ways to build models that the business can trust and rely on. Enter explainable AI. Over the last decade, the rise of complex models has led organizations to realize that AI they don’t understand can have real-world consequences. That realization, in turn, spurred many breakthroughs in the space, conferences dedicated to the topic, discussion among leaders in the field, and a need for tooling to understand and implement explainable AI at scale.

Explainable AI, sometimes abbreviated as XAI, is a set of capabilities and methods used to describe an AI model, its anticipated impact, and its potential biases. Its objective is to explain how black-box models reach their decisions and to make those models as interpretable as possible.

There are two main elements of explainability:

- Interpretability: Why did the model behave as it did?

- Transparency: How does the model work?

Ideally, every model, no matter the industry or use case, would be both interpretable and transparent, so that teams can ultimately trust the systems these models power, mitigating risk and enabling everyone (not just those who built the models) to understand how decisions were made. Examples where interpretable and transparent models are especially useful include:

- Critical decisions related to individuals or groups of people (e.g., healthcare, criminal justice, financial services)

- Seldom made or non-routine decisions (e.g., M&A work)

- Situations where root cause may be of more interest than outcomes (e.g., predictive maintenance and equipment failure, denied claims or applications)

Why Explainable AI Matters More in the Age of Generative AI

As Generative AI continues to evolve and proliferate across various domains, the need for explainability becomes even more pronounced to ensure responsible and ethical deployment of these powerful technologies.

- Understanding the Output: Generative AI models, especially in domains like natural language processing or image generation, produce outputs that can be complex and nuanced. Understanding why a model generates a particular output is essential for trust and reliability, especially in critical applications like healthcare or finance.

- Detecting Bias and Fairness Issues: Generative AI models can inadvertently learn and propagate biases present in the training data. Explainability mechanisms help in identifying these biases and ensuring fairness and equity in the generated outputs.

- Debugging and Improvement: Explainable AI tools aid in debugging and improving Generative AI models by providing insights into how the model makes decisions or generates outputs. This feedback loop is crucial for refining and enhancing the performance of the model over time.

- Regulatory Compliance: With increasing regulations around AI ethics and transparency, explainability is becoming a legal requirement in many contexts. Generative AI systems must comply with these requirements to ensure accountability and mitigate potential risks.

- User Trust and Adoption: Users are more likely to trust and adopt Generative AI systems if they can understand the reasoning behind the model's decisions. Explainable AI provides transparency and helps build trust between human users and AI systems.

- Ethical Considerations: Understanding the inner workings of Generative AI models is essential for addressing ethical concerns, such as the potential misuse of AI-generated content or the impact on privacy and security.

Explainable AI with Dataiku

It’s critical for organizations to understand how their models make decisions because:

- It gives them the opportunity to further refine and improve their analyses.

- It makes it easier to explain to non-practitioners how the model uses the data to make decisions.

- Explainability can help practitioners avoid negative or unforeseen consequences of their models.

Dataiku helps organizations accomplish all three of these objectives by striking the optimal balance between interpretability and model performance. Dataiku’s Everyday AI platform is built for best practice methodology and governance throughout a model’s entire lifecycle. Check out the video below for a seven-minute summary of how teams can implement responsible, explainable AI systems with Dataiku:

So, how exactly does Dataiku help builders and project stakeholders stay aligned to their organizational values for Responsible AI?

Data Transparency

Responsible AI practices include inspecting your data set for potential flaws and biases. Dataiku’s built-in tools for data quality and exploratory data analysis help you quickly profile and understand key aspects of your data. A smart assistant is available to supercharge your exploration, suggesting a variety of statistical tests and visualizations that help you identify and analyze relationships between columns. Specialized GDPR options mean you can also document and track where Personally Identifiable Information (PII) is used, yielding better oversight of sensitive data points from the get-go.
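
To make the kind of profiling described above concrete, here is a minimal, generic sketch in plain Python: missing-value counts per column, a Pearson correlation between two numeric columns, and a deliberately naive check that flags columns whose values all look like email addresses. It is not Dataiku's API; the row-of-dicts data layout, the function names, and the email-regex heuristic are all our assumptions, and real PII detection requires far more than one pattern.

```python
import math
import re


def missing_counts(rows):
    """Count missing (None) values per column across a list of row dicts."""
    counts = {}
    for row in rows:
        for col, value in row.items():
            counts[col] = counts.get(col, 0) + int(value is None)
    return counts


def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return math.nan  # undefined when either column is constant
    return cov / (sx * sy)


EMAIL = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")  # naive, illustrative pattern


def flag_possible_pii(rows):
    """Flag columns whose non-missing values all look like email addresses."""
    flagged = set()
    for col in {c for row in rows for c in row}:
        values = [row.get(col) for row in rows if row.get(col) is not None]
        if values and all(isinstance(v, str) and EMAIL.fullmatch(v) for v in values):
            flagged.add(col)
    return flagged


# Hypothetical toy data set.
rows = [
    {"age": 25, "income": 40000, "email": "ana@example.com"},
    {"age": 35, "income": 55000, "email": "ben@example.com"},
    {"age": 45, "income": 70000, "email": None},
    {"age": 55, "income": None, "email": "dee@example.com"},
]
complete = [r for r in rows if r["income"] is not None]
ages = [r["age"] for r in complete]
incomes = [r["income"] for r in complete]

print(missing_counts(rows))  # {'age': 0, 'income': 1, 'email': 1}
print(pearson(ages, incomes))
print(flag_possible_pii(rows))
```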

Model Explainability

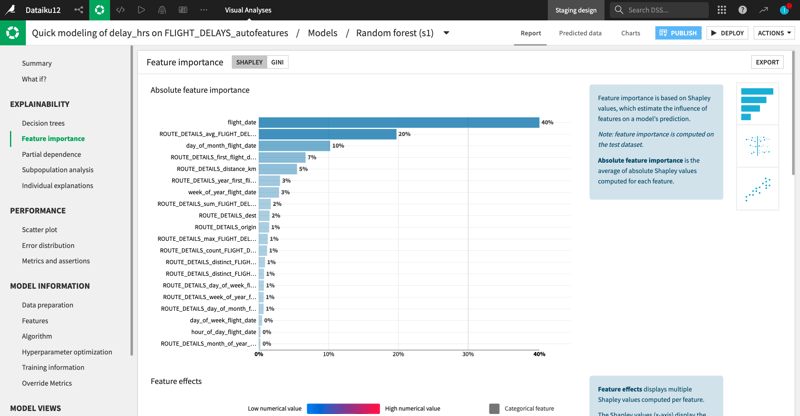

For projects with a modeling component, Dataiku’s Visual ML framework offers a variety of tools to fine-tune and evaluate ML models and confirm they meet expectations for performance, fairness, and reliability.

For example, to incorporate domain knowledge into model experiments in the form of common-sense checks, subject matter experts can add model assertions: informed statements about how we expect the model to behave for certain cases.
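
The idea behind a model assertion can be sketched in a few lines: pick the rows that match an expert's condition, then check what share of them receive the expected prediction. This is a generic illustration under our own assumptions, not Dataiku's implementation; the `ModelAssertion` class, the toy model, the share threshold, and the data are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional

Row = Dict[str, Any]


@dataclass
class ModelAssertion:
    """Hypothetical encoding of an expert's expectation: among rows where
    `condition` holds, at least `min_share` should be predicted `expected`."""
    name: str
    condition: Callable[[Row], bool]
    expected: Any
    min_share: float


@dataclass
class AssertionResult:
    name: str
    n_matching: int
    share: float
    passed: Optional[bool]  # None when no rows match, so nothing was checked


def check_assertion(assertion, rows, predict):
    matching = [r for r in rows if assertion.condition(r)]
    if not matching:
        return AssertionResult(assertion.name, 0, 0.0, None)
    hits = sum(predict(r) == assertion.expected for r in matching)
    share = hits / len(matching)
    return AssertionResult(assertion.name, len(matching), share, share >= assertion.min_share)


# Toy stand-in for a trained model: reject only when the debt ratio is high.
def toy_model(row):
    return "reject" if row["debt_ratio"] > 0.6 else "approve"


no_income_rejected = ModelAssertion(
    name="Applicants with no income are rejected",
    condition=lambda r: r["income"] == 0,
    expected="reject",
    min_share=0.9,
)

test_rows = [
    {"income": 0, "debt_ratio": 0.9},
    {"income": 0, "debt_ratio": 0.2},
    {"income": 50000, "debt_ratio": 0.3},
    {"income": 0, "debt_ratio": 0.7},
]

result = check_assertion(no_income_rejected, test_rows, toy_model)
print(result.passed, result.n_matching, round(result.share, 2))  # False 3 0.67
```

Here the toy model approves one applicant with no income because that applicant's debt ratio is low, so the assertion fails: exactly the kind of contradiction between expectation and behavior that is worth investigating.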

A full panel of diagnostics helps us perform other sanity checks by automatically raising warnings if issues such as overfitting or data leakage are detected. Later, when evaluating the trained model, test results that contradict our assertions, or diagnostics that trigger warnings, are instant signals that we should investigate further and possibly rethink our assumptions.
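
As a rough illustration of what such automated checks might look at, the sketch below raises a warning when the gap between train and test scores is large (a classic overfitting signal) and when a single feature is almost perfectly correlated with the target (a frequent symptom of leakage). The function name and both thresholds are illustrative assumptions, not the rules Dataiku's diagnostics actually apply.

```python
def run_diagnostics(train_score, test_score, feature_target_corr,
                    max_gap=0.1, leakage_corr=0.95):
    """Return human-readable warnings for two simple sanity checks.

    Both thresholds are illustrative defaults, not recommended values.
    """
    issues = []
    # A large train/test gap suggests the model memorized the training data.
    if train_score - test_score > max_gap:
        issues.append(
            f"Possible overfitting: train score {train_score:.2f} "
            f"vs. test score {test_score:.2f}"
        )
    # A feature that almost perfectly tracks the target may be leaking it.
    for feature, corr in feature_target_corr.items():
        if abs(corr) >= leakage_corr:
            issues.append(
                f"Possible data leakage: '{feature}' has correlation "
                f"{corr:.2f} with the target"
            )
    return issues


# Hypothetical evaluation results for a churn model.
issues = run_diagnostics(
    train_score=0.99,
    test_score=0.74,
    feature_target_corr={"tenure_months": -0.35, "cancellation_date_set": 0.98},
)
for issue in issues:
    print(issue)
```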

Model Error Analysis is another view that delivers insight into specific cohorts for which a model may not be performing well, enabling us to improve the model’s robustness and reliability before deployment. Speaking of cohorts, subpopulation analysis is a powerful tool for ensuring models perform consistently well across different subgroups and for investigating potential bias. For example, after examining performance metrics broken out by race, we might choose to sample our data differently or retrain our model with different parameters to ensure more even performance across racial groups.
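
At its core, subpopulation analysis means computing the same metric separately for each subgroup and comparing the results. The minimal sketch below does this for accuracy, then reports the weakest cohort and the gap between the best and worst groups. It is a generic illustration rather than Dataiku's implementation; the group labels and predictions are made up.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each subgroup label."""
    correct, total = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


# Hypothetical test-set labels, predictions, and subgroup membership.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 1, 0, 1, 0]
groups = ["group_a"] * 4 + ["group_b"] * 4

per_group = accuracy_by_group(y_true, y_pred, groups)
weakest = min(per_group, key=per_group.get)
gap = max(per_group.values()) - min(per_group.values())
print(per_group)     # {'group_a': 1.0, 'group_b': 0.25}
print(weakest, gap)  # group_b 0.75
```

A gap this large would be a cue to look more closely at how `group_b` is represented in the training data before deploying.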

Partial dependence plots help model creators understand complex models visually by surfacing relationships between a feature and the target, so business teams can better understand how a certain input influences the prediction.
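
The computation behind a partial dependence plot is simple to sketch: for each value on a grid, set the chosen feature to that value in every row, keep all other features as observed, and average the model's predictions. The toy scoring function and patient rows below are hypothetical stand-ins for a real model and data set.

```python
def partial_dependence(predict, rows, feature, grid):
    """Average prediction when `feature` is forced to each grid value in every row.

    All other features keep their observed values, so the curve shows how
    the average prediction changes as this one feature changes.
    """
    curve = []
    for value in grid:
        preds = [predict({**row, feature: value}) for row in rows]
        curve.append((value, sum(preds) / len(preds)))
    return curve


# Toy scoring function standing in for a complex model.
def risk_score(row):
    return 0.5 * row["age"] + (10 if row["smoker"] else 0)


patients = [{"age": 30, "smoker": True}, {"age": 50, "smoker": False}]
print(partial_dependence(risk_score, patients, "age", [20, 40, 60]))
# [(20, 15.0), (40, 25.0), (60, 35.0)]
```

Plotting these (value, average prediction) pairs gives the partial dependence curve; with a real model, the curve can reveal non-linear or threshold effects that are hard to see from raw coefficients.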

Local Explainability

For AI consumers who are using model outputs to inform business decisions, it’s often useful to know why the model predicted a certain outcome for a given case. Row-level, individual explanations are extremely helpful for understanding the most influential features for a specific prediction, or for records with extreme probabilities. For business users wishing to understand how changing a record’s input values would affect the predicted outcome, Dataiku’s interactive what-if analysis with outcome optimization is a powerful tool.
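
Dataiku computes individual explanations within the platform; the sketch below only illustrates the underlying idea in the simplest possible case, a linear model, where each feature's contribution relative to a baseline row can be computed exactly and the contributions add up to the difference in scores. It also shows a bare-bones what-if check: change one input and re-score. The weights, features, baseline, and function names are all hypothetical.

```python
# Hypothetical linear credit-scoring model: weights and bias are made up.
WEIGHTS = {"income_k": 0.25, "debt_ratio": -4.0, "late_payments": -1.0}
BIAS = 1.0


def score(row):
    return BIAS + sum(w * row[f] for f, w in WEIGHTS.items())


def explain(row, baseline):
    """Per-feature contributions relative to a baseline row, largest first.

    For a linear model these sum exactly to score(row) - score(baseline).
    """
    contributions = {f: w * (row[f] - baseline[f]) for f, w in WEIGHTS.items()}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


def what_if(row, **changes):
    """Score a copy of `row` with some input values changed."""
    return score({**row, **changes})


# Hypothetical reference row (for example, average training values) and applicant.
baseline = {"income_k": 60, "debt_ratio": 0.25, "late_payments": 1}
applicant = {"income_k": 40, "debt_ratio": 0.75, "late_payments": 3}

print(explain(applicant, baseline))
# [('income_k', -5.0), ('debt_ratio', -2.0), ('late_payments', -2.0)]
print(score(applicant), what_if(applicant, late_payments=0))  # 5.0 8.0
```

For non-linear models, contributions are no longer this simple to read off, which is why dedicated explanation methods exist; the linear case just makes the "most influential features" idea easy to see.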

Project Explainability

Finally, for overall project transparency and visibility, zooming back out, you can see how Dataiku’s Flow provides a clear visual representation of all the project logic. Wikis and automated documentation for models and the Flow are other ways builders, reviewers, and AI consumers alike can explain and understand the decisions taken at each stage of the pipeline.

Recap

As you can see, no matter which model you select, Dataiku has capabilities that enable you to build it following transparent and explainable best practices. The platform, which also has robust AI Governance and MLOps features, supports teams in developing responsible and accountable AI systems.