Clear gaps and persistent friction points hamper an organization’s ability to scale and deliver value quickly. These include tool and model complexity, a lack of automated workflows, and a monolithic approach. Organizations need a modern approach to scale and regain control over the proliferation of their models and analytics. This post details how Dataiku helps IT leaders uniquely solve the most pressing MLOps challenges.

1. Build a Unified Loop

When we discuss platforms with decision makers, we often hear questions that ultimately tie back to MLOps challenges, such as:

- In a multi-profile project, how can I keep every team member working efficiently, without each expert waiting for the previous one to deliver their part?

- How can I avoid cumbersome integrations between specialized tools tailor-made for each team?

- How can I innovate without throwing away already developed models?

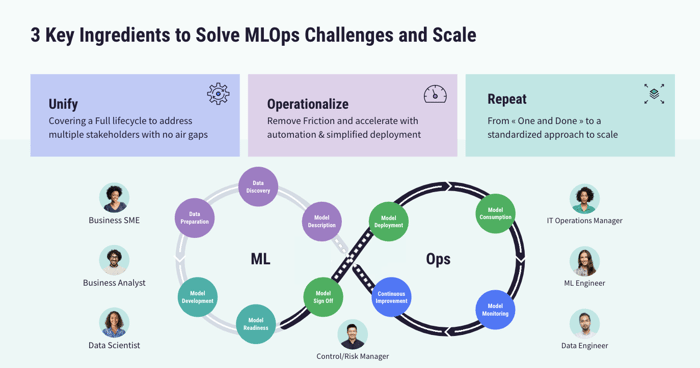

AI and analytics projects are iterative by nature, meaning they require constant refinement and improvement over time. Without a unified approach to MLOps, model performance can degrade and the benefits of AI may be lost. Successful MLOps unifies the people and steps in the AI value chain, creating a continuous loop that accounts for the iterative nature of AI projects. From data scientists and engineers to business analysts and IT operations teams, everyone needs to be on the same page to keep the workflow smooth and efficient.

Democratization is key to a platform's success, and it must be considered at the start of the investment, not afterward. What would be the point of building a scalable, future-proof platform if it is not ready to be used by all of the company's employees, regardless of their level of expertise?

Dataiku offers an end-to-end environment so teams can do everything from data discovery to model monitoring in a single platform. It also provides a common grammar for everyone from advanced data experts like data scientists to low- or no-code domain experts, bringing together technical and business teams to collaborate on projects.

2. Operationalize the Loop

At this stage, the MLOps questions we hear are typically: “How can I avoid re-coding the entire project to get it into production?” or “How can I automate the delivery and quality of projects in production?”

The continuous loop is a crucial element in building and deploying machine learning (ML) models. However, it is not enough on its own. While a continuous loop helps streamline the process, it can still be run manually, which introduces inconsistencies, errors, and delays. To truly respect the MLOps fundamentals of control, repeatability, and speed, the continuous loop needs to be operationalized.

This involves automating every step of the process and tracking each one. By doing so, you can significantly reduce the risk of errors, increase repeatability, and speed up the process. Operationalizing the loop is a critical step in ensuring that your ML pipeline is reliable and efficient.
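To make "automate every step and track each one" concrete, here is a minimal, tool-agnostic sketch in plain Python. It is not Dataiku's API: the `Pipeline` and `StepRecord` classes and the `prepare`/`train`/`evaluate` steps are hypothetical illustrations. Each step runs in order without manual hand-offs, and every run is recorded with its status and duration, so a failure is visible and the whole sequence can be replayed identically.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class StepRecord:
    """Tracking entry for one executed step (hypothetical structure)."""
    name: str
    status: str
    seconds: float
    error: str = ""


@dataclass
class Pipeline:
    """Runs named steps in order and records the outcome of each one."""
    steps: list[tuple[str, Callable[[dict], dict]]]
    history: list[StepRecord] = field(default_factory=list)

    def run(self, context: dict) -> dict:
        for name, step in self.steps:
            start = time.perf_counter()
            try:
                context = step(context)
            except Exception as exc:
                # Record the failure before re-raising so it is never silent.
                self.history.append(
                    StepRecord(name, "failed", time.perf_counter() - start, repr(exc))
                )
                raise
            self.history.append(StepRecord(name, "succeeded", time.perf_counter() - start))
        return context


# Toy steps standing in for data prep, training, and evaluation.
def prepare(ctx: dict) -> dict:
    return {**ctx, "clean": [x for x in ctx["raw"] if x is not None]}


def train(ctx: dict) -> dict:
    # A "model" that simply predicts the mean of the cleaned data.
    return {**ctx, "model": sum(ctx["clean"]) / len(ctx["clean"])}


def evaluate(ctx: dict) -> dict:
    errors = [abs(x - ctx["model"]) for x in ctx["clean"]]
    return {**ctx, "mae": sum(errors) / len(errors)}


pipeline = Pipeline([("prepare", prepare), ("train", train), ("evaluate", evaluate)])
result = pipeline.run({"raw": [1.0, None, 2.0, 3.0]})
print(result["model"], result["mae"])
print([(r.name, r.status) for r in pipeline.history])
```

In a platform, the scheduler, storage, and tracking are handled for you; the point of the sketch is only that automation and tracking go together, since an automated step that leaves no record cannot be audited or reliably repeated.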

As you can see in the video below (which summarizes this post in three minutes!), what you design is what you operationalize. Dataiku offers seamless automation with the Flow, from data preparation to retraining. Teams can operationalize safely with guardrails, using built-in testing and model sign-off to enforce compliance. Last but not least, production can be supervised with monitoring and alerting.
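The guardrail idea of built-in testing plus sign-off can be illustrated as a deployment gate. The sketch below is generic Python, not Dataiku's implementation: the `Candidate` class, the `MIN_THRESHOLDS` values, and the `check_deployable`/`deploy` functions are hypothetical. A model is deployed only if every required metric meets its threshold and an approver has been recorded; otherwise deployment is refused with the reasons listed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    """A model version waiting to be deployed (hypothetical structure)."""
    name: str
    metrics: dict
    approved_by: Optional[str] = None  # set when a reviewer signs off


# Hypothetical guardrail thresholds: metric name -> minimum acceptable value.
MIN_THRESHOLDS = {"auc": 0.75, "recall": 0.6}


def check_deployable(candidate: Candidate, thresholds: dict) -> list:
    """Return the reasons blocking deployment; an empty list means deployable."""
    problems = []
    for metric, minimum in thresholds.items():
        value = candidate.metrics.get(metric)
        if value is None:
            problems.append(f"missing metric: {metric}")
        elif value < minimum:
            problems.append(f"{metric}={value} below {minimum}")
    if candidate.approved_by is None:
        problems.append("no sign-off recorded")
    return problems


def deploy(candidate: Candidate, thresholds: dict = MIN_THRESHOLDS) -> str:
    """Refuse deployment unless all automated checks pass and sign-off exists."""
    problems = check_deployable(candidate, thresholds)
    if problems:
        raise PermissionError("; ".join(problems))
    return f"{candidate.name} deployed (approved by {candidate.approved_by})"


model = Candidate("churn-v2", {"auc": 0.81, "recall": 0.55})
print(check_deployable(model, MIN_THRESHOLDS))
# ['recall=0.55 below 0.6', 'no sign-off recorded']

# After retraining and review, the same gate lets the model through.
model.metrics["recall"] = 0.63
model.approved_by = "risk-team"
print(deploy(model))
# churn-v2 deployed (approved by risk-team)
```

Keeping the automated checks and the human sign-off in a single gate means neither can be skipped on the way to production.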

With MLOps in Dataiku, teams can seamlessly deploy, monitor, and manage ML models and projects in production.

3. Repeat the Loop to Scale

In the first two steps, we created and operationalized a virtuous loop capable of iterating through the creation and deployment of an AI application safely and efficiently. However, our business requires thousands of AI applications, so we need to be able to repeat this process many times.

To achieve this, Dataiku allows us to materialize the loop in the Govern module using a blueprint. This blueprint serves as a template for other projects, enabling us to safely accelerate the number of projects we can deliver.

Within each project, we assemble a range of artifacts, including datasets, models, code notebooks, and plugins, in the Flow. These artifacts are cataloged in the platform and can be searched and reused in other projects. The more components that become available on the platform, the easier and faster it becomes to build new projects.

With these capabilities, we can repeat the loop efficiently and safely across many projects, while cataloging and reuse keep each new project faster to build than the last. Finally, executives can oversee value across all business initiatives via a model registry and related analytics projects.

Unify, Operationalize, Repeat

The road to sound MLOps requires ensuring deployed models are well maintained, perform as expected, and do not adversely affect the business. IT leaders can use Dataiku’s future-proof platform to:

- Cover the full project lifecycle (from data preparation to model performance and monitoring) while addressing multiple stakeholders

- Remove friction and accelerate with automation and simplified deployment

- Move from a “one and done” setup to a standardized approach to scale