As with all other key aspects of the economy, the global health crisis we are going through is having a deep impact on AI developments in organizations. In an environment compatible with remote work, COVID-19 acts as a catalyst for proper data usage: many companies need to develop a strong, data-supported understanding of the new normal and react accordingly. Yet this shouldn’t overshadow other structural trends happening in the background, starting with the emergence of a new regulatory framework that will deeply reshape how AI is scaled.

This blog post is the first in a series on these directives, regulations, and guidelines, which are on the verge of being enforced, and on their key lessons for AI and analytics leads. Today, we are zooming in on the guiding principles document recently released by the French Financial Services Regulator (ACPR*).

*ACPR: the “Autorité de Contrôle Prudentiel et de Résolution” (Prudential Supervision and Resolution Authority) is the independent administrative authority responsible for ensuring financial stability and consumer protection in France. It regulates all banks (French and foreign) active in the French market.

With AI Regulation Around the Corner, What Can We Expect?

After two years of consultations around guidelines and discussion papers on regulating AI, the European Commission is finally moving forward with a regulatory proposal expected at the end of Q1 2021. As explained in the white paper from February 2020, all member states will be expected to align their own national regulations with the proposal once it is validated. Therefore, while the proposal is being drafted, national regulators across the European Union (like ACPR) are working on scoping these topics for their own perimeters.

In June 2020, the French Financial Services Regulator (ACPR) published a unique reflection document titled “Governance of Artificial Intelligence Algorithms in the Financial Sector.” From explainability standards to governance protocols, the 80-page document provides concrete insights into what will be asked of banking and insurance organizations operating in France in terms of compliance. Let’s dissect them together!

The ACPR Paper Suggests a Radically Different Approach to Governing AI

ACPR’s publication is the result of two years of research with French financial actors and their use cases (e.g. Anti-Money Laundering/Combating the Financing of Terrorism [AML/CFT], credit scoring, etc.). It is divided into two main chapters: the assessment of AI algorithms on the one hand, and their governance on the other. Simply put, how can we best assess AI risks and leverage the assessment outputs to address these risks?

I’ll give you the answer right away: AI risks should no longer be understood as standalone technical anomalies but as core business issues. Governing AI risks thus requires an update to the traditional risk and compliance corporate expertise.

If you remember three things from the ACPR paper, keep in mind the ones below:

1. Companies need to build capabilities for AI regulation now by assessing and managing the risks of their AI projects.

2. AI risk assessment is the translation of core principles (e.g., explainability) into risk metrics and scores. These metrics cover not only technical elements of the AI lifecycle but also business and organizational elements.

3. AI risk management, or governance, means using the risk assessment outputs to address the risks. Before you can do that, however, you need established roles and responsibilities, a risk framework, and governance protocols.

Let’s Review the Assessment Piece First

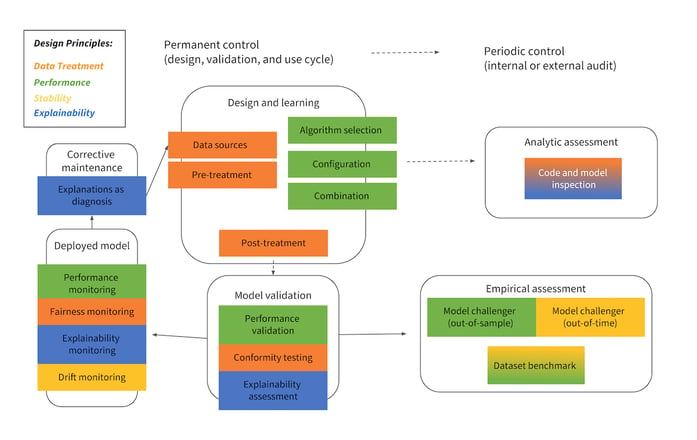

Like the European approaches to building a regulatory framework, the paper starts by recalling that any assessment must be driven by principles. The four main risk criteria it lists are:

- Data management (ensuring the quality of the data)

- Performance (ensuring the quality of the model)

- Stability (ensuring the consistency of the model)

- Explainability (ensuring the transparency of the model and its outputs)

While the first three criteria make sure the assessment is reliable, the last criterion makes sure anyone can conduct and/or understand the assessment. This marks the shift from a purely technical approach to a business approach to AI governance.
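As a minimal sketch of how the four criteria above could be turned into risk scores, the Python snippet below records one score per criterion for a project and flags the criteria that need attention. The `Assessment` class, the 0-to-1 scale, the worst-case aggregation, and the 0.5 threshold are all assumptions made for illustration; none of them comes from the ACPR paper.

```python
from dataclasses import dataclass

# The four criteria named in the ACPR paper. The 0-1 risk scale and the
# threshold used below are illustrative assumptions, not values from the paper.
CRITERIA = ("data_management", "performance", "stability", "explainability")


@dataclass(frozen=True)
class Assessment:
    """Hypothetical record: one risk score per criterion (0 = low risk, 1 = high risk)."""
    project: str
    scores: dict

    def __post_init__(self):
        missing = [c for c in CRITERIA if c not in self.scores]
        if missing:
            raise ValueError(f"missing criteria: {missing}")
        for name, value in self.scores.items():
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"score for {name} must be between 0 and 1")


def overall_risk(assessment):
    """Worst-case aggregation (an assumption): a project is as risky as its weakest criterion."""
    return max(assessment.scores[c] for c in CRITERIA)


def flagged_criteria(assessment, threshold=0.5):
    """Return the criteria whose risk score reaches the (hypothetical) threshold."""
    return [c for c in CRITERIA if assessment.scores[c] >= threshold]


credit_scoring = Assessment(
    project="credit_scoring",
    scores={
        "data_management": 0.2,
        "performance": 0.3,
        "stability": 0.6,
        "explainability": 0.8,
    },
)
print(overall_risk(credit_scoring))
print(flagged_criteria(credit_scoring))
```

Taking the maximum rather than an average is a deliberate choice in this sketch: a strong performance score should not hide a weak explainability score.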

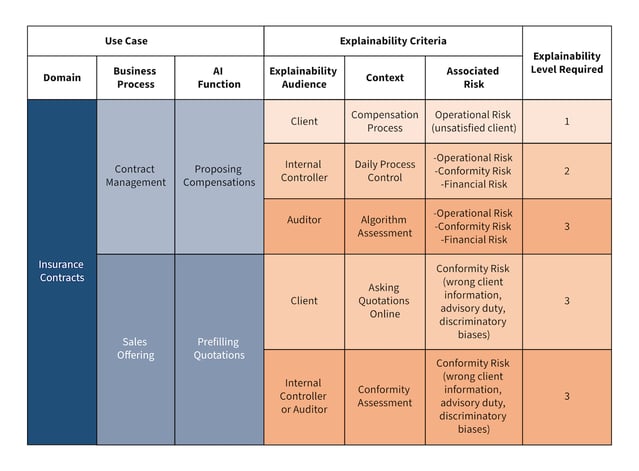

That was a first key takeaway, but there’s more: the last risk criterion, explainability, is not a binary concept (is your model explainable or not?) but a continuous one (i.e., to what extent is your model explainable?). Moreover, explainability depends on external factors such as the audience (e.g., it cannot be delivered the same way to auditors, internal controllers, and consumers) and the risk (e.g., the severity and likelihood of the harm caused by a poor explanation differ from one risk to another). In a nutshell, explainability is not a one-size-fits-all concept, and it takes in-depth work to understand it and operationalize it successfully for business and compliance value.

Figure 1. Table from the ACPR paper listing the different levels of explainability for the key external factors (i.e. the audience and the business risk). The translation to English was done by Dataiku.
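To make the idea of audience-dependent and risk-dependent explainability concrete, here is a rough Python sketch of a lookup that returns a required explainability level. The four-step `Level` scale, the audience baselines, and the risk uplift are placeholders invented for this example. They do not reproduce the table from the ACPR paper.

```python
from enum import IntEnum


class Level(IntEnum):
    """Ordered explainability levels; the four-step scale is an assumption of this sketch."""
    LEVEL_1 = 1
    LEVEL_2 = 2
    LEVEL_3 = 3
    LEVEL_4 = 4


# Hypothetical baselines per audience and uplift per business risk.
AUDIENCE_BASELINE = {
    "consumer": Level.LEVEL_1,
    "internal_control": Level.LEVEL_2,
    "auditor": Level.LEVEL_3,
}
RISK_UPLIFT = {"low": 0, "high": 1}


def required_level(audience, risk):
    """Combine audience and risk into a required level, capped at the top of the scale."""
    base = AUDIENCE_BASELINE[audience]
    return Level(min(base + RISK_UPLIFT[risk], Level.LEVEL_4))


def meets_requirement(delivered, audience, risk):
    """True if the explanation a model can deliver is at least the required level."""
    return delivered >= required_level(audience, risk)


print(required_level("consumer", "low").name)
print(meets_requirement(Level.LEVEL_2, "internal_control", "high"))
```

The point of the sketch is that the same model can pass for one audience and fail for another, which is why explainability has to be assessed per use case rather than once per model.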

Moving Forward to the Governance Piece

Unlike the European approaches, the paper tries to tie the assessment to the governance in a very concrete manner to encourage organizations to start updating their risk approaches now:

- First, by formalizing the roles, responsibilities, and means to carry them out. Who is responsible (and qualified) to assess AI and develop the governance protocols to address AI risks? The ACPR paper suggests it is the role of internal control, and the governance protocols should have at least three different control levels.

- Second, by updating the risk framework regularly to enable a coherent and consistent view of risks across the organization. Once the risk framework has been set, the ACPR suggests building appropriate training to ensure the risk analysis is cross-functional and forward-looking.

- Third, by developing and testing an audit methodology. This is a fundamental element of any future AI regulation. The ACPR suggests taking into account the development context of the algorithm as well as of the business lines impacted.

Figure 2. Table from the ACPR paper sketching the cycle of assessment and governance for AI algorithms, with a color code for each main risk criterion. The translation to English was done by Dataiku.
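The "at least three control levels" idea can be sketched in a few lines of Python. The `GovernanceRecord` class, its field names, and the rule that a project is ready only once every level has signed off are assumptions made for illustration. The ACPR paper does not prescribe this data model.

```python
from dataclasses import dataclass, field

# The paper suggests at least three control levels; everything else here is hypothetical.
REQUIRED_CONTROL_LEVELS = 3


@dataclass
class GovernanceRecord:
    """Hypothetical per-project record of who signed off at each control level."""
    project: str
    owner: str
    sign_offs: dict = field(default_factory=dict)  # control level -> reviewer name

    def sign_off(self, level, reviewer):
        if not 1 <= level <= REQUIRED_CONTROL_LEVELS:
            raise ValueError(f"control level must be between 1 and {REQUIRED_CONTROL_LEVELS}")
        self.sign_offs[level] = reviewer

    def missing_levels(self):
        """Control levels that have not yet signed off."""
        return [lvl for lvl in range(1, REQUIRED_CONTROL_LEVELS + 1)
                if lvl not in self.sign_offs]

    def ready_for_deployment(self):
        """Assumed rule: a project is ready only when no control level is missing."""
        return not self.missing_levels()


record = GovernanceRecord(project="aml_cft_alerts", owner="data_science_team")
record.sign_off(1, "business_line_reviewer")
record.sign_off(2, "risk_and_compliance")
print(record.missing_levels())
print(record.ready_for_deployment())
```

Keeping the sign-offs as explicit data, rather than as an informal email trail, is what makes the later audit step tractable.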

What Does This Mean for Organizations Scaling AI?

The ACPR document nicely fills the applicability gap between regulator debates and organizational reality and provides quick wins for any organization wishing to accelerate its AI governance journey. Implementing the ideas outlined above can be time-consuming and resource-heavy. Building a sense of control over the risks of an increasing number of AI projects can seem like a nearly impossible task. The role of platforms like Dataiku is to make it easier.

They do so not only by centralizing data access, modeling techniques, deployment APIs, and collaborative features but also by providing strong oversight of existing AI risks and available controls. At Dataiku, we look at AI governance as an opportunity to build AI resilience by developing an operational model that lets AI grow organically, eliminates silos between teams, and gives a high-level view of what is going on.

Dataiku DSS allows users to view the projects, the models, and the resources that are being used and how. You have model audit documentation to help understand what has been deployed. Plus, there is full auditability and transferability of everything from access to data to deployment, for each and every project everyone works on.

As a supplier of an AI solution, we leave it to our customers to define and develop their own AI frameworks, but at the same time, we provide the technology and tools to govern AI and comply with upcoming regulation.