MLOps is changing, but you might be surprised to learn how. It's not about technology; it's about people. If MLOps is going to move beyond simple deployment and management of a few projects, it must evolve into a multi-persona approach that includes data scientists, engineers, and business stakeholders. When it does evolve, MLOps can finally become the lever for the industrialization of AI programs that we have all been waiting for.

2022: AI Use Cases Are Booming, More Departments Are Impacted

According to McKinsey's annual "The State of AI in 2022" survey, AI adoption has more than doubled since 2017. In 2017, 20% of respondents said their organizations had adopted AI in at least one business area; today, that figure is 50%.

A few years ago, use cases were concentrated in risk and manufacturing; they are now spreading across all business functions. Marketing and sales, product and service development, and strategy and corporate finance are among the areas now reporting significant revenue effects.

Simply put, AI initiatives are gaining traction, investments are paying off, and business departments have never been more eager to reap the benefits of AI.

MLOps: More People Than Ever Are Part of the Loop

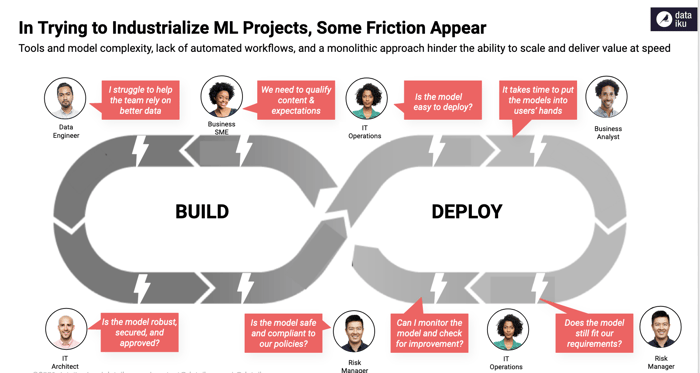

MLOps has never been more critical. Without an established approach to streamlining processes, chaos is likely to set in as the number of models grows. The main issue is the people dimension: As AI creates more value than ever across departments, more people from diverse backgrounds are now involved in operationalization cycles.

To name a few:

- Business subject matter experts (SMEs), who want to ensure that the models developed meet their initial expectations.
- Risk managers, who want to ensure that model deployments remain compliant and transparent, and operate in accordance with the rules set by the governance framework.
- Business leaders, who now want an overview of the models that impact their value chain.

Each of them, of course, brings their own challenges, expectations, and frustrations. There is a reason for that: The current MLOps approach still leaves many obstacles unresolved, and these obstacles prevent organizations from scaling AI.

This illustration sums it all up.

Figure 1: Barriers & frictions along the AI lifecycle

Feel free to watch our latest webinar, in which François Sergot discusses the frictions that MLOps players are likely to encounter.

Among the legitimate questions they all ask:

- How can we ensure that model development is based on a clear understanding of the needs and expectations of domain experts?
- When, and by what criteria, should business users be warned that a model is underperforming?
- How can we ensure that the models developed meet both the transparency and the regulatory requirements defined by the company or the sector?
- How can we make model performance visible enough to know when one model should replace another?

AI as a Team Sport: Collaboration Is Paramount to Operationalization

To make MLOps a virtuous circle, data and AI teams need to evolve their MLOps processes to welcome new players with more diverse AI expertise.

As Gartner notes in the Hype Cycle™ for Data Science and Machine Learning, 2022, “MLOps is neither technology nor procedure, as is commonly assumed by organizations; rather, it is a new way of working that entails bringing together several personas to productionize an ML workflow.”*

To get there, siloed approaches must give way to unified, collaborative initiatives in which everyone works together across the AI project lifecycle. Companies need to focus more on ease of use, automation, and collaboration if they want to scale and make MLOps the universal engine that drives the lifecycle of their AI projects.

This is critical to covering every stage of industrialization, from data discovery through model monitoring and retraining.

How Do Our Customers Scale With a Collaborative MLOps Approach?

One example of MLOps in practice comes from ZS, a management consulting and technology firm focused on transforming global healthcare and beyond. ZS partnered with a life sciences and pharmaceutical organization to help orchestrate more than 100 data science models projected to deliver $200 million in annual incremental growth.

MLOps came into the picture when Dataiku was chosen as the central platform for all model deployments. The decision was driven notably by Dataiku’s ease of use for multiple personas, from data scientists and business analysts to domain subject matter experts. Today, an ever-increasing number of models are deployed on the Dataiku platform, with added MLOps capabilities such as model health monitoring and data drift analysis, among many others.

Where Is MLOps Going in 2023?

More control, better notifications, more autonomy: Identifying bottlenecks, monitoring activities in real time, and receiving notifications and alerts throughout the AI project lifecycle are all new intelligent capabilities that will enrich MLOps and help industrialize AI at scale.
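To make the idea of performance alerts more concrete, here is a minimal Python sketch of one possible rule for warning business users about an underperforming model. It assumes a model's score (for example, accuracy) is computed per monitoring window and compared with a baseline measured at deployment. The names `PerformanceAlert` and `check_performance` and the default 5% tolerance are hypothetical illustrations, not features of Dataiku or any other platform.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PerformanceAlert:
    """One alert raised for a monitoring window (hypothetical structure)."""
    window: int
    score: float
    threshold: float
    message: str


def check_performance(window_scores: List[float], baseline: float,
                      tolerance: float = 0.05) -> List[PerformanceAlert]:
    """Flag every window whose score drops more than `tolerance` (relative) below the baseline.

    Assumption: higher scores are better and the baseline was measured at deployment time.
    """
    threshold = baseline * (1 - tolerance)  # e.g. 0.90 baseline -> 0.855 threshold
    alerts = []
    for i, score in enumerate(window_scores):
        if score < threshold:
            alerts.append(PerformanceAlert(
                window=i,
                score=score,
                threshold=threshold,
                message=f"Window {i}: score {score:.3f} is below threshold {threshold:.3f}",
            ))
    return alerts


if __name__ == "__main__":
    # Only the third window (0.84) falls below 0.90 * 0.95 = 0.855.
    for alert in check_performance([0.91, 0.90, 0.84, 0.86], baseline=0.90):
        print(alert.message)
```

A relative tolerance keeps the same rule usable across metrics on different scales; a real deployment would likely also require a minimum number of scored records per window and route each alert to the business owner of the affected model.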

Covering all MLOps activities also means seeing the big picture: As AI proliferates, maintaining a monitored, global view of all projects becomes essential. Companies that are starting to operationalize AI and analytics projects are already using data and AI control towers that let them centralize and track the performance of their models.

If MLOps is to take AI to the next level, it must keep eliminating complexity and striving for simplicity. Even though data and AI teams need all the technical capabilities to build robust pipelines, industrialization will only begin when this new way of working becomes simple enough to onboard everyone, including the domain experts who consume AI results in the business trenches.

*Gartner - Hype Cycle for Data Science and Machine Learning, 2022. 29 June 2022, Farhan Choudhary, Peter Krensky. GARTNER and Hype Cycle are registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and are used herein with permission. All rights reserved.