When it comes to safely scaling AI, Dataiku prides itself on the variety of features and tools available for users who wish to build pipelines in a governed and responsible way. This is particularly relevant as Responsible AI shifts from a “nice to have” to a key driver of AI adoption across enterprises. That shift requires a path toward education and socialization of Responsible AI, especially for models that have tangible effects on people.

When speaking with clients and Dataiku users, one of the most common questions I get is, “How do I actually do Responsible AI? What features do I use, and when?” Many organizations have high-level principles and criteria mapped out for AI Governance, but they struggle with creating best practices for data analysts and scientists to stay aligned with those principles.

Without the right education and training upfront, builders and practitioners cannot integrate Responsible AI values into their workflows, leading to either less performant systems or a lengthy review process before deployment. What these organizations need is a way to bring all data practitioners up to speed on core aspects of Responsible AI, teach them the basics of executing on principles, and make it tangible with real-world use cases.

To that end, we are excited to announce the release of our first-ever “Responsible AI in Practice” training series, available now on our Dataiku Academy. This training series is a deep dive into the basics of reliability, accountability, fairness, and transparency, from both a high-level and a hands-on perspective. The series will walk you through how to build a responsibly minded AI pipeline from start to finish, all using the variety of data discovery, modeling, and reporting features Dataiku offers. Read on for all of the details!

What Does the Dataiku Responsible AI in Practice Series Cover?

The four-part course series covers the foundations of Responsible AI, as well as how users can act on these principles while building pipelines in Dataiku. The courses are:

Session 1: Introduction to Responsible AI - Designed for practitioners, product managers, and business users, this session introduces participants to the concept of Responsible AI and how it relates to the broader areas of AI Governance and MLOps. Participants will also learn about the dangers of irresponsibly built AI, such as reinforcing existing inequalities and deployment biases. Lastly, the session briefly introduces how Responsible AI principles are put into practice during the machine learning lifecycle.

Session 2: Understanding and Measuring Data Bias - This session, oriented toward data practitioners, is the first of three hands-on tutorials on building Responsible AI pipelines. It covers the basics of bias in social and statistical settings, with an emphasis on biases coded into data. It uses a practical churn prediction example to demonstrate the impact of sensitive attributes on outcomes, as well as how to measure and report on bias in datasets. The hands-on portion includes building a wiki for documenting data bias, a dashboard of bias measurements and statistical analysis, and a review of some methods for bias mitigation in datasets.

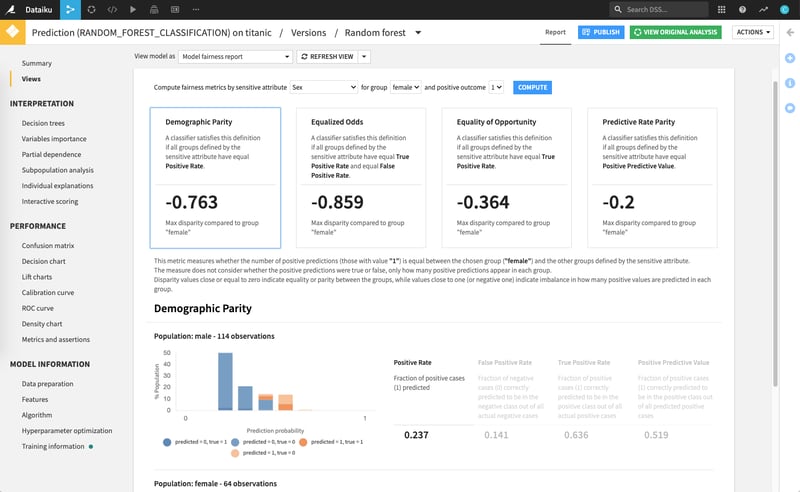
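
To make the idea of measuring bias in a dataset concrete, here is a minimal sketch in plain Python. It is not taken from the course and does not use any Dataiku API. The column names (`age_group`, `churned`), the helper functions, and the eight sample rows are all hypothetical. The sketch compares the churn rate across the values of one sensitive attribute and reports the largest gap between groups.

```python
from collections import defaultdict


def outcome_rate_by_group(rows, group_key, outcome_key):
    """Return the share of positive outcomes for each value of a sensitive attribute."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in rows:
        totals[row[group_key]] += 1
        positives[row[group_key]] += int(bool(row[outcome_key]))
    return {group: positives[group] / totals[group] for group in totals}


def rate_gap(rates):
    """Return the difference between the highest and lowest group rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical churn records, made up purely for illustration.
customers = [
    {"age_group": "under_35", "churned": 1},
    {"age_group": "under_35", "churned": 0},
    {"age_group": "under_35", "churned": 1},
    {"age_group": "under_35", "churned": 0},
    {"age_group": "35_plus", "churned": 1},
    {"age_group": "35_plus", "churned": 0},
    {"age_group": "35_plus", "churned": 0},
    {"age_group": "35_plus", "churned": 0},
]

rates = outcome_rate_by_group(customers, "age_group", "churned")
gap = rate_gap(rates)
print(rates, gap)
```

A gap like this is only a starting signal. Whether it reflects a problem in how the data was collected or a genuine difference between groups is the kind of question the documentation and statistical analysis steps are meant to address.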

Session 3: Model Fairness, Interpretability, and Reliability - Also geared toward data practitioners, this session continues to build on the churn example for a hands-on approach to responsible model building. It covers different types of fairness metrics and reviews how to determine which metric and threshold are right for a given task. Further, it highlights how to create assertions, model quality checks, and custom metrics in Dataiku to ensure reliable and robust results. Finally, it covers the difference between interpretability and explainability, as well as the methods available in Dataiku to support interpretable model results.
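
As an illustration of what a fairness metric and a threshold can look like, the sketch below computes two commonly used gaps by hand: the difference in selection rate between groups (demographic parity) and the difference in true positive rate (equal opportunity). It is plain Python rather than Dataiku functionality, and the function names, the sample labels and predictions, and the 0.2 threshold are assumptions chosen for the example, not recommendations from the course.

```python
def group_metrics(y_true, y_pred, groups):
    """Compute the selection rate and true positive rate for each group."""
    metrics = {}
    for group in sorted(set(groups)):
        idx = [i for i, value in enumerate(groups) if value == group]
        preds = [y_pred[i] for i in idx]
        # Predictions restricted to rows whose true label is positive.
        preds_on_positives = [y_pred[i] for i in idx if y_true[i] == 1]
        metrics[group] = {
            "selection_rate": sum(preds) / len(preds),
            "true_positive_rate": (
                sum(preds_on_positives) / len(preds_on_positives)
                if preds_on_positives
                else float("nan")
            ),
        }
    return metrics


def metric_gap(metrics, name):
    """Return the largest between-group difference for one metric."""
    values = [m[name] for m in metrics.values()]
    return max(values) - min(values)


# Hypothetical labels, predictions, and group membership.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

metrics = group_metrics(y_true, y_pred, groups)
parity_gap = metric_gap(metrics, "selection_rate")
opportunity_gap = metric_gap(metrics, "true_positive_rate")

MAX_GAP = 0.2  # assumed threshold; the right value depends on the task
needs_review = parity_gap > MAX_GAP or opportunity_gap > MAX_GAP
print(metrics, parity_gap, opportunity_gap, needs_review)
```

The two metrics can disagree on real data, which is why choosing the metric and the threshold is a decision to make for each use case rather than a fixed rule.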

Session 4: Reporting and Explainability - The final session, also for data practitioners, covers the different kinds of bias that arise when reporting on and deploying AI pipelines. It covers the bias lifecycle and different types of reporting for business users versus technical consumers of pipelines, and it finalizes the hands-on project and associated documentation.

Why Should You Care About Responsible AI?

Responsible AI is only one dimension of how Dataiku supports safely scaling AI with AI Governance and MLOps. We often observe that the challenge of implementing Responsible AI practices sits between data science teams and oversight or governance teams. Governance teams often have a set of criteria that need to be made tangible and measurable so data experts can align their work with those expectations.

It’s important to note that the content covered in the training series is meant to serve as a baseline for understanding Responsible AI in practice. The field of AI is constantly developing, so it’s only natural that how we approach its responsible and governed use should also evolve. We encourage our users to use this training as a starting point for deeper exploration into areas they are most interested in — whether it’s statistical methods to uncover bias in datasets, in-model fairness processing, or even customized documentation and templates for reporting purposes.

Additionally, these trainings cover Responsible AI techniques for supervised learning, which may not always apply to generative AI models. However, the core concepts around understanding bias, being transparent with AI, and checking outputs for consistency will apply no matter the underlying model in use.

With the Responsible AI in Practice training series, organizations can ensure they align the output of AI with their values by proactively building systems that are reliable, accountable, fair, transparent, and explainable. Dataiku is excited to share this training with all users, so that they can start moving from theory to practice!