We are taking a break from the AI regulation series to step back and clarify the connection between AI Governance, MLOps, and Responsible AI. Industry practitioners, researchers, and regulators alike use these three terms freely, yet their connections and boundaries are rarely clearly defined. At Dataiku, we believe that these concepts are central to scaling with AI.

AI Governance, MLOps, and Responsible AI can be perceived as adding new noise rather than clarity to the field of AI

Why Bother?

Without understanding or articulating these concepts properly, scaling with AI is nearly impossible. As organizations wish to scale with AI by multiplying (and sometimes complexifying) the number of projects going to production, they face new challenges:

1. Inefficiency - A rapidly growing stack of projects requires managing more people, more processes, and more technology. While AI talent is scarce, the technology stack is ever expanding and the processes to manage it seem to create more inefficiencies. Pushing a project to production shouldn’t take months.

2. Risks - Pushing projects into production involves a new set of risks that are very different from development. Data protection, opacity, or biases become tangible issues that can harm an organization’s reputation and financial performance.

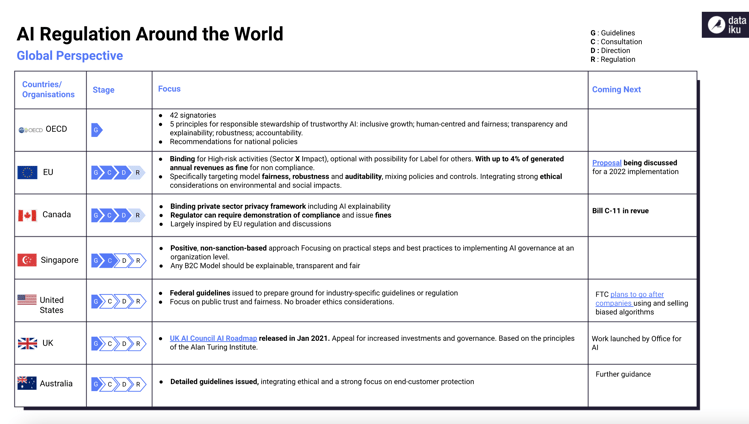

3. Legal Pressure - These risks have been identified and discussed by regulators around the world for the last four years. Today, a growing number are proposing regulations to set new standards for AI Governance for the private sector, with potential fines for non-compliance.

AI Regulation status around the world. Source: Dataiku

All these dynamics invite organizations to start thinking about AI Governance, Machine Learning Operations (MLOps), and Responsible AI today to sustain the growth of their AI projects. As with data governance, addressing the issue too late in one’s maturity cycle will require more time and money to catch up with accumulated debt.

Dataiku’s Enterprise AI maturity model, which highlights when management emerges as a critical topic for organizations. Source: Dataiku

Now that you know why this is important, let’s try to understand what these concepts mean and how they relate to each other.

Let's Start With AI Governance

Oh wait...no, we have to think about these three concepts all together! Here’s the catch: AI Governance is a framework (enabling processes, policies, and internal enforcement) that ties together operational (MLOps) and values-based (Responsible AI) requirements to enforce consistent and repeatable processes aimed at delivering efficient AI at scale. More specifically, it helps manage operational risks and maintain legal compliance for AI and advanced analytics projects.

In other words, these three concepts constitute a consistent approach to make AI project steps efficient, repeatable, and reusable across your organization. This is what we mean by scaling!

AI Governance sits at the intersection of value-based (Responsible AI) and operational (MLOps) concepts

See the short definitions below for further context:

- MLOps: focuses on end-to-end model management, from data collection down to operationalization and oversight

- Responsible AI: ensures end-to-end model management addresses the specific risks of AI (e.g., discriminatory biases and lack of transparency)

- AI Governance: delivers end-to-end model management at scale, with a focus on risk-adjusted value delivery and efficiency in AI scaling, in alignment with regulations

The conceptual triangle that is needed to scale with AI; every concept relates to and builds on the other

Let’s take a concrete example to clarify this vision. AI Governance policies will document how AI projects should be managed throughout their lifecycle (MLOps) and what specific risks should be addressed and how (Responsible AI). Analytics teams will then have to meet governance requirements like:

- MLOps: Limiting access to production AI projects, logging all activities on a project, keeping prior versions of models, etc.

- Responsible AI: Using explainability techniques to provide interpretability for internal stakeholders, testing for biases in their data and models, documenting their decisions, and more
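The requirements above can be sketched as an automated check that a team runs before a project goes to production. This is only an illustration: the `ProjectRecord` fields, the `governance_gaps` function, and the `min_versions` default are hypothetical names and values chosen for this example, not part of any Dataiku API or of a specific governance standard.

```python
from dataclasses import dataclass, field


@dataclass
class ProjectRecord:
    """Hypothetical snapshot of a production AI project's governance state."""
    name: str
    production_editors: set[str] = field(default_factory=set)
    activity_logging_enabled: bool = False
    retained_model_versions: int = 0
    bias_test_documented: bool = False
    explainability_report: bool = False


def governance_gaps(project, approved_editors, min_versions=2):
    """Return a list of unmet requirements (an empty list means compliant)."""
    gaps = []

    # MLOps: limit access to production AI projects.
    unapproved = project.production_editors - set(approved_editors)
    if unapproved:
        gaps.append(f"MLOps: unapproved production access for {sorted(unapproved)}")

    # MLOps: log all activities on a project.
    if not project.activity_logging_enabled:
        gaps.append("MLOps: activity logging is disabled")

    # MLOps: keep prior versions of models.
    if project.retained_model_versions < min_versions:
        gaps.append(f"MLOps: fewer than {min_versions} model versions retained")

    # Responsible AI: test for biases and document the outcome.
    if not project.bias_test_documented:
        gaps.append("Responsible AI: no documented bias test")

    # Responsible AI: provide interpretability for internal stakeholders.
    if not project.explainability_report:
        gaps.append("Responsible AI: no explainability report")

    return gaps


# Illustrative project; all values are made up.
fraud_project = ProjectRecord(
    name="outbound-payment-fraud",
    production_editors={"alice", "mallory"},
    activity_logging_enabled=True,
    retained_model_versions=1,
    bias_test_documented=True,
)
for gap in governance_gaps(fraud_project, approved_editors={"alice"}):
    print(gap)
```

Keeping the policy as data plus one function means the same check can be reused across projects, which is the repeatability that a governance framework is meant to provide.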

Not Convinced? Let's Take Another Example!

Imagine working in a bank’s fraud department, trying to protect your customers from being scammed by nuisance callers. There is an onus on the institution to use all available means to minimize the risk to customers and financial loss, and to do so in a responsible manner. A proactive measure could be to identify vulnerable customers based on their demographics or transaction history, or to raise awareness of scams and how to handle them.

An interventionist measure could assess the risk of outgoing bank payments being fraudulent based on the transaction’s characteristics (e.g., amount, location). An ensemble of two such models combining the customer and payment profiles could be implemented to block suspicious outbound transfers unless a customer agent verifies the intent with the account holder. A seemingly simple scenario such as this is riddled with complexities that span the MLOps, Responsible AI, and AI Governance themes.

Let’s start with AI Governance. Before experimenting with the data or attempting to build the models, there might have been multiple steps completed by multiple people within the bank to target and qualify the project. In this case, the need for this project has emerged within the fraud department and was brought to the attention of the Advanced Analytics (AA) team.

Depending on the maturity of the bank, the AA team might not have all the guidelines and frameworks needed to appropriately qualify the project and address questions like “What value does this project bring for the bank? Do we have the resources to do this project (i.e., talent, data, infrastructure)? What risks are involved in this project (for the bank, the customer) and are they acceptable?” Without such principles, or the roles identified to implement them, it would be difficult for the team to assign the right resources to the project and identify risks as the project progresses. In other cases, where the guidelines are established, the AA team might need to coordinate the qualification with other teams for approval (e.g., Risk & Compliance).

When it comes to MLOps and Responsible AI, we have to be careful at every step of the life cycle, starting with the data. Creating a holistic customer profile and suspicious transaction model typically involves collating and processing information from many different systems to produce useful datasets. Without the ability to discover and understand the provenance, freshness, facets, and permissions associated with the information, data science teams can struggle to even begin experimentation due to insufficient data quality and completeness — remember that flagging fraudulent transactions is akin to searching for needles in a haystack!

A point of concern when it comes to personal information is the potential for embedded bias against underrepresented, or otherwise disadvantaged populations. Many fraudsters will target individuals over the age of 65 under the assumption that these individuals are unlikely to question whether or not they are being defrauded. From the perspective of Responsible AI, we should measure and account for differences in model performance among various age groups. Since the data is likely to skew towards more fraud cases for older populations, data scientists should test whether their models are able to correctly detect incidents for age groups that are underrepresented in the dataset. This may mean creating multiple models tailored to different populations, especially those that are more vulnerable to fraud.
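As a minimal sketch of the per-group check described above, the snippet below computes recall (the share of actual fraud cases the model caught) separately for each age group. The function names, the age bands, and the toy labels are assumptions made for illustration; a real evaluation would use held-out data and the bank's own group definitions.

```python
from collections import defaultdict


def recall_by_group(y_true, y_pred, groups):
    """Recall per group: caught fraud cases / actual fraud cases in that group.

    Groups with no actual fraud cases are omitted, since recall is undefined.
    """
    caught = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            total[group] += 1
            caught[group] += int(pred == 1)
    return {group: caught[group] / total[group] for group in total}


def recall_gap(recalls):
    """Largest difference in recall between any two groups."""
    values = list(recalls.values())
    return max(values) - min(values)


# Toy data (made up): 1 = fraud, 0 = legitimate.
y_true = [1, 1, 1, 1, 0, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0]
groups = ["65+", "65+", "65+", "65+", "65+", "under 65", "under 65", "under 65"]

recalls = recall_by_group(y_true, y_pred, groups)
print(recalls)              # {'65+': 0.75, 'under 65': 0.5}
print(recall_gap(recalls))  # 0.25
```

A large gap suggests the model serves some age groups worse than others, which is one signal that separate models or rebalanced training data may be worth testing.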

AI Governance, MLOps, and Responsible AI complement each other to add consistent safeguards to scaling AI

When it comes to modeling, the high probability of false positives, which would block a remittance and cause unnecessary customer frustration, underlines the importance of reproducible and explainable models. Such models enable the AA team (and ultimately the business owners) to have confidence in the methodology used when they tune the model’s sensitivity based on the risk/reward appetite of the bank.
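One simple way to picture tuning sensitivity to a risk/reward appetite is to assign a cost to each kind of error and pick the blocking threshold with the lowest total cost on validation data. The sketch below assumes the model outputs a fraud score between 0 and 1; the function names, the candidate thresholds, and the cost figures are hypothetical and would come from the business in practice.

```python
def total_cost(scores, labels, threshold, cost_false_positive, cost_false_negative):
    """Total cost of blocking every payment whose score is >= threshold."""
    cost = 0.0
    for score, is_fraud in zip(scores, labels):
        blocked = score >= threshold
        if blocked and not is_fraud:
            cost += cost_false_positive   # legitimate payment blocked
        elif not blocked and is_fraud:
            cost += cost_false_negative   # fraudulent payment let through
    return cost


def pick_threshold(scores, labels, candidates, cost_false_positive, cost_false_negative):
    """Return the candidate threshold with the lowest total cost."""
    return min(
        candidates,
        key=lambda t: total_cost(
            scores, labels, t, cost_false_positive, cost_false_negative
        ),
    )


# Toy validation set (made up): 1 = fraud, 0 = legitimate.
scores = [0.10, 0.40, 0.35, 0.80, 0.65, 0.90]
labels = [0, 0, 1, 1, 0, 1]
candidates = [0.3, 0.5, 0.7]

# A bank that weighs missed fraud far above customer friction blocks more.
print(pick_threshold(scores, labels, candidates, 1, 10))  # 0.3
# A bank that weighs customer friction more heavily blocks less.
print(pick_threshold(scores, labels, candidates, 10, 1))  # 0.7
```

Because the costs are explicit inputs, the chosen threshold can be reproduced and explained to business owners instead of being set by feel.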

Explainability is critical when it comes to sharing results, both internally and externally. In the case of a false positive (that is, flagging a legitimate transaction as fraudulent), clients and their payees will need an explanation for why their payment was flagged. Providing that explanation presents the opportunity for real-time feedback on the model, generates more trust in the decision-making process, and can serve as education that helps victims of fraud better identify its signs in the future.

Once an ensemble is eventually validated for deployment, the complexity continues as there can be a substantial lag between processed payments and the identification of fraudulent transactions, not to mention evolving customer and criminal behavior. All of these considerations lead to the need for input drift and performance monitoring based on a feedback loop for continuous improvement of the ensemble. The coupled nature of the two profiling models may require independent updates, with careful management and evaluation to ensure that incremental developments are in fact improvements!
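Because confirmed fraud labels arrive with a lag, input drift is often checked first, since it only needs the incoming features. One common measure, used here as an illustration rather than as the method the article prescribes, is the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its distribution in production. The function name, bin edges, and sample amounts below are assumptions; the 0.2 alert level is a widely quoted rule of thumb, not a fixed standard.

```python
import math


def population_stability_index(reference, current, bin_edges, eps=1e-6):
    """PSI between two non-empty samples of one numeric feature.

    bin_edges must be sorted ascending; values below the first edge and at or
    above the last edge fall into open-ended bins. eps avoids log(0) when a
    bin is empty.
    """
    def proportions(values):
        counts = [0] * (len(bin_edges) + 1)
        for value in values:
            # Index of the bin = number of edges the value has reached.
            counts[sum(value >= edge for edge in bin_edges)] += 1
        return [max(count / len(values), eps) for count in counts]

    ref = proportions(reference)
    cur = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Toy payment amounts (made up): training period vs. recent production traffic.
training_amounts = [20, 35, 50, 80, 120, 200]
recent_amounts = [400, 650, 900, 1200, 150, 90]
edges = [50, 100, 500]

psi = population_stability_index(training_amounts, recent_amounts, edges)
if psi > 0.2:  # rule-of-thumb alert level, an assumption
    print(f"Input drift detected (PSI={psi:.2f}); review the model")
```

Once labels do arrive, the same monitoring loop can be extended with performance metrics so that drift alerts and measured accuracy are reviewed together.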

As the project reaches its conclusion, the AA team would enable the fraud team to oversee the model monitoring even as new risks arise. AI Governance comes in again to make sure that all the projects are well documented according to company guidelines for the project handover. The fraud team needs to know where the models are, how they were built, and what assumptions were made. They need this information in order to address their own concerns, as well as those from risk and compliance and from regulators.

If this pipeline works as intended, then it should be harder for criminals to defraud customers. As a result, they will change their behaviors to avoid detection, meaning that over time the model’s efficacy will decrease in the face of changing behaviors. To stay up to date, the life cycle will restart, reinforcing the connection between AI Governance, MLOps, and Responsible AI as an iterative and living process.

Now Let's Get You Geared Up!

Now that we have defined AI Governance, MLOps, and Responsible AI, explained why they are important for any AI scaling journey, and shown how they fit operationally within an organization, you may be wondering how to put this into practice. To start your journey, you might want to look for best practices personalized for your location. Please click here for advice for French companies, here for European-focused advice, North American advice here and here, as well as some Southeast Asian recommendations here.

When it comes to tooling, Dataiku brings together AI Governance, MLOps, and Responsible AI capabilities to manage AI at scale, in one single platform. We thus make sure any contributor to the scaling journey can not only learn from data but also build new insights. Everyone can:

- Ensure the data or the model does not carry any discriminatory biases

- Understand the outputs of a model and why it made a given decision

- Inform model retraining strategy based on data

- Document model understanding in a couple of minutes

- Review project activity and past model versions

- and more!

Dataiku has you covered for even the most complex AI projects. If you're ready to dive into Generative AI applications, check out our LLM Mesh and our RAFT framework, which will help you responsibly and effectively scale Generative AI throughout the enterprise. Our role is to make your life easier as you embark or sail away on your AI journey, at your own rhythm and on your own terms.
