When building an AI strategy fit to carry the business through economic highs and lows, it’s critical to have systems for deploying, monitoring, and retraining models in production. It’s just as critical to be able to quickly introduce, test, train, and implement new models in order to shift strategies or adapt to changing environments on a dime. Enter MLOps.

What Is MLOps and Why Do We Need It?

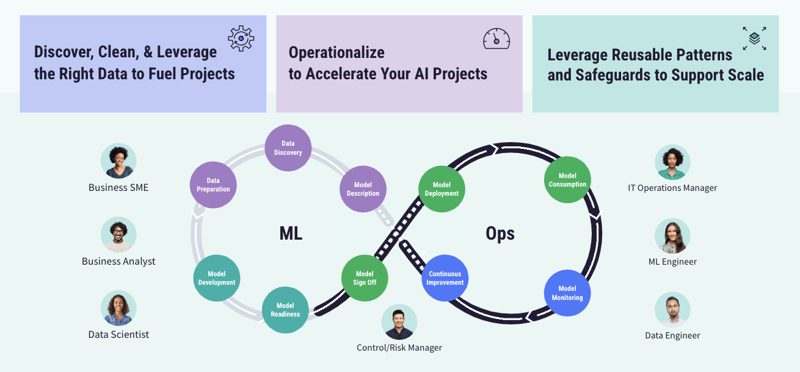

Model-based machine learning and AI are rapidly becoming mainstream technologies in all large enterprises. To reap the full benefit, models need to be put into production, but doing that at scale presents new challenges. Any definition of MLOps has to start from the fact that existing DevOps and DataOps expertise is not enough, because the fundamental challenges of managing machine learning models in production are different.

That’s where MLOps comes in: it is the standardization and streamlining of machine learning lifecycle management, and it aims to remove friction from that lifecycle. However, MLOps is not simply the application of DevOps practices to machine learning. In fact, there are three key reasons why managing machine learning lifecycles at scale is challenging:

- In contrast to DevOps, the AI tools landscape is highly fragmented and constantly changing, involving many heterogeneous technologies and practices.

- Operating AI at scale is a team sport, and not everyone speaks the same language. Although the machine learning lifecycle involves people from the business, data science, and IT teams, these groups do not use the same tools or, in many cases, even share the same fundamental skills.

- Most data scientists are neither software engineers nor data engineers. They specialize in model building and assessment and are not necessarily experts in deploying and maintaining models, yet they often have no choice but to do it anyway.

Building Efficient ML Operations

A robust machine learning model management program, one that builds strong ML operations, should aim to answer questions such as:

- What performance metrics are measured when developing and selecting models? How do we make sure our models are robust, free of bias, and secure?

- What level of model performance is acceptable to the business?

- Have we defined who will be responsible for the performance and maintenance of production machine learning models?

- How are machine learning models updated and/or refreshed to account for model drift (deterioration in the model’s performance)? Can we easily retrain models when alerts come in?

- How do we make models and machine learning projects more reliable for deployment?

- How can we swiftly deploy machine learning projects to address the ever-changing business needs in the fast-paced AI environment?

- How are models monitored over time to detect model deterioration or unexpected, anomalous data and predictions?

- How are models audited, and are they explainable to those outside of the team developing them?

- How are we documenting models and projects along the AI lifecycle?
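The questions above about acceptable performance, monitoring, and retraining can be made concrete with a small check. What follows is a minimal sketch, not a prescribed implementation: the names `DriftReport` and `check_drift`, the `max_drop` tolerance, and the choice of plain accuracy as the metric are all assumptions made for illustration. It compares a model's accuracy on recently labeled data with the accuracy recorded at deployment and flags the model for retraining when the drop exceeds what the business has agreed to tolerate.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class DriftReport:
    """Result of one monitoring check (hypothetical structure)."""
    accuracy: float          # accuracy on the recent labeled window
    threshold: float         # lowest accuracy still considered acceptable
    needs_retraining: bool   # True when accuracy fell below the threshold


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the observed labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("cannot compute accuracy on an empty window")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def check_drift(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    baseline_accuracy: float,
    max_drop: float = 0.05,  # assumed tolerance; set by the business in practice
) -> DriftReport:
    """Flag the model when recent accuracy drops too far below the baseline."""
    current = accuracy(y_true, y_pred)
    threshold = baseline_accuracy - max_drop
    return DriftReport(current, threshold, current < threshold)


# Example: the model scored 0.90 at deployment; 7 of the 10 most recent
# predictions were correct, so the check raises a retraining alert.
recent_labels = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
recent_preds = [1, 0, 1, 0, 0, 1, 1, 0, 0, 1]
report = check_drift(recent_labels, recent_preds, baseline_accuracy=0.90)
if report.needs_retraining:
    print("Alert: model performance below acceptable level, retrain.")
```

In practice the metric, the tolerance, and the owner of the resulting alert are exactly the decisions the questions above ask teams to make explicitly.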

These questions span the machine learning model lifecycle, and their answers involve not just data scientists but people across the enterprise, which illustrates that MLOps is not just about tools or techniques. It's about breaking down silos and fostering collaboration to enable effective teamwork on machine learning projects around a continuous, reproducible, and frictionless AI lifecycle. Answering these questions is not an optional exercise: it is about not only efficiently scaling data science and machine learning to enable Everyday AI, but also doing so in a way that doesn’t put the business at risk.

Teams that attempt to deploy data science without proper MLOps practices in place will face issues with model quality and continuity, especially in today’s unpredictable and constantly shifting environment. Worse still, teams without MLOps practices risk introducing models that have a real, negative impact on the business (e.g., a model that makes biased predictions that reflect poorly on the company).