If you had asked me 5 years ago if a vaccine could be developed and approved (for emergency use) in less than a year using mRNA technology I would have woman-splained (my husband calls it “XX”plaining) to you that the discovery and development process just doesn’t work like that. The last three years have rocked the healthcare and life sciences landscape like never before. In the aftermath of a global pandemic, there is now a spotlight on the need for more resilient, adaptive healthcare systems and an imperative to rapidly accelerate innovation and discovery in life sciences.

Looking ahead, as we consider where acceleration and new innovation will come from, it often feels like science fiction. The promise of AI in life sciences has been a theoretical discussion point for years now. Today I want to focus on how that vision might realistically take shape in 2023.

Demand for Health Equity

Covid-19 outcome disparities (both deaths and hospitalizations) came to light quickly; but corrective action has not yet been delivered. For example, despite the data clearly showing racial and ethnic inequities in disease outcomes, outpatient treatment during the summer of 2022 with the antiviral Paxlovid was 36% and 30% lower among Black and Hispanic patients compared to White/non-Hispanic populations. What’s worse, this disparity was even higher in high-risk and immunocompromised subpopulations (the largest relative treatment difference of 44% occurred between Black and White patients aged 65–79 years).

2023 needs to bring actionable efforts into improving health equity. This will rely on broadening the approach to data; and understanding and collecting the right sources to incorporate social and behavioral signals into patient care and therapeutic development. Health care providers will increasingly focus on value-based care, health insurers on preventative community services to promote precision health instead of just prescriptive precision medicine, and drug manufacturers and biotech on value-based pricing and incorporating new social/behavioral data sources into therapeutic development.

With both regulatory pressure and an increasing focus on ESG initiatives, the incentives are there for a renewed effort in data science applications to tackle, for example, how we can increase diversity and representation in clinical trial patient recruitment and criteria protocols. We have already begun to see such considerations incorporated into market access and therapeutic access equity in drug launch, as well as in commercial analytics in our interactions with life science organizations.

Real World Data Driving New Machine Learning Paradigms

Incorporating social and community data into patient care or therapeutic development contributes to the growth of health-related data. Real world data (RWD) or real world evidence (RWE) has been a go-to trend for several years. Five years ago, 90% of surveyed biopharma companies were investing in RWE, but only 45% felt they had the maturity or capabilities to do so. Anecdotally, several years ago I remember asking a friend working in clinical research how many of their trials incorporated RWE, and he answered less than 2%. In a review of NDA/BLA applications from 2019 to 2021, 85% of applications had “incorporated RWE for any purpose.”

I would say RWD is real now and here to stay, which impacts both the data architectures and modeling paradigms in order to extract real insights.With RWD pervasive, expect to see emerging focus on two key areas in machine learning:

- Multimodal data collection and corresponding multimodal AI (machine learning models that integrate multiple types of data/modalities, a rich research area in cognitive AI)

- Moving beyond association with causal inference/machine learning (for example, using uplift models to determine the impact of community preventative health programs, or of clinical interventions leading to disparate patient outcomes)

The inadequacy of machine learning to provide robust insights that hold up under the level of scrutiny needed in medical care (such as diagnostics) or are reliable for quality/risk decisions in therapeutic development and delivery is a common criticism. Consider three examples of how we usually make informed decisions:

- When a doctor makes a medical diagnosis (such as identifying a tumor in an MRI-scan), using all of the information at hand: patient demographics, history, behaviors, visit notes, vitals, labs, images, corroboration with other professionals

- When we incorporate patient population characteristics, individual genomic signals, known biological underpinnings of disease/drug targets, signals from both clinic-collected data and connected devices, and patient-reported outcomes into clinical research decisions for new therapeutics

- How we approach health care providers and patients with a given treatment based on multiple facets of doctor/patient characteristics, behaviors, and novel at-home or virtual care models (image/video/text) to ensure better health outcomes

We (humans) have the capacity to use all the data available to us to make these decisions. Why should the models that aid us in decision-making only use a slice of that?

Essentially, both multimodal and causal models will be increasingly relied upon for building decision intelligence that takes into account all the data (EHRs, clinical research, images, text, multi-omics data, digital biomarkers from wearables, population/community health metrics), and that allows us to move from understanding to attribution.

At a technical level, multimodal machine learning applications in healthcare are feasible through feature engineering practices — for example, creating patient embeddings (e.g. neural networks) on each data modality. This technique may also alleviate concerns about the integration of patient data sources needed to fuel multimodal models: it obscures protected health information with the corresponding lower dimension representation when necessary. The work involved to collect and process varied data requires additional emphasis on data science beyond just model building but the entire end-to-end process of data engineering, data manipulation, and delivery for decision support.

One of the challenges to be faced here will be the battle of increasing “black-box” model complexity versus simpler, interpretable models. As a consequence, we will continue to see a greater emphasis placed on the use and development of explainable and Responsible AI in order for such models to be trusted.

ChatGPT: The Results Aren’t in — Yet

Speaking of new data sources, responsibility, and trust — I don’t think I’m allowed to write a 2023 trends blog without mentioning ChatGPT, am I?

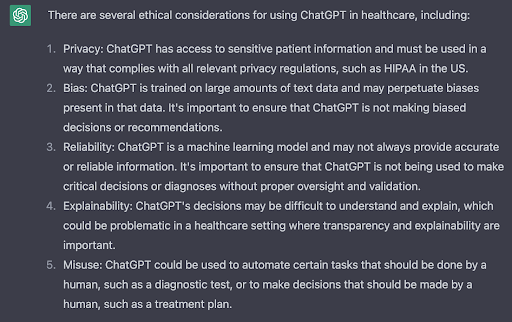

There is no doubt that generative AI is going to impact the healthcare industry. The biggest immediate effect will be questions raised about the ethical use of such technology. This can already be seen, for example, in the backlash to a mental health company experimentally using GPT-3 chatbots to help develop responses in their platform.

I could elaborate, but actually ChatGPT does a pretty good job on this point.

Much like the complexity that comes with dealing with multimodal data models, companies will need to continue to focus on how to build governance in their data and AI practices — especially if insights from these data sources are to be ethically embedded into business decision-making.

Implications for Composable Data Architectures

A final trend is a direct implication of all of the above. The root of RWD is building a better context for informed data insights at the appropriate decision points. ChatGPT appropriately highlighted some of the privacy concerns to keep in mind as we collect and allow broader access to patient health-related (and potentially identifying) data; a point that not only applies to generative AI but also cognitive AI (like multimodal machine learning applications). Ensuring patient de-identification is increasingly difficult and often stymies data science initiatives. This is why we’re seeing increased investment into synthetic data generation, federated learning (two additional topics we will see a lot of buzz about in 2023) and composable data architectures.

Data with varied modalities becomes increasingly hard to manage in a single centralized location (and invites data privacy concerns), whereas a composable data architecture accommodates different structures/locations/ownership. With more and varied data sources there will be continued emphasis on modular data pipelines, whether it’s conversations of a data fabric (more popular on the discovery and clinical development side where single source of truth and centralization is key) or a data mesh (adoption growing in teams relying on disparate hospital/health systems data with decentralized ownership). Regardless of the type of material with which you characterize your data, in 2023 there will (need to be) a continued focus on both data interoperability and metadata collection/management to support composable data.

Much as RWD is required to bring context to patient outcomes, a focus on metadata is paramount to characterizing the semantic meaning of data and its validity/suitability in data science applications supporting patient outcomes. Moreover, when these applications are built based on composability, the entire data value chain can be componentized and delivered in a future-proof, flexible design to truly scale analytic insights.

Much like real-world data, these examples of advanced analytics and AI considerations are here to stay with respect to driving life sciences innovation. And it’s time for data, domain, and business experts to collectively embrace them.