I had a great time earlier this week talking to the EGG audience at Dataiku about the past and future of algorithmic mistakes and harms. Broadly speaking, I introduced the concept of a “Weapon of Math Destruction” (WMD) as a predictive algorithm that is high stakes, opaque, and unfair. I gave examples from public education, hiring, the justice system, and credit.

One of the main takeaways from these myriad examples of what can go wrong is that there wasn’t one mistake that came up for each example; indeed, in each example, a different thing had gone wrong, from bad statistics to answering the wrong question, from biased data to missing data, and finally to explicitly embedding an illegal mental health exam inside a hiring filter. For that matter, it was also a different set of stakeholders that were harmed in the different examples.

So what do we do about algorithmic harm, if problems can come from any direction and flow towards any target? That when I introduced the concept of the Ethical Matrix (EM)[1], the goal of which is to work out who the stakeholders are in a given fixed algorithm context - i.e. who will be impacted by this algorithm one way or another - and to invite them into a conversation about what it would mean to them for the algorithm to succeed or fail.

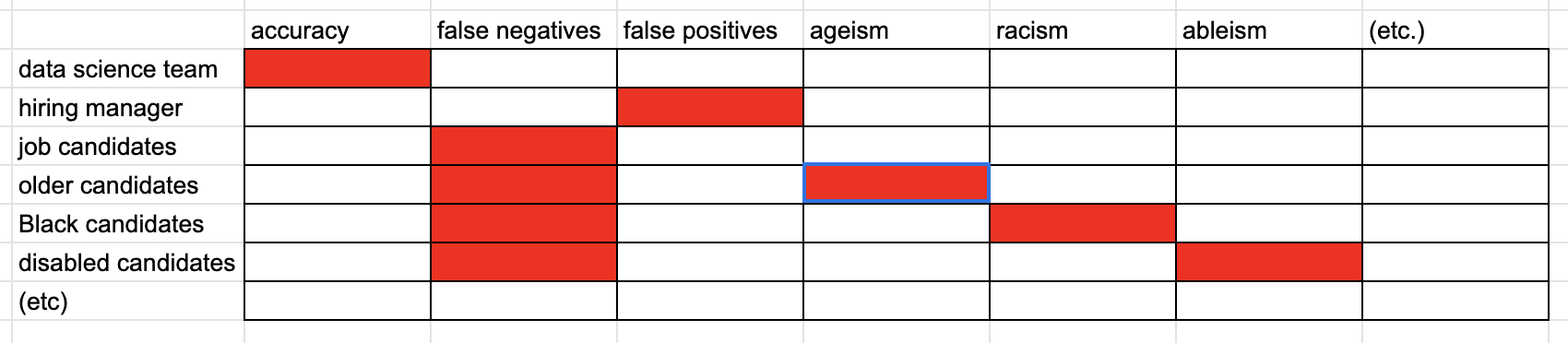

The stakeholders make up the rows of the matrix, while their concerns make up the columns. Moreover, if a given stakeholder group (say, job candidates in the context of a hiring algorithm) naturally subdivides into subgroups based on different concerns, then you give them separate rows. So, for example, older job candidates will be concerned about ageism, and candidates with veteran status or with a mental or physical disability will likewise constitute protected classes. They should each be given their own rows.

A sample (incomplete) ethical matrix for job candidates in the context of a hiring algorithm. This matrix can — and should — be leveraged by any teams building AI systems.

Next, ideally, representatives from those stakeholder groups should be invited to the conversation to express their concerns, both positive and negative, about the algorithmic context. For example, older candidates might think they have a better shot with an algorithm if it doesn’t take into account age.

Once we’ve constructed the stakeholders and their concerns, we consider each cell of the matrix individually, and consider things like, how important is this concern for this stakeholder group? How likely is it to go badly, and how bad would it be if it did go badly?

Of course, this cell-by-cell set of conversations is subjective, and probably will be led differently by different choices of stakeholders. That’s ok, this matrix is not a perfect tool, nor is it meant to be algorithmic itself! It is simply a tool to make sure that no obvious problems have been ignored or even created by the careless application of technology.

In other words, we can see this is a litmus test, a way of asking, for whom does this fail in a structured way. It’s also a great way to bring stakeholders into the conversation, before the algorithm is finalized and deployed, ideally in the design stage. I’m imagining that, if IBM, Microsoft, and Amazon had worked out an ethical matrix before deploying their facial recognition technologies, it would not have been up to Gender Shades to notice their algorithms work much less well on women and on people of color[2].

Beyond its utility in designing software, I am hoping that regulators will use the ethical matrix process to figure out how to explicitly define compliance with a given existing law[3]. I also imagine that arguments can and should be made for new laws in the context of AI by building an EM and seeing what could go wrong and for whom.

[1] I co-wrote a paper which introduces the Ethical Matrix, called “Near-Term Artificial Intelligence and the Ethical Matrix” and published in the volume “Ethics of Artificial Intelligence”, edited by Professor Matthew Liao.

[2] Thanks to the seminal work of Joy Buolamwini and Timnit Gebru, Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification

[3] As I mentioned in my talk, even the notion of what constitutes racism in crime risk scores is currently an open question.