With the provisional agreement on the EU AI Act reached last week, it is tempting for companies to take the easy path and tailor their response precisely to the law. However, this narrow approach does not prepare an organization for additional rules and regulations in other jurisdictions, or for unforeseen circumstances. The best strategy for global organizations is to create a resilient system that allows for adaptation while ensuring trust in AI models, projects, and programs. Beyond compliance, Dataiku's AI Governance can increase the value of AI programs by fostering acceptance, adoption, and trust.

By 2026, AI models from organizations that operationalize AI transparency, trust, and security will achieve a 50% improvement in terms of adoption, business goals, and user acceptance.

Source: Gartner®, Tackling Trust, Risk, and Security in AI Models, by Lori Perri, Sept. 5, 2023

In this article, we’ll cover key AI risks companies face and what it takes to build a trusted framework that can cope with a wide range of regulations.

Organizations Struggle to Grow With AI & Face Challenges

On the road to broad acceptance and real business value, AI projects face many challenges across teams and systems. Here are a few:

The Challenge of Explainability: Navigating the AI Maze

Some of the most frequently asked questions include:

- How do we make model operations understandable and transparent?

- How do we align the models with our strategic initiatives?

Organizations grapple with the "dark AI" phenomenon: a lack of explainability. The challenge lies in demystifying AI for varied stakeholders, including executives, users, and consumers of model outputs, through audience-specific explanations that highlight each model's strengths, limitations, predictive behavior, and possible biases.

The Accountability Dilemma: Cultivating Collective AI Governance

Addressing the challenges of AI accountability involves tackling questions like:

- How do we make sure everyone feels involved in the development and execution of AI projects?

- How do we ensure that actions and decisions are well-coordinated throughout the AI lifecycle?

Questions of accountability in AI Governance revolve around two things: inclusive participation in AI project development and decision-making, and coordinated action across the AI lifecycle. Companies often form governance committees, but inadequate tools and workflows limit their effectiveness, leading to disengagement and weak governance.

Oversight Shortcomings: Scaling AI Without Losing Sight

We often hear from companies managing several AI projects in parallel:

- How can we track all projects and monitor their performance from a single location?

- How do AI projects or the related AI models tie in with the company's strategic initiatives?

Companies that manage multiple AI projects simultaneously confront oversight and performance-tracking issues. The challenge intensifies as AI use expands: managing projects manually risks loss of control and regulatory non-compliance, which underscores the need for foundations that support sustainable AI growth.

So, how can organizations preemptively mitigate risks and establish a robust AI Governance structure that endures over time? Establishing a future-proof system that adapts to evolving regulations is essential.

3 Pillars for an Enduring Trust Framework

To concretely address the challenges of building a trustworthy AI framework, Dataiku took a proactive step a few years ago by introducing built-in governance capabilities within our platform. Because these capabilities are native, they make life dramatically easier for everyone managing data and AI risks within the organization. To discover more, see Govern and Dataiku AI Governance.

Now, let's explore the three-step action plan for building this strong trust framework. It focuses on regulatory compliance, Responsible AI practices, transparent AI operations, and the scalability to handle diverse projects across the organization.

1. Establish AI Resilience Amid Regulatory Complexities

Adopt a Risk-Based Model Classification: Use a risk matrix to categorize AI models by criticality, which helps prioritize impacts and risks.

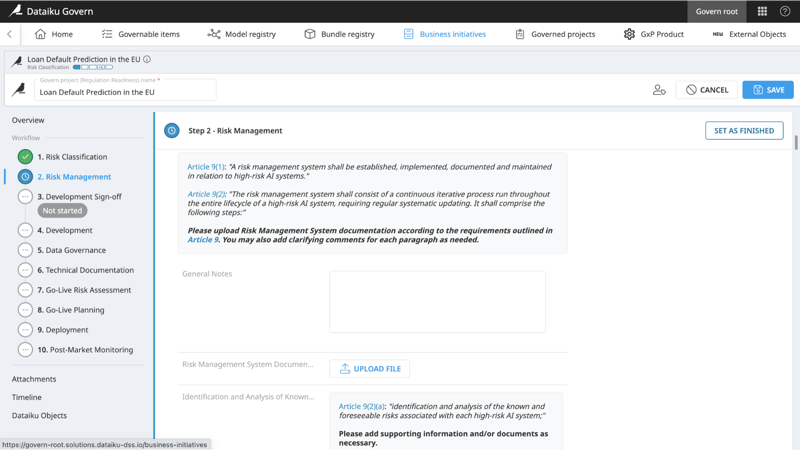
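To make the risk-matrix idea concrete, here is a minimal sketch assuming a simple 3x3 matrix where each model is scored on likelihood and impact. The three-level scale, the score thresholds, the tier names (`critical`, `elevated`, `low`), and the example model names are all hypothetical. They are not Dataiku's classification and not the EU AI Act's risk categories.

```python
from enum import IntEnum


class Level(IntEnum):
    # Hypothetical three-level scale used for both likelihood and impact.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def risk_tier(likelihood: Level, impact: Level) -> str:
    """Map a (likelihood, impact) cell of a 3x3 matrix to an illustrative risk tier."""
    score = likelihood * impact  # ranges from 1 to 9
    if score >= 6:  # assumed threshold for the highest tier
        return "critical"
    if score >= 3:  # assumed threshold for the middle tier
        return "elevated"
    return "low"


def prioritize(portfolio: dict[str, tuple[Level, Level]]) -> list[str]:
    """Order model names by matrix score, highest risk first (ties keep input order)."""
    return sorted(portfolio, key=lambda name: portfolio[name][0] * portfolio[name][1], reverse=True)


# Hypothetical portfolio: model name -> (likelihood, impact)
models = {
    "churn_predictor": (Level.MEDIUM, Level.LOW),
    "credit_scoring": (Level.MEDIUM, Level.HIGH),
    "demand_forecast": (Level.HIGH, Level.LOW),
    "marketing_copy_assistant": (Level.LOW, Level.MEDIUM),
}

for name in prioritize(models):
    print(name, risk_tier(*models[name]))
```

With these assumed thresholds, `credit_scoring` lands in the `critical` tier and is reviewed first, while the two low-scoring models follow. In practice, the scales and cut-offs would come from the organization's own risk policy.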

Use Compliance-Ready Templates: Accelerate compliance with templates that simplify regulatory alignment without requiring a deep dive into extensive AI legislation.

To help you speed up your compliance efforts in line with our business solutions, Dataiku provides several templates to support your regulatory preparation. The benefit? You get initial guidance through compliance without having to delve into 300 pages of AI regulation. Let our approach, which incorporates the key principles of the EU AI Act, guide you.

Leverage Adaptable AI Governance Processes: Keep governance workflows flexible enough to accommodate different regulatory frameworks, so they can be tailored to each context.
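One way to keep workflows adaptable is to treat each regulatory framework as configuration rather than hard-coding it. The minimal sketch below assumes a hypothetical mapping from framework names to required governance steps. A project's workflow is then the baseline steps plus those of every framework it falls under, with duplicates removed. The framework keys and step names are illustrative placeholders, not a complete or authoritative summary of any regulation's obligations.

```python
# Hypothetical mapping: framework name -> ordered governance steps it requires.
# Step names are illustrative placeholders, not a legal checklist.
WORKFLOW_STEPS: dict[str, list[str]] = {
    "baseline": ["document_purpose", "classify_risk", "business_signoff"],
    "eu_ai_act_high_risk": ["data_governance_review", "human_oversight_plan", "technical_documentation"],
    "internal_model_risk_policy": ["independent_validation", "technical_documentation"],
}


def build_workflow(frameworks: list[str]) -> list[str]:
    """Combine baseline steps with framework-specific steps, keeping order and dropping duplicates."""
    steps: list[str] = []
    for name in ["baseline", *frameworks]:
        for step in WORKFLOW_STEPS[name]:
            if step not in steps:
                steps.append(step)
    return steps


print(build_workflow(["eu_ai_act_high_risk", "internal_model_risk_policy"]))
```

The design choice is that supporting a new jurisdiction means adding one entry to the mapping, while existing projects keep their workflows unchanged.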

2. Apply AI Accountability and Transparency Across Projects

Empowerment for Accountability: Designate teams to document AI processes, fostering accountability throughout development and deployment.

Diverse Collaborative Review: Engage stakeholders from business, IT, and compliance for model evaluations, ensuring comprehensive scrutiny before deployment.

Transparent Audit Trails: Maintain audit trails for actions within the AI Governance framework, enhancing transparency and fulfilling compliance needs.
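As an illustration of the audit-trail practice, the sketch below keeps an append-only log in which each entry stores the SHA-256 hash of the previous one. Editing any past entry then breaks verification. The hash-chaining technique, the `AuditTrail` class, and its field names are assumptions chosen for illustration, not a description of how Dataiku records audit events.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditTrail:
    """Hypothetical append-only log; each entry is chained to the previous one by hash."""

    entries: list[dict] = field(default_factory=list)

    def record(self, actor: str, action: str, target: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "target": target,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash, with sorted keys for a stable encoding.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self) -> bool:
        """Return False if any entry was altered or the chain of hashes is broken."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("alice", "approved", "credit_scoring v2")
trail.record("bob", "deployed", "credit_scoring v2")
print(trail.verify())
```

This makes tampering detectable rather than impossible: someone able to rewrite the whole log could recompute every hash. Real systems therefore usually also store the latest hash somewhere the log's editors cannot change.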

3. Support AI Project Growth With Centralized Governance

Visual Insights for Scalability: Employ centralized visual tools such as Kanban boards for a cohesive overview of AI projects, making tracking and management more efficient.

Ongoing Monitoring of Deployed Models: Implement robust monitoring for model health and performance, ensuring accuracy and effectiveness over time.

Integration with External Systems: Equip the AI Governance framework with APIs for seamless integration with third-party tools, ensuring adaptability and scalability.
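For the ongoing-monitoring item, a common health check is to compare the distribution of a model's recent production scores against a reference sample, such as scores at training time. The minimal sketch below computes the Population Stability Index (PSI) using equal-width bins over the reference range. PSI is one widely used drift measure among several. The bin count, the `1e-6` floor for empty bins, and the `0.25` alert threshold (a common rule of thumb) are assumptions that would need tuning per model.

```python
import math

DRIFT_ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb cut-off for a significant shift


def population_stability_index(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """PSI between a reference sample and a recent sample, using equal-width bins on the reference range."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into the edge bins
            counts[idx] += 1
        # Floor empty bins at a small value so log() is always defined.
        return [max(c / len(values), 1e-6) for c in counts]

    ref = proportions(expected)
    cur = proportions(actual)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


reference_scores = [i / 100 for i in range(100)]
shifted_scores = [s + 0.5 for s in reference_scores]  # simulated drift in production

psi = population_stability_index(reference_scores, shifted_scores)
print(f"PSI={psi:.2f}, drift alert: {psi > DRIFT_ALERT_THRESHOLD}")
```

Because the check returns a single number per model, it is easy to run on a schedule and surface on a central dashboard alongside the other projects being governed.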

No Continuous AI Growth Without an Always-On Trusted Environment

In conclusion, regulations often serve as a final reminder: they spell out the necessary actions and enforce a framework in which non-compliance can lead to costly fines. The true measure of success lies in a company's ability to retain control over its AI destiny by anticipating regulations proactively. A transparent, scalable governance framework is paramount for developing and growing AI projects with confidence. This forward-thinking approach not only navigates the regulatory landscape effectively but also positions companies as leaders in the responsible and sustainable advancement of AI technologies.

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.