Regulating fast-moving technologies is never easy. The current U.S. Department of Justice clash with Google over anti-competitive behavior in the online advertising market is just the latest example in a string of similar antitrust cases involving Big Tech that have failed to yield substantive results. Questions have been asked about whether “antitrust [is] still fit for purpose in the digital age.” In effect: are our legal tools keeping up with market reality?

In this post, we will see how the pace of development in AI poses similar challenges for regulators, and how the EU AI Act in particular is adapting to it. What are the latest changes, and what do they mean for analytics leads and senior leadership teams looking to deliver value from AI?

The Push and Pull of Regulation and Technology

The introduction of the automobile in the late 19th and early 20th centuries had a significant and highly disruptive impact on many areas of the law. Traffic regulations had to be defined and vehicles had to be registered, while the rise in automobile accidents led to the development of liability laws and, eventually, compulsory insurance requirements. The disruption rippled through society to such an extent that it reshaped zoning laws and urban planning. Suburbs expanded dramatically, and the fabric of our cities and society was permanently altered.

While these new laws were initially quite rudimentary, they have evolved over time to become a robust framework of rules and risk mitigation.

Just as the automobile did in the early 20th century, the disruptive force of AI in the early 21st is asking similar questions of regulators seeking to understand, and mitigate, its potential risks. Clearly, regulation must continue to adapt to the evolving landscape of challenges it seeks to address, but in an environment of fast-moving developments, the risk is that our legislative frameworks and case law will always be reactive, one step behind, and lacking the power to effect real change.

Enter the EU AI Act

In April 2021, the European Commission unveiled its ambitious proposal to regulate AI within the European Union. The Act was developed in response to growing concerns about the ethical and legal implications of AI systems (and their risks to consumers). It sets out a framework for the development and deployment of AI systems, covering areas such as transparency, accountability, and data governance, and emphasizes the importance of human oversight in protecting fundamental rights. At its core, the AI Act sorts AI systems into four risk categories (unacceptable, high, limited, and minimal risk), with corresponding requirements for each.

In the two years since the introduction of the first draft, however, AI has continued to evolve, particularly as the possibilities of Generative AI have become clear. The EU AI Act has adapted.

Amendments to the Draft EU AI Act

In June 2023, the European Parliament adopted its position on the Act with no fewer than 771 amendments. These are too numerous to detail individually, but a few broad themes stand out as requiring particular attention from organizations developing or deploying AI:

Expansion and Refinement of Regulatory Requirements

Fundamental rights: Greater protections for citizens in response to the risks of AI use in biometric applications and law enforcement (likely to produce a clash with EU member states opposed to a total ban on AI use in biometric surveillance).

Foundation models, General Purpose AI systems, & Generative AI:

- A range of new obligations for foundation models that affect both model providers and downstream users. These obligations cover pre-market compliance requirements and the information and support that model providers must give downstream users for risk mitigation, and extend to, among other things, the nature of legal agreements between model providers and downstream users.

- Introduction of a risk-based approach for AI models that do not have a specific purpose (so-called General Purpose AI), with a stricter regime for foundation models.

- Introduction of mandatory labeling for AI-generated content and disclosure of training data covered by copyright.

Expansion and clarification of the “high-risk” category: New requirements for companies developing “high-risk applications” to complete a fundamental rights impact assessment and evaluate environmental impact.

Protection of democracy: Recommendation engines, such as those on social media platforms, that could be used to influence elections have been reclassified as “high-risk.”

Establishment of AI Office

The amendments establish an AI Office to support coordination on cross-border cases and to act as a centralized body coordinating the work of the competent national authorities and the European Commission. Responsibility for settling disputes between authorities will be left to the Commission.

The AI Office is also intended to play a role in implementing the AI Act through activities including the development of standards and guidelines, as well as public and market engagement through consultations and other forms of dialogue, for instance with foundation model developers ‘with regard to their compliance as well as systems that make use of such AI models.’

Introduction of General AI Principles

While the first draft of the AI Act proposed specific requirements for high-risk AI systems, limited- and minimal-risk AI systems faced only minor or voluntary requirements, respectively. The compromise text puts forward general principles applicable to all AI systems, concerning the development and use of AI systems or foundation models, with the ambition of ‘establishing a high-level framework that promotes a coherent human-centric European approach to ethical and trustworthy’ AI.

The principles, listed below, may seem familiar, as there’s a fair amount of overlap with the requirements proposed for high-risk AI systems. Importantly, however, the current draft proposes that these should not be the preserve of high-risk AI systems alone and that, whatever their level of risk, AI systems should reflect:

- Human agency and oversight

- Technical robustness and safety

- Privacy and data governance

- Transparency

- Diversity, non-discrimination and fairness

- Social and environmental well-being

An Updated Approach to EU Liability Legislation

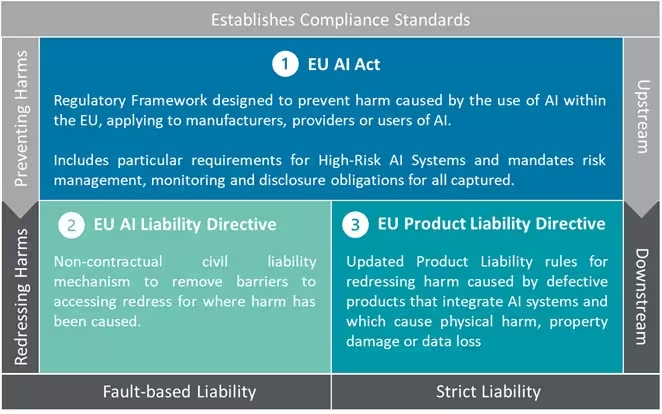

Finally, running in parallel with the amendments to the AI Act are two new Directives proposed by the Commission in September 2022: the EU AI Liability Directive and the amended EU Product Liability Directive. Together with the EU AI Act, these would form a three-part regulatory system that, on the one hand, seeks to protect against harm through the EU AI Act and, on the other, gives citizens a means of redress through liability claims should harm nonetheless occur.

Source: AI: An Updated Approach to EU Liability Legislation, Deloitte


Looking Ahead

As the EU AI Act enters the final stage of the legislative process, it is interesting to note the changes that have been made in response to developments in AI. With the addition of general requirements, the EU is proactively addressing an evolving threat landscape to secure the fundamental freedoms it has set out to preserve for citizens. The creation of the AI Office provides clarity on enforcement and establishes a centralized function for oversight. The new directives enforcing protections for citizens will complement the AI Act itself and strengthen the legal framework for redressing harm suffered from AI.

Just as the technical evolution of the automobile catalyzed regulatory evolution, the compromise text of the Act shows a clear determination to anticipate future developments and to establish institutional capabilities (such as the AI Office) to manage the evolving risks of Generative AI.

In Conclusion

The latest requirements provide a helpful framework for mitigating some of the risks introduced by Generative AI, both to protect the public and to grow confidence and trust in the technology. Given relatively low barriers to entry and a growing number of downstream applications, incorporating these requirements early will be important, especially as regulatory burdens on organizations are likely only to increase in the near future.

Putting these requirements into practice across an organization benefits from AI Governance: setting priorities, along with the associated rules, processes, and requirements designed to fulfill those priorities and to evidence that they are consistently being met. Leaning on AI Governance early helps grow confidence and trust in the technology’s use on the one hand, and on the other, sets your organization up for future compliance as the Act’s requirements are formalized.

The first traffic light was installed in London in 1868: it was gas-lit, manually operated by a police officer, and used semaphore arms as traffic indicators. It was not until 1920s Detroit that the three-color signal was introduced, which has become a rare example of a globally understood and agreed-upon definition of risk (save the occasional blue light replacing green in Japan and South Korea).

From our perspective, whether perfect or not, the EU AI Act takes a bold and proactive approach to setting regional and global expectations for the requirements that must be met to safely develop and deploy AI. The latest amendments are a welcome statement of intent to keep pace with the technology. Regulation, along with AI, will continue to evolve and, eventually, like the traffic light, we will surely arrive at a universally agreed definition of safe and trusted uses of AI.