It was a thrilling moment in motorsport history. The Brazilian Grand Prix was the final race of the 2012 F1 season, and tension was high: Sebastian Vettel led Fernando Alonso by just 13 points. But a first-lap collision dropped Vettel to last place. In one of the most remarkable comebacks in F1 history, Vettel fought his way back to finish sixth, which was enough to clinch the year's World Drivers' Championship.

What was the hidden secret behind Vettel’s success? He had unwavering trust in his race engineering team, who analyzed his data and ran simulations behind the scenes to give him the advice that put him in the best position over the remaining 70 laps.

The high-speed world of AI at scale is not unlike motorsport: staying in control and trusting your data and outputs are the keys to success. Teams must keep three critical parameters in check to stay ahead of the competition and reach peak performance. Let’s dive into the details and discover how Dataiku can be the secret weapon for your AI race team.

#1 Robust Telemetry:

As AI adoption grows across industries, it is increasingly important for organizations to have a well-functioning monitoring system in place. The stakes are high: inadequate monitoring can lead to degraded performance, downtime, lost revenue, and rising costs.

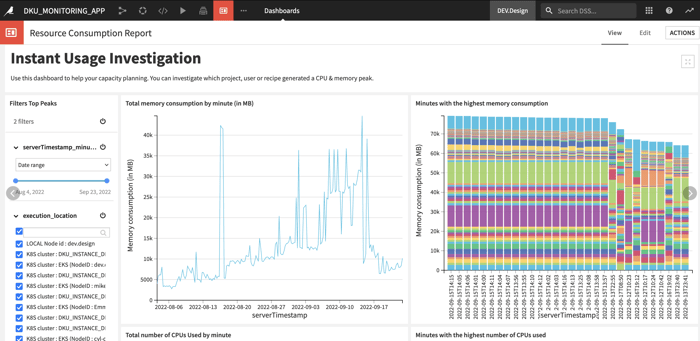

To scale and provide a reliable service, platform administrators need to understand how AI resources are used and for what purpose. This capacity planning is a critical part of systems administration, because it ensures that a system can handle future workloads without running into performance issues.

With Dataiku resource usage monitoring, platform administrators get a consolidated overview of computation resources across all the infrastructures in use. This lets teams take practical steps right away: from consumption analysis to computation breakdowns by infrastructure, resource usage monitoring provides the telemetry needed to observe and precisely monitor AI activity.

#2 A Single View of Performance:

Controlling costs and resources is important, but successful companies also need to continuously improve their AI performance. As AI use cases and models continue to flourish, there is an urgent need for an overview of the analytics projects and models that are developed and deployed in the enterprise. Here are some of the most common challenges that organizations face when driving AI performance:

- How to track model performance and be alerted when necessary?

- How to prioritize models and analytics projects?

- How to ensure models are business driven and attached to strategic initiatives?

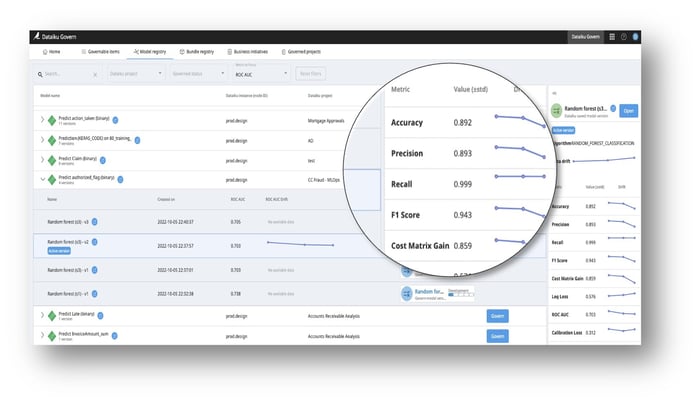

Dataiku provides key capabilities to govern company-wide efforts to build and deploy AI. Dataiku Govern lets users, builders, leaders, and experts track the progress of AI projects in one centralized place. Its model registry serves as a central repository, helping teams review the status and performance metrics of all models across multiple Dataiku instances and projects.

Since key business initiatives (customer retention, fraud detection, or claims analysis, for example) often require multiple analyses and pipelines, you can also group related Dataiku projects under the umbrella of a business initiative. That way, you keep an eye on what matters most and review the initiative's progress in one place.

#3 Guardrails and Safety Belts:

Just like a Formula 1 car needs clear rules, safety checks, and approval processes before hitting the track, AI applications must also undergo a rigorous review process to ensure they are safe and reliable.

This is where the model sign-off comes in. A model sign-off is the process of reviewing, verifying, and approving a machine learning model before it is deployed into production. This process ensures that the model has been thoroughly tested and meets the required standards for accuracy, fairness, and ethical considerations.

For example, consider a financial institution that uses an AI model to make loan approval decisions. Before the model is deployed into production, the IT team performs a model sign-off to ensure that it is not biased against certain groups, such as those with lower incomes.

The IT team also verifies that the model has been tested for accuracy and meets the organization's standards for loan approval decisions. Once the model has passed the sign-off process, the institution can proceed with deploying the model into production with confidence that it will perform as intended and meet the necessary regulatory requirements.

Dataiku's built-in sign-off capabilities give IT teams the tools to implement a predefined governance framework and require specific stakeholders to review and approve models and analytics projects before deployment. This way, only the models and projects that meet the organization's requirements reach production.

![image 3](image%20(10).png)
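To make the sign-off idea concrete, here is a minimal Python sketch of a deployment gate for the loan-approval example. It assumes a policy made of three parts: a set of reviewer roles that must approve, a minimum accuracy, and a maximum allowed gap in approval rates between applicant groups. That last part is a simple demographic-parity check swapped in here as one possible bias test. All class names, fields, roles, and thresholds are hypothetical and do not reflect how Dataiku implements sign-off.

```python
from dataclasses import dataclass, field

# Hypothetical sign-off gate: names, roles, and thresholds are illustrative only,
# not part of any Dataiku API.


@dataclass
class SignOffPolicy:
    required_reviewers: set[str]  # roles that must approve before deployment
    min_accuracy: float           # minimum acceptable accuracy on a holdout set
    max_rate_gap: float           # largest allowed gap in approval rates between groups


@dataclass
class ModelCandidate:
    name: str
    accuracy: float
    approval_rate_by_group: dict[str, float]          # share of applicants approved, per group
    approvals: set[str] = field(default_factory=set)  # roles that have signed off so far


def sign_off_issues(model: ModelCandidate, policy: SignOffPolicy) -> list[str]:
    """Return every reason the model cannot be deployed yet (empty list means ready)."""
    issues = []
    if model.accuracy < policy.min_accuracy:
        issues.append(f"accuracy {model.accuracy:.2f} is below {policy.min_accuracy:.2f}")
    rates = list(model.approval_rate_by_group.values())
    if not rates:
        issues.append("no per-group approval rates were provided for the bias check")
    elif max(rates) - min(rates) > policy.max_rate_gap:
        gap = max(rates) - min(rates)
        issues.append(f"approval-rate gap {gap:.2f} exceeds {policy.max_rate_gap:.2f}")
    missing = policy.required_reviewers - model.approvals
    if missing:
        issues.append("missing approval from: " + ", ".join(sorted(missing)))
    return issues


def can_deploy(model: ModelCandidate, policy: SignOffPolicy) -> bool:
    # Only a model with no outstanding issues is promoted to production.
    return not sign_off_issues(model, policy)


policy = SignOffPolicy({"risk_manager", "compliance_officer"}, min_accuracy=0.85, max_rate_gap=0.05)
loan_model = ModelCandidate(
    "loan_approval_v2",
    accuracy=0.91,
    approval_rate_by_group={"lower_income": 0.42, "higher_income": 0.55},
    approvals={"risk_manager"},
)
for issue in sign_off_issues(loan_model, policy):
    print(issue)
```

In this example the model clears the accuracy bar but is blocked twice: the approval-rate gap between income groups is too wide, and the compliance reviewer has not signed off yet. Collecting every issue rather than stopping at the first one gives reviewers the full picture in a single pass.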

The Race Is On

As with a Formula 1 racing team, success in AI is about more than the driver. Just as the engineering team and the pit crew set the driver up for success, IT teams must create the conditions for AI success. With a well-oiled system that monitors resources, provides a centralized view of performance, and enforces guardrails to keep everything on track, they can ensure their organization is ready to win the AI race.