The year 2023 was one of my favorites so far at Dataiku: Never have we seen so much excitement in the market around AI. In our Forrester study from December — a survey of 220 AI decision makers at large companies in North America — 100% (literally everyone across a swath of industries working on all kinds of different use cases and business problems) said that Generative AI would be important for their organization’s business strategy in the next 12 months.

To that end, 2023 has also been a pivotal year for Dataiku. According to the same Forrester study, 83% of AI leaders are already exploring or experimenting with Generative AI. That is certainly true of our customer base, and many of them already have use cases in production. For example, LG Chem is leveraging Dataiku to release Generative AI-powered services to enhance productivity. Heraeus is actively using Dataiku and Generative AI to improve their sales lead pipeline — with many more success stories to come.

We’ve been busy making sure that our customers have all the tools they need to meet their Generative AI goals and ambitions. In case you haven’t been following along, here’s a roundup of all things Generative AI and Dataiku plus some hints at what you can expect to see more of in 2024.

The LLM Mesh

By now I hope you know the LLM Mesh, a common backbone for Generative AI applications that is reshaping how analytics and IT teams securely access Generative AI models and services.

But did you know that, since its introduction in September 2023, it has already received extensive updates and enhancements? For example:

(Always) More Connections & Models

We built the LLM Mesh to enable choice and flexibility for organizations among the growing number of models and providers. That means a huge (and constant) focus for us here at Dataiku is on expanding connections.

For those using Amazon Bedrock services, you can now choose from Cohere and Llama2 models, and the Hugging Face connection has expanded to include new model families. This means some of the hottest models today (e.g., Mistral, Zephyr, Llama2, Falcon) are included out of the box in the Hugging Face connection, and you can also register additional LLMs from those same Hugging Face model families at any time, with no need to wait or upgrade your Dataiku instance. As always, it’s the best of both worlds: speed and innovation, but also governance and safety.

We are constantly expanding the scope of our integrations to provide customers with the widest possible choice and most flexibility. Because, well, that’s the point — we do the work so you don’t have to. Be sure to look out for additional connections in the very near future.

Embedding Model Upgrades

The embed recipe in Dataiku uses the retrieval augmented generation (RAG) approach to fetch relevant pieces of text from a knowledge bank to enrich user prompts. This improves the precision and relevance of an LLM’s answers.

Using the embed recipe requires a connection to a supported embedding model. When we first released the LLM Mesh, only OpenAI was supported. In December, we added support for embedding with locally running models from Hugging Face. Since January, users can also register embedding models in custom LLM connections, which gives them the flexibility to use embedding models beyond those we provide out of the box.

With local embedding models supported in the LLM Mesh, it’s now possible to build a full RAG workflow completely locally. This is crucial for customers dealing with sensitive documents that cannot be sent through commercial embedding models’ APIs.
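
For readers new to RAG, here is a minimal, self-contained sketch of the retrieval idea described in this section. It is not how the embed recipe is implemented: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and the knowledge bank contents and function names are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, knowledge_bank, k=2):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(knowledge_bank, key=lambda chunk: cosine(q, embed(chunk)), reverse=True)
    return ranked[:k]


def build_prompt(question, knowledge_bank, k=2):
    """Enrich the user question with retrieved context before calling an LLM."""
    context = "\n".join(f"- {chunk}" for chunk in retrieve(question, knowledge_bank, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical knowledge bank: in practice these would be chunks of your documents.
knowledge_bank = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Our support team is available on weekdays from 9am to 5pm.",
    "Shipping to Europe takes between 3 and 5 business days.",
]

prompt = build_prompt("How long do refunds take?", knowledge_bank, k=1)
print(prompt)
```

Because everything here runs in-process, no text leaves the machine, which is the property that matters for sensitive documents. A real workflow would swap the toy `embed` for a locally running embedding model.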

Cost Monitoring Enhancements

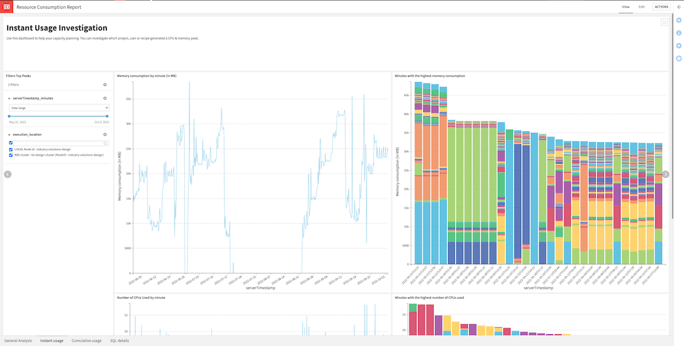

Monitoring LLM resource usage becomes crucial as projects and user numbers grow. While the LLM Mesh has always had a cost monitoring component, in January, we released an updated Dataiku Solution for Compute Resource Usage (CRU) that automatically extracts LLM cost and usage information from audit logs and presents insights in a ready-to-use dashboard.
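
To make the idea concrete, here is a small sketch of the kind of aggregation such a dashboard relies on: reading usage events and totaling calls, tokens, and cost per project. The log format, field names, project keys, and numbers below are all hypothetical and are not the actual Dataiku audit log schema.

```python
import json
from collections import defaultdict

# Hypothetical audit-log lines; the field names are illustrative assumptions.
audit_log_lines = [
    '{"project": "SALES_LEADS", "llm": "model-a", "prompt_tokens": 1200, "completion_tokens": 300, "cost_usd": 0.045}',
    '{"project": "SALES_LEADS", "llm": "model-b", "prompt_tokens": 800, "completion_tokens": 200, "cost_usd": 0.002}',
    '{"project": "SUPPORT_BOT", "llm": "model-a", "prompt_tokens": 500, "completion_tokens": 500, "cost_usd": 0.03}',
]


def summarize_usage(lines):
    """Total the number of calls, tokens, and cost for each project."""
    totals = defaultdict(lambda: {"calls": 0, "tokens": 0, "cost_usd": 0.0})
    for line in lines:
        event = json.loads(line)
        entry = totals[event["project"]]
        entry["calls"] += 1
        entry["tokens"] += event["prompt_tokens"] + event["completion_tokens"]
        entry["cost_usd"] += event["cost_usd"]
    return dict(totals)


usage = summarize_usage(audit_log_lines)
for project, stats in sorted(usage.items()):
    print(f"{project}: {stats['calls']} calls, {stats['tokens']} tokens, ${stats['cost_usd']:.3f}")
```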

That’s Not All…

Other important additions since the LLM Mesh launch in September include:

- The ability to import, export, and delete the weights in the Dataiku model cache for local Hugging Face LLMs.

- OAuth authentication for Azure OpenAI.

- New Public API to list LLMs and Knowledge Banks.

Generative AI Builder Tooling

In addition to the LLM Mesh, which helps teams access and monitor Generative AI services, Dataiku provides even more tools for teams that are building Generative AI use cases, ensuring transparency and control throughout:

Prompt Studio & the Prompt Recipe

While employing LLMs for individual queries holds value, the true potential for LLMs at scale emerges when integrating these models into established data pipelines and projects. However, success hinges on creating tailored AI prompts enriched with pertinent business context and explicit instructions, ensuring effective implementation and impactful outcomes.

That’s why we introduced Dataiku Prompt Studio in July 2023, a tool for designing, testing, and operationalizing optimal AI prompts to achieve business goals. Once our customers and our own data scientists at Dataiku got their hands on Prompt Studio, we got feedback and made some key enhancements at the end of 2023.

For example, previously, users found themselves wondering about the specifics of their prompts: How much data is being fed into the LLM? What is the length of the input? What format does the JSON output follow? With the latest updates, users can now peek under the hood and inspect the prompt being generated and sent on their behalf. This transparency empowers advanced users to tailor prompts according to their needs, ensuring precise and effective interactions with the language model.
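
As a rough illustration of what inspecting a prompt involves, the sketch below renders a template against one row of data, reports its size, and checks that a reply matches the requested JSON format. It is an assumption-laden example, not Prompt Studio's internals: the template, the row, the helper names, and the sample reply are all hypothetical.

```python
import json

# Hypothetical template; doubled braces render as literal braces in str.format.
PROMPT_TEMPLATE = (
    "You are a support analyst. Classify the sentiment of the review below.\n"
    'Respond with JSON of the form {{"sentiment": "positive|neutral|negative"}}.\n\n'
    "Product: {product}\nReview: {review}"
)


def render_prompt(template, row):
    """Fill the template placeholders with values from one dataset row."""
    return template.format(**row)


def inspect_prompt(prompt):
    """Report simple size statistics for the final prompt text."""
    return {"characters": len(prompt), "words": len(prompt.split())}


row = {"product": "Espresso machine", "review": "Heats up fast, but the manual is confusing."}
prompt = render_prompt(PROMPT_TEMPLATE, row)
print(prompt)
print(inspect_prompt(prompt))

# Check that a (hypothetical) model reply follows the requested JSON format.
reply = '{"sentiment": "neutral"}'
parsed = json.loads(reply)
is_valid = parsed.get("sentiment") in {"positive", "neutral", "negative"}
print(is_valid)
```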

After building and perfecting prompts in Prompt Studio, the final step is deploying to the project Flow in Dataiku, which saves the AI enrichment step as a visual recipe. We’ve made some enhancements here as well — notably, in addition to machine learning models and Python functions, users will also have an API endpoint in the API designer for the prompt recipe.

This will give people like machine learning engineers the flexibility they need to embed a curated LLM prompt in real-time applications with API services.

LLM-Powered Text Recipes

Since the introduction of the LLM Mesh, Dataiku includes new recipes that make it easy to perform two common LLM-powered tasks:

- Classifying text (either using classes that have been trained into the model for common tasks such as sentiment or emotion analysis, or classes that are provided by the user), and

- Summarizing text.

Since December, similar to the functionality mentioned above for Prompt Studio, we’ve added an option for LLM-based text analysis recipes to show the underlying prompt. This level of insight marks a significant step forward, enabling users to optimize their Generative AI workflows with confidence and precision as well as sharpen their own prompt engineering skills.

Text Extraction Plugin Improvements

You might already know the text extraction and optical character recognition (OCR) plugin, which we released all the way back in 2020. However, it has recently been updated so that it's more readily usable for RAG by performing smarter and more meaningful chunking. Be on the lookout soon for support for PDF chapters, which will take the usability of this plugin for RAG to the next level.
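
To show why chunking matters for RAG, here is a minimal sketch of one simple strategy: packing whole paragraphs into chunks under a size limit so that no chunk is cut mid-sentence. This is an illustrative assumption, not the plugin's actual algorithm; the function name, the `max_chars` parameter, and the sample document are hypothetical.

```python
import re


def chunk_text(text, max_chars=200):
    """Pack whole paragraphs into chunks of at most max_chars characters.

    A single paragraph longer than max_chars becomes its own oversized
    chunk rather than being cut in the middle.
    """
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    chunks, current = [], ""
    for paragraph in paragraphs:
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars or not current:
            # Still fits, or this is the first paragraph of a new chunk.
            current = candidate
        else:
            # Adding the paragraph would overflow: close the chunk, start a new one.
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


document = (
    "Intro paragraph about the contract.\n\n"
    "Section one covers payment terms.\n\n"
    "Section two covers termination."
)

chunks = chunk_text(document, max_chars=80)
for i, chunk in enumerate(chunks, start=1):
    print(f"--- chunk {i} ({len(chunk)} chars) ---\n{chunk}")
```

Keeping paragraphs intact means each retrieved chunk carries a complete thought, which tends to give the LLM more usable context than fixed-width slices.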

New Tutorials

In addition to providing all these great features for AI builders, the team has also been hard at work developing additional training and tutorials.

For example, the LLM Mesh section of Concepts and Examples contains basic code samples to help you get started as well as more advanced and specific use cases. The Tutorials section also now contains six Generative AI-specific examples walking through how to programmatically train, manage, and deploy LLMs in Dataiku. Be on the lookout for much more to come in terms of in-depth tutorials and LLM-specific additions to the developer guide in 2024.

Human in the Loop & Governance Tooling

Let’s turn from tools for AI builders to tools for those working on validation or QA of models or workflows. As is our philosophy for all AI, generative or not, maintaining a human in the loop is paramount. This is doubly true when dealing with non-deterministic models where hallucinations can be a factor. That’s why Dataiku also provides an array of tools for governance of LLMs.

Automatic LLM Tags

In January, we released an exciting new feature that is the first step in upgrades to Dataiku Govern for LLMs. Governance views now automatically display a special tag for governed projects utilizing an LLM connection, which is especially useful for organizations wishing to enforce increased scrutiny and risk practices for Generative AI-powered solutions.

From a reporting and governance standpoint, these automatic tags mean teams can quickly pinpoint which projects might need additional human-in-the-loop attention.

Managed Labeling

In addition to image classes, object bounding boxes, and text spans — all of which have existed in Dataiku for quite some time — Dataiku managed labeling can now label records (dataset rows) and text for classification use cases.

Watch this space for more updates, including free-form comments labelers can add to a record as they annotate. This will be especially useful when performing LLM-generated content validation, so subject matter experts can provide more context about why outputs were wrong and what answer would have been better.

Compare Rows

In this small but mighty upgrade, we eased the pain of looking at long text fields in Dataiku (common for LLM use cases). What you can do now is pick up to four columns that you want to look at side by side and put them into a comparison view.

So, for example, if you are using an NLP recipe to get the sentiment of a piece of text, asking for a summary, or performing another general LLM task, you can view the original text, the LLM output, and a ground truth column side by side instead of scrolling back and forth across a row.

Again, these are the kinds of updates that can add up in terms of efficiency gains, especially as teams work more and more with LLMs and their outputs.

AI Assistants

Switching gears again from building LLM use cases to using LLMs within Dataiku itself for more productivity, Dataiku now offers three AI assistants:

AI Prepare

The first is for business users and analysts, or really anyone who wants to use natural language for data preparation to speed up time to value with data.

Simply describe how you want to clean and transform your data, and Dataiku uses Generative AI to translate your objectives into a series of preparation steps that you can easily inspect and modify if needed. Pretty powerful, and a huge leap forward when it comes to democratizing the use of data.

AI Code Assistant

Dataiku provides AI Code Assistant in Jupyter Notebooks and VS Code, which is especially useful for citizen data scientists, as we detailed on the blog in December. This handy helper addresses time-consuming tasks users might run into when building out data pipelines in code.

AI Explain

Also in the realm of code comprehension, the most recent addition to the AI assistant lineup is AI Explain. When originally launched, its purpose was to automatically generate descriptions that explain Dataiku Flows or individual Flow Zones. However, now with Dataiku version 12.5, AI Explain also extends to explaining Python code recipes.

Accessible through a panel integrated into Dataiku’s native code editor, AI Explain offers users the ability to dissect and understand code segments with ease — whether unraveling an entire script or focusing on specific snippets.

Note that in addition to differences in where they operate (AI Explain operates exclusively within Dataiku's native interfaces while AI Code Assistant works in embedded IDEs like Jupyter Notebooks and VS Code), AI Explain is powered by Dataiku-managed AI services, whereas AI Code Assistant connects to your approved LLM of choice via the LLM Mesh.

What's Next?

I’ve already given you a few nuggets throughout this blog post of updates for which you should be on the lookout, so stay tuned! If you want more nitty-gritty details, make sure you check out the release notes for Dataiku version 12.5 (and previous versions) to catch all the smaller updates I haven’t mentioned here.

Beyond those updates, we’re excited to continue to work with our customers to build out real-life use cases and continue to support all the required components to make enterprise-grade Generative AI a reality.