Dataiku recently sponsored and attended VB Transform, a three-day virtual conference hosted by VentureBeat that focuses on best practices and tangible ways that business leaders can gain and maintain a competitive edge in an increasingly AI-driven world.

In a fireside chat, Dataiku’s Chief Customer Officer Kurt Muehmel discussed how critical it is for organizations to put their data to work by building human-centric AI models, how Dataiku equips them to do so, and how to truly achieve scale once they have invested in analytics and AI. We’ve highlighted the main points below.

Bringing More Seats to the Table and Reaching the “Embed” Stage

By taking a broad, inclusive approach to data science, machine learning, and AI, organizations will be able to mitigate the negative impacts of data silos and lack of team alignment in order to, ultimately, scale more effectively. By giving more people a seat at the table — from data scientists and engineers to business analysts and marketers — and enabling them to act as both consumers analyzing results from data and creators in the AI development process, organizations can ensure that their scaled-up efforts will have the maximum positive impact.

How, though, can organizations get to scale once they have moved past the conceptual phase and their proofs of concept, seen initial successes with AI, and perhaps even put some products into production? After they have succeeded a handful of times, how can they make this practice repeatable and sustainable for the long term?

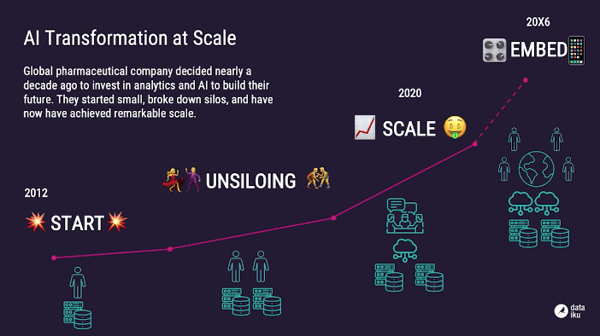

To illustrate AI transformation at scale, Muehmel told the story of a global pharmaceutical company that decided nearly a decade ago to invest in analytics and AI to build its future. The company started small, broke down silos, and has now achieved remarkable scale. When it began its Enterprise AI journey, the company identified which data sources were available and what teams needed to get from that data, then started stitching those sources together. Much of the work involved figuring out which data was sitting in which data warehouse and identifying the needs and skills at the company’s disposal in order to build out new data and analytics capabilities effectively.

When the company got started with Dataiku, it focused on breaking down barriers around both the data and the people on internal teams, bringing in a variety of teams to work with the data. Today, the company has over 3,000 different data projects running in parallel, hundreds of thousands of datasets, and nearly 1,000 direct contributors to the data process. These people, coders and visual users alike, are using the tools best suited to them to uncover new insights.

Once initial scale is achieved, the goal shifts to getting everything embedded (the analytics, the AI processes built directly into the applications, and the dashboards) and to doing all of this throughout the organization. When that happens successfully, each person can access and work with the datasets they need without having to worry about where the work is running.

Another insightful piece of advice from Muehmel was that when scaling AI projects, organizations should not attempt to predict the future, but rather build for resilience. It’s impossible to predict what the future of technology will look like as it is ever-evolving, so organizations need to be able to keep pace with the changes and swap in the most innovative solutions as smoothly as possible. This isn’t to say that organizations should drop their existing technologies whenever a new product comes to the market, but rather align themselves with the products that help them most accurately solve their bespoke business problems.

Responsible AI

Creating human-centered AI grounded in explainability and the demystification of black-box models is ultimately more equitable for users. Muehmel stated that as tool builders, Dataiku feels a great responsibility to ensure that the harms that have historically resulted from bias in AI are not perpetuated by automated AI systems. When scaling use cases, it is critical for organizations to acknowledge that, while there is tremendous business value and potential, there is a risk of negative impact.

The AI trust crisis (e.g., bias against specific populations in healthcare, job hiring, the legal system, and so on) is something that Dataiku feels strongly about: we want not only to raise awareness of the reality of the problem but also to educate the AI “builders” so they understand exactly what is going on. To achieve success, we need to broaden the scope of people participating in the creation of these capabilities and do so in a diverse and inclusive way. Muehmel went on to say that Dataiku’s vision of Responsible AI involves:

- Explainability, so that instead of impenetrable black-box models we have models that can explain themselves and tell us what’s happening, presenting their results in a way that allows individuals to say a result is wrong, causes harm, or involves a risk of harm

- Creating a culture of transparency

- Global governance of data and AI

When building for scale, we have a great responsibility to enable and train our users. Muehmel used the analogy of a potentially dangerous tool like a power saw: the toolmaker has a responsibility to build it safely, with appropriate safeguards, training material, recommendations, and failsafes, so the tool can be used in a way that doesn’t cause harm. We continuously strive to further incorporate these capabilities into Dataiku and equip our customers with the services and materials they need. We acknowledge that we need more voices and eyes on these projects and wholly advocate for bringing more AI builders into the picture.