Imagine that you and your team spent months building a powerful analytics or data science model full of great insights, but nobody ever used the dashboards or automated decisions built on it. That would be demoralizing.

Getting the most impact from all your work means deploying these prototypes in production, which means putting them in an environment where people and programs can use them simply and confidently. Here at Dataiku, we often have conversations about production. Two questions we regularly encounter are: "What exactly do you mean by production?" and "How exactly does Dataiku enable production?"

In this post, we’ll offer a definition of production and explain what it involves. Then, we’ll discuss its two distinct forms: batch and real-time. Finally, we’ll talk about how Dataiku not only makes it technically easy to deploy your projects in production, but also offers features that make production-readiness a core tenet of your team’s work.

Ready?

Production and Its Discontents

Throughout our many conversations with people at all sorts of organizations about production analytics, what’s the common thread? Well, the reality is that everyone has his or her own definition. But if we were forced to put our heads together to propose a single definition for a production environment, it would be something like this:

“An environment that is runnable whenever necessary and able to serve external consumers for their day-to-day decisions, whether those consumers are humans or software.”

This is distinct from what we call a design (or development) environment, which can crash without having any significant impact on the business. The design environment is also often referred to as a sandbox, because while it offers a place to experiment, it can’t help or hurt anything else.

How can you move from a sandbox to production and ensure that everything goes smoothly?

For some organizations, production is the realm of IT, while design is the realm of data science and analytics. In these organizations, the analytics team connects to data sources and builds models (in R, SAS, SPSS, or other tools) in the design environment and then hands these models over to IT, who often recode them in yet another language, such as Java or C, before making them available for broader use. The IT department then supervises these models and the databases and applications they affect. The whole process can take weeks or even months.

For organizations on the other side of the spectrum, where the analytics team handles deployment, the complexity of managing and monitoring workflows in production can spread the team too thin or call for skill sets that not all teams have.

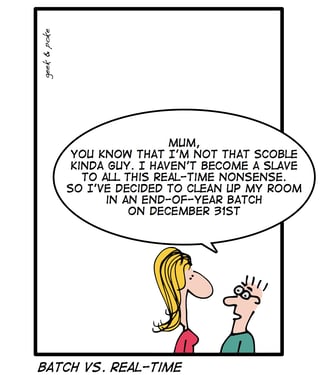

Batch and Real-Time Environments

In addition to the aforementioned differences between companies in who “owns” production, there are also different types of environments. Depending on your real-time needs, your production environment will generally fall into one of two types of architecture: batch or real-time. Let’s use some examples to define them.

In an example of a batch environment, the analytics team at a chain of gasoline stations has built and deployed a model to predict the next day’s raw material requirements for each location across the country. The model uses previous sales data to automatically generate predictions of what each location will need, which can then trigger automatic purchasing and dispatch decisions, as well as flow into dashboards and reports.
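To make the batch pattern concrete, here is a minimal Python sketch of a nightly job like the one described above. It assumes the previous sales data arrives as hypothetical `(location, day, quantity)` tuples and uses a naive trailing average as a stand-in for the real model; `forecast_next_day` is an illustrative name, not a Dataiku function. The point is that every location is scored in one scheduled run, after which the results could feed purchasing rules and dashboards.

```python
from collections import defaultdict


def forecast_next_day(sales_history, window=7):
    """Predict each location's next-day requirement for the whole batch.

    sales_history: iterable of hypothetical (location, day, quantity) tuples.
    The "model" is a naive mean of the last `window` days of sales,
    standing in for whatever model the team actually trained.
    """
    by_location = defaultdict(list)
    for location, day, quantity in sales_history:
        by_location[location].append((day, quantity))

    predictions = {}
    for location, rows in by_location.items():
        rows.sort()  # chronological order by day
        recent = [quantity for _, quantity in rows[-window:]]
        predictions[location] = sum(recent) / len(recent)
    return predictions


if __name__ == "__main__":
    history = [
        ("Lyon", 1, 100), ("Lyon", 2, 120),
        ("Paris", 1, 300), ("Paris", 2, 280),
    ]
    # One scheduled run scores every location at once.
    print(forecast_next_day(history, window=2))  # {'Lyon': 110.0, 'Paris': 290.0}
```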

In an example of a real-time environment, a potential buyer of a car applies for a loan, and the data from the loan application is fed into a credit scoring model by querying an API, generating a live assessment of the creditworthiness of the applicant. Now, the loan can be approved or declined, or a company representative can be prompted to ask for more information, all of this in real time.
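The real-time case differs mainly in granularity: one record arrives and one decision goes back. The sketch below scores a single application with a toy logistic model and maps the score to the three outcomes described above. The feature names, coefficients, and thresholds are hypothetical values chosen purely for illustration, not a real credit model.

```python
import math

# Hypothetical, hand-picked coefficients for illustration only;
# a real credit model would be trained on historical loan data.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -1.0


def score_application(application):
    """Return a toy logistic estimate of the probability of repayment."""
    z = BIAS + sum(w * application[name] for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def decide(application, approve_at=0.7, decline_below=0.4):
    """Map the live score to approve, decline, or a request for more info."""
    p = score_application(application)
    if p >= approve_at:
        return "approve"
    if p < decline_below:
        return "decline"
    return "request_more_info"


if __name__ == "__main__":
    applicant = {"income_k": 80, "debt_ratio": 0.2, "late_payments": 0}
    print(decide(applicant))  # approve
```

In a real deployment, a function like `decide` would sit behind the API endpoint that the loan application system queries, so each request gets an answer in a single round trip.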

Batch and real-time production environments address different concerns and require different types of infrastructure. Neither is inherently better than the other; the right choice depends on your organization’s needs.

How Dataiku Deploys to Production

Clearly, there is significant variation in what production analytics means between businesses (and, in some cases, the needs and expectations of different teams at the same company). But no matter what the situation, technically speaking, Dataiku makes it simple to deploy workflows to production.

Dataiku’s batch production node allows you to assemble all your code and visual components as an end-to-end pipeline in the design node, then take a “snapshot” of it via a bundle, and deploy it to the production node. Each bundle is saved and acts as an insurance policy that you can roll back to in case anything goes wrong.
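The bundle idea can be illustrated with a small Python model of snapshot-based deployment. `BundleStore` and its methods are hypothetical and do not mirror Dataiku’s actual API; the sketch only shows why immutable snapshots make rollback safe: each bundle is a deep copy, so further edits in the design environment cannot alter what is already deployed.

```python
import copy
from dataclasses import dataclass, field
from typing import Any, List, Optional


@dataclass
class BundleStore:
    """Toy model of snapshot-based deployment (hypothetical, not Dataiku's API)."""

    bundles: List[Any] = field(default_factory=list)  # every snapshot ever created
    active: Optional[int] = None  # index of the currently deployed bundle

    def create_bundle(self, pipeline):
        """Freeze a deep copy of the pipeline and return its bundle id."""
        self.bundles.append(copy.deepcopy(pipeline))
        return len(self.bundles) - 1

    def deploy(self, bundle_id):
        """Make an existing bundle the one running in production."""
        if not 0 <= bundle_id < len(self.bundles):
            raise ValueError(f"unknown bundle id: {bundle_id}")
        self.active = bundle_id

    def rollback(self):
        """Re-activate the bundle created just before the active one."""
        if self.active is None or self.active == 0:
            raise RuntimeError("no earlier bundle to roll back to")
        self.active -= 1

    def running(self):
        """Return the snapshot currently deployed."""
        if self.active is None:
            raise RuntimeError("nothing deployed yet")
        return self.bundles[self.active]


if __name__ == "__main__":
    store = BundleStore()
    pipeline = {"steps": ["clean", "train"], "model_version": 1}
    store.deploy(store.create_bundle(pipeline))
    pipeline["model_version"] = 2  # keep editing in the design environment
    store.deploy(store.create_bundle(pipeline))
    store.rollback()  # something went wrong with the second bundle
    print(store.running()["model_version"])  # 1
```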

For Dataiku’s real-time production node, you deploy a package (i.e., the trained machine learning model and any supporting datasets) to the production environment, where it is queried through an API to provide real-time scoring of individual records.

In fact, Dataiku lets you leverage both approaches, with a real-time node built on top of, or integrated with, a batch node.

Dataiku Lets You Practice the Way You Play

If you’re an athlete preparing for a competition or a musician preparing for a concert, the best thing you can do is practice with the same rigor and intensity that you will bring to your real performance. Likewise, in analytics, workflows are built in a design environment before being deployed to production: the design environment is the practice studio, and production is the concert hall. Ideally, you would practice on the same instrument you’ll play in the concert hall.

It's important to practice with the same rigor you'll bring to the production, whether in music, sports ... or data science.


That’s why, when you use Dataiku’s design node, the product features you work with mimic as closely as possible those on a production node. This applies especially to automation features, such as scheduling and scenarios. As a result, production-readiness is built into every workflow you create from the first step, rather than being something you worry about only as deployment approaches.

For monitoring, Dataiku offers scenarios and custom quality-control metrics, and it can notify you through almost any channel you prefer if anything goes wrong. You can create scenarios that rebuild data and score new datasets, either on a schedule or in response to events (e.g., a new row being added to a monitored dataset). Rolling back to earlier versions is simple, and an audit trail lets you dig into the logs to identify the source of any issue.
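As a rough illustration of how schedule-based and event-based triggers combine with quality checks, here is a Python sketch. The functions `time_trigger`, `new_rows_trigger`, and `run_scenario` are hypothetical stand-ins written for this example, not Dataiku’s scenario API, and notification is reduced to a callback that could wrap email, chat, or any other channel.

```python
from datetime import datetime


def time_trigger(now, hour, minute=0):
    """Time-based trigger: fires when the clock matches the schedule."""
    return now.hour == hour and now.minute == minute


def new_rows_trigger(previous_count, current_count):
    """Event-based trigger: fires when rows were added to the monitored dataset."""
    return current_count > previous_count


def run_scenario(steps, checks, notify):
    """Run each step (rebuild, score, ...), then evaluate named quality checks.

    Every failing check is reported through `notify`; the return value
    tells the caller whether all checks passed.
    """
    for step in steps:
        step()
    failed = [name for name, check in checks.items() if not check()]
    for name in failed:
        notify(f"check failed: {name}")
    return not failed


if __name__ == "__main__":
    dataset = [0.2, 0.4, None]  # toy monitored dataset; one new row just arrived
    log, messages = [], []
    checks = {"no_missing_values": lambda: all(v is not None for v in dataset)}

    if time_trigger(datetime.now(), hour=6) or new_rows_trigger(2, len(dataset)):
        ok = run_scenario([lambda: log.append("rebuild and score")], checks, messages.append)
        print(ok, messages)  # False ['check failed: no_missing_values']
```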

Dataiku can also automatically retrain your model, keeping it up to date as your data changes over time. If your model starts to "drift" (that is, to make systematically worse predictions on incoming data), you can periodically retrain it using the new data you've collected since the original deployment. On the production node, Dataiku can compare models so that you don't accidentally deploy a poorly performing one. For instance, you can specify that a new version of a model will be deployed only if it is a viable competitor, such as having an AUC at least as good as the existing model's.
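The deployment rule described above (deploy the retrained model only if its AUC is at least as good) can be sketched in plain Python. This version computes AUC with the rank-based Mann-Whitney formulation and assumes both the current model and the retrained one are scored on the same recent labeled data; `auc` and `should_deploy` are illustrative helpers, not Dataiku functions.

```python
def auc(labels, scores):
    """ROC AUC as the probability that a random positive outscores a random
    negative (Mann-Whitney formulation), with ties counted as half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def should_deploy(labels, champion_scores, challenger_scores):
    """Deploy the retrained (challenger) model only if its AUC on the same
    evaluation data is at least as good as the current (champion) model's."""
    return auc(labels, challenger_scores) >= auc(labels, champion_scores)


if __name__ == "__main__":
    labels = [0, 0, 1, 1]
    champion = [0.1, 0.6, 0.4, 0.8]    # current model: AUC 0.75
    challenger = [0.2, 0.3, 0.7, 0.9]  # retrained model: AUC 1.0
    print(should_deploy(labels, champion, challenger))  # True
```

The pairwise loop is quadratic in the number of examples, which is fine for a sketch; a production implementation would sort the scores instead, or use a library routine such as scikit-learn's `roc_auc_score`.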

In summary

- It is vital that data science and analytics teams can deploy their workflows to production, whatever their production environment looks like.

- Dataiku provides a tool that makes it easy for data science and IT teams to collaborate on building user-friendly real-time and batch scoring systems.

- Dataiku offers almost all production-related features, such as scheduling, monitoring, and scenarios, in its design node, so that you can build production-ready workflows from the first step.

To find out more about the Dataiku approach to production and the features that facilitate it, watch our live training.