It’s easy to talk about data ethics in science-fiction hypotheticals. But Asimov’s Laws and imagined trolley problems are only thought exercises. When building machine learning models, data scientists face real ethical concerns every day, as legal compliance, civil liberties, model accuracy, and profit margins vie for dominance.

To build models that function well and also use data ethically, data scientists must confront their role within an organization and within society at large. It’s no easy task, and it requires calm consideration. However, it’s the only way to embed an organization’s mission and ethics into its analysis, from the code up.

Widespread Implications

Maryam Jahanshahi is a Research Scientist at Tap Recruit, and one of the experts featured in the Data Science Pioneers documentary. She explains that “one part of every data scientist’s role is now going to be thinking about the ethics of the data they have and the ethics of the questions that they’re answering.”

Embedding ethical analysis into data science workflows will look different on each team and in each subdomain. Concerns about private healthcare data differ drastically from those about public social media data, but in every case, building privacy and civil liberties considerations into workflows helps data scientists meet their obligation to build responsible machine learning models.

Here are three ways to get started with responsible data science right away:

- See the Big Picture. Ask big questions about the context surrounding model construction, such as “What value is this providing the user?” or “Are users aware of how their data is being used?” The GDPR requires organizations to have a lawful basis for processing users’ personal data. By evaluating how much a given dataset actually improves the user experience (and discussing these questions with peers), data scientists demonstrate a commitment to users’ interests. They may not always make the right call, but persistence over time will advance the conversation and improve their understanding.

- Subpopulation Analysis helps combat biases present in historical datasets. Even the most accurate model can perpetuate bias already present in the data it learns from and the community it describes. By visualizing how a model’s behavior may diverge across groups defined by traits such as gender and race, data scientists can detect existing bias and counterbalance their models against it.

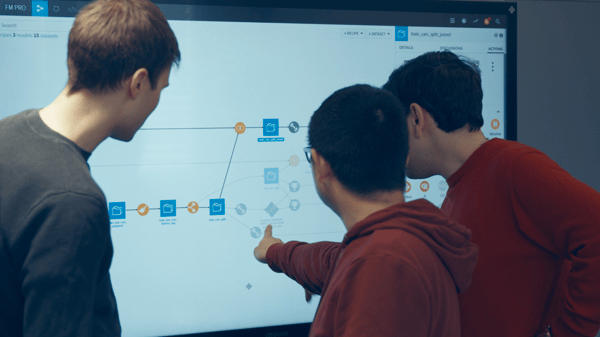

- Collaboration across teams is critical to improving model functionality in general, and especially to ensuring that users’ data is explored ethically. An analysis that one data scientist sees as harmless may look potentially damaging to another. Involving additional teams, such as legal or user advocacy, brings data scientists closer to regulatory requirements and user wishes, ultimately leading to better models. If non-technical teammates can visualize and understand the model at hand, this collaboration will be faster and more meaningful. There’s never enough time to consult everyone, and too many voices may never reach a single conclusion, but periodic cross-team check-ins will help the whole organization behave more responsibly.
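
Subpopulation analysis can start with something as simple as breaking a model’s evaluation metrics down by group, which produces the numbers behind any chart of divergent behavior. The sketch below is a minimal, standard-library-only Python example. It assumes a binary classifier whose true labels, predictions, and a group attribute are already available as parallel lists. The function names `subgroup_metrics` and `largest_gap` and the toy data are hypothetical, chosen only for illustration.

```python
from collections import defaultdict


def subgroup_metrics(y_true, y_pred, groups):
    """Compute accuracy and positive-prediction rate for each subgroup.

    Assumes a binary task: y_true and y_pred contain 0/1 values.
    groups holds one group label per example (e.g. a demographic attribute).
    """
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("y_true, y_pred and groups must have the same length")

    # Running tallies per group: example count, correct predictions, positive predictions.
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        tally = counts[group]
        tally["n"] += 1
        tally["correct"] += int(truth == pred)
        tally["positive"] += int(pred == 1)

    # Convert tallies into per-group rates.
    return {
        group: {
            "n": tally["n"],
            "accuracy": tally["correct"] / tally["n"],
            "positive_rate": tally["positive"] / tally["n"],
        }
        for group, tally in counts.items()
    }


def largest_gap(metrics, key):
    """Difference between the highest and lowest value of `key` across groups."""
    values = [m[key] for m in metrics.values()]
    return max(values) - min(values)


if __name__ == "__main__":
    # Made-up toy data, purely for illustration.
    y_true = [1, 0, 1, 1, 0, 1, 0, 0]
    y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

    metrics = subgroup_metrics(y_true, y_pred, groups)
    for group, m in sorted(metrics.items()):
        print(group, m)
    print("accuracy gap:", largest_gap(metrics, "accuracy"))
```

Accuracy and positive-prediction rate are only two of many possible per-group metrics. Which gaps matter, and how large a gap is acceptable, depends on the application and is a good question to bring to the cross-team discussions described above.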

Next Steps

Collaboration on responsible machine learning affects everyone who benefits from data analysis (so... everyone). While internal communication is critical, larger conversations in the data community are necessary to establish global standards. Instead of bumbling around in the dark, data scientists can band together to tackle ethical questions with real impact.