Big data. The term means many things to many people. The best definition I've heard is data that won't fit on your laptop. With 1 terabyte hard drives available by "fit" I mean it's too big to process on your laptop. A top-of-the-line MacBook Pro packing a quad-core processor and 16GB of RAM can analyze a lot of data, however it's easy to surpass even those mighty specs.

For instance, if you want to download the Airline On-Time Performance data, you'll need 12GB. Want the Reddit Comment Corpus? You'll need 250GB just to store the compressed data. Want to uncompress it? You had better purchase an external hard drive. And analyzing it? You're going to need some more power to do that.

So What About Excel?

A number of years ago datasets could easily be opened and worked with in Excel. There are a number of powerful features in Excel that allow you to clean, standardize, and munge data. However, even the mighty spreadsheet has its limitations. But what exactly are they? Sure, a 100 megabyte spreadsheet seems to take forever to open even on a Windows machine packed with memory, but what do we mean by "limitations?"

Since Excel 2007, the following have been the max capabilities of the application*:

- Worksheet size: 1,048,576 rows by 16,384 columns

- Number of worksheets: limited by available memory

- Column width: 255 characters

- Number of characters in a single cell: 32,767

- Number of characters in the header or footer: 255

What about the PowerPivot Excel add-in you ask? That supports files up to 2GB in size and enables you to work with up to 4GB of data in memory*. That's pretty good, but not good enough to handle even one of today's small datasets.

So what do we do if our dataset is larger than what Excel can handle? Or if we're trying to combine multiple datasets?

Let's Replace Excel and Keep the Familiarity

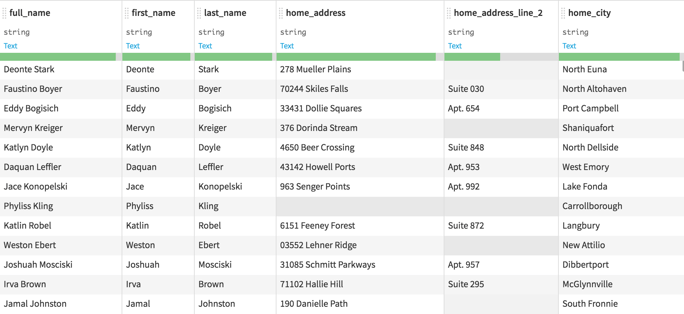

The bottom line is this — Excel is very easy to use, which is one reason so many businesses leverage it. But as we've seen it has limitations. Many of today's datasets simply won't work in it. Enter Dataiku. One of the things I love about Dataiku is how easy it is to view and work with my data, whether it's in a CSV file, a SQL database, or Hadoop cluster.

Note: This is invented data, but real address data would be classified as sensitive personal information, (SPI) and would require user identity protections before models could legally consume SPI. If you're interested in a deep-dive into analyzing sensitive data, check out our guidebook on executing data privacy-compliant data projects.

Even better than just seeing my data, is that I can see how the transformations I'm thinking of applying will affect the data, all without changing the underlying data!

How many times have you made a major update to a spreadsheet, saved and closed it, and then realized you didn't want to save the update, but it was already too late? I'll admit, I've done it. But with Dataiku, we don't have to worry about that.

What About My Macros?

More advanced Excel users have created macros with Excel VBA that help automate parts of the spreadsheet. I've seen macros that will:

- Manipulate data and text

- Apply style and formatting

- Pull data from various data sources (databases, text files, etc.).

- Create entirely new spreadsheets

- Do all of the above in any given order

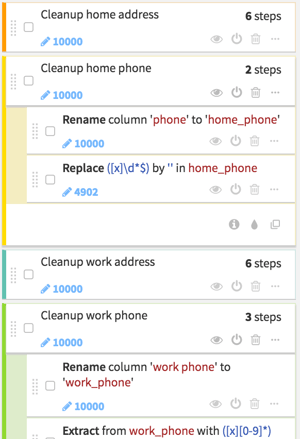

In Dataiku we have steps, which we can chain together to create recipes.

A data cleaning recipe for a full address might be composed of the following steps:

- Trim the whitespace from both ends of the string.

- Extract the zip code.

- Extract the state.

- Standardize the formatting of the state.

- Extract the city.

- Extract the apartment or suite number.

- Split the address into the house number and street name.

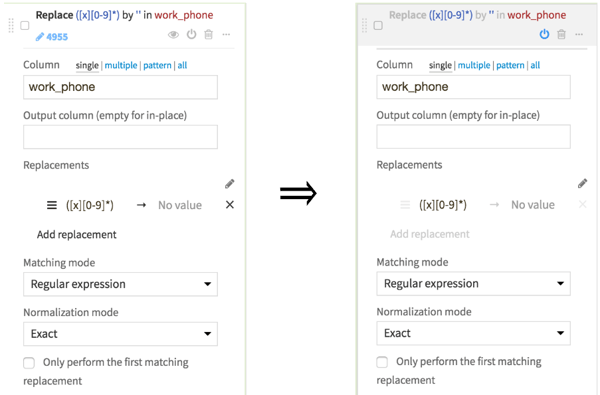

Going back to what I said earlier about saving changes you don't want, if you don't want to apply a particular step you simply turn it off.

No more try and undo!

As Easy as Excel With a Lot More Power

Excel has its merits and its place in the data science toolbox. For many companies it's the go-to tool for working with small, clean datasets. But when you're working with data that's big or messy or both, and you need a familiar way to clean it up and analyze it, that's where data tools come in.