We’ve all heard the scary stories, like the cyberattack in Atlanta (to name just one). We’ve all read the terrifying headlines: “In Baltimore and Beyond, Stolen N.S.A. Tool Wreaks Havoc”; “I’m developing AI that can read emotions. It’s not as creepy as it sounds.” Yes, it is.

We’ve all seen the movies and TV shows where the AI wins (I’m talking about all of Season 2, Episode 3 of The Blacklist; basically every season and at least half of all episodes of 24; and Seasons 2 through 4 of Person of Interest).

I think the question on everyone’s mind — or at least, anyone who has watched Black Mirror — is: when will real life catch up to fiction? I’d argue that in some ways, if you look at the real stories and the real headlines, we’re already there. Which is partly why it’s not that difficult to imagine, if we continue down this path, what headlines might look like in 10 years (or maybe even less):

- Is AI-Generated Coercive Sound a New Form of Torture?

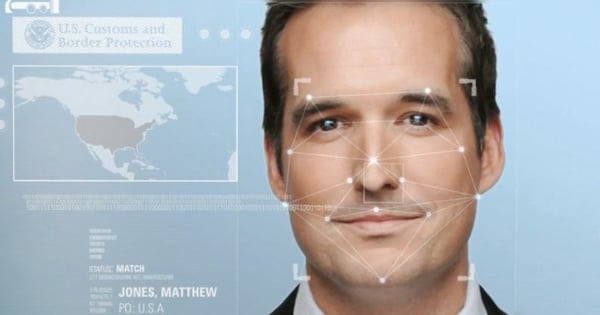

- Public Uproar Following Face Recognition Used in Secret for 10 Years

- The Anti-AI Party Is Polling Above 20% Across Multiple Countries in EU 2029 Elections

Will facial recognition technology become so mainstream that it’s used on you wherever you go, without your consent?

Given this possible trajectory, the question we as people, as consumers, as employees, as (in my case) software vendors must ask is: what can we do to turn this around? How do we make the AI of the future likeable?

Taking Responsibility

The reality is that no one today (at least, I hope) is purposefully designing AI systems to introduce or reinforce bias, or to exhibit any of the other traits that make AI scary and unlikable.

But at some point, we can’t all hide behind good intentions. The fact that many people today aren’t taking specific care, or specific actions, to ensure AI performs properly and ethically is a problem. In other words, today’s lackadaisical attitudes toward AI, and toward the impact its decisions have on real people, are a bigger issue than intentional malice.

It’s up to all of us (whether managers, individual contributors, or even consumers) to change our processes — or in the case of consumers, demand change — in two areas:

- Putting (and keeping) a human in the loop who is not only empowered but encouraged to speak up when an AI system seems headed down the wrong path, or has already gone down it.

- Realizing that accountability in AI isn’t cheap; it’s hard work. It takes a lot more effort to put care into something than to disclaim responsibility for its outcomes because, “Hey, it’s AI. Yeah, I built it, but it has a mind of its own, so it’s not my fault.”

Software Designed for Responsibility?

I believe that when it comes to AI technology, software vendors have a responsibility here too. And that responsibility is threefold:

- AI tools should make it costly to overlook bias or other problems in AI systems. AutoML, not to mention further developments in the automation space, in some ways puts people a little too much on autopilot. Think of how the spread of automated trading in finance has been linked to stock market volatility. If automation is where we’re heading, then clear checks built into the process (for bias, interpretability, etc.) are critical.

- Human responsibility should be explicit, and software systems should encourage this. That is, at the end of the day, put real people at the end of the line of responsibility for AI systems: not just teams or groups, but individuals who must answer for it if things go wrong.

- We should make it easier for knowledge (and thus experience, viewpoints, and ideas) to compound. That means it’s not one person building something alone and releasing it into the wild; many, many people are involved, which means many opportunities to catch something that’s gone wrong (especially if the first point is done well).
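To make these three responsibilities a little more concrete, here is a minimal sketch of what a software-enforced check might look like: a hypothetical pre-deployment gate that blocks a release unless it passes a bias check, has more than one reviewer, and names a single accountable owner. Everything here is an illustrative assumption rather than any real product’s API: the names `approve_release`, `disparate_impact`, `ReleaseBlocked`, and `ReleaseRecord` are made up, the bias metric (a disparate impact ratio, just one of many possible fairness measures) is a stand-in, and the 0.8 threshold borrows the “four-fifths” rule of thumb rather than any universal standard.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Sequence


class ReleaseBlocked(Exception):
    """Raised when a model fails a (hypothetical) pre-deployment check."""


@dataclass
class ReleaseRecord:
    owner: str               # one named person, not a team
    reviewers: list[str]     # everyone who examined the model before release
    disparate_impact: float  # the bias metric the release was approved with


def disparate_impact(predictions: Sequence[int], groups: Sequence[str],
                     privileged: str) -> float:
    """Lowest positive-prediction rate among other groups, divided by the privileged group's rate."""
    rates = {}
    for group in set(groups):
        picked = [p for p, g in zip(predictions, groups) if g == group]
        rates[group] = sum(picked) / len(picked)
    if privileged not in rates or rates[privileged] == 0:
        raise ValueError("privileged group missing or has no positive predictions")
    others = [rate for group, rate in rates.items() if group != privileged]
    if not others:
        raise ValueError("need at least one group to compare against")
    return min(others) / rates[privileged]


def approve_release(predictions: Sequence[int], groups: Sequence[str], privileged: str,
                    owner: str, reviewers: Sequence[str],
                    min_ratio: float = 0.8, min_reviewers: int = 2) -> ReleaseRecord:
    """Refuse to release unless a named owner, enough reviewers, and the bias check are all in place."""
    if not owner.strip():
        raise ReleaseBlocked("a named individual must own this release")
    distinct = {r.strip() for r in reviewers if r.strip()}
    if len(distinct) < min_reviewers:
        raise ReleaseBlocked(f"need at least {min_reviewers} reviewers, got {len(distinct)}")
    ratio = disparate_impact(predictions, groups, privileged)
    if ratio < min_ratio:
        raise ReleaseBlocked(f"disparate impact {ratio:.2f} is below {min_ratio}")
    return ReleaseRecord(owner=owner.strip(), reviewers=sorted(distinct), disparate_impact=ratio)
```

For example, if group “a” receives positive predictions 75% of the time and group “b” only 25%, the ratio is 0.25 / 0.75 ≈ 0.33 and `approve_release` raises `ReleaseBlocked` instead of quietly shipping the model. The design choice worth noting is that the gate fails loudly by default: skipping the check or leaving the owner blank is harder than doing the work.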

I like to believe that all of these responsibilities and new product features will give rise to a new discipline that, in turn, paves the way to a more likeable AI: responsible AI design.