This month, we are zooming in on the operational guidelines spearheaded by Singapore’s Personal Data Protection Commission (PDPC)*. Since 2019, the regulator’s approach to AI governance has been remarkably forward-thinking: it has helped 200+ organizations adopt its guidelines by delivering actionable governance best practices and real-life implementation examples.

The Singaporean frameworks outline fundamental principles and detailed guidelines to help any organization get up to speed with AI governance. Governing AI will soon become a must-do, driven by AI-specific regulations such as the draft Artificial Intelligence Act proposed by the European Commission. The “why” of AI governance is now evolving into a “how,” and the framework created by Singapore provides you with a powerful baseline from which to build.

This blog post is the fifth in a series (check out the first one on France, the second on Canada, the third on the European Union, and the fourth on the U.S.) focusing on these directives, regulations, and guidelines, which are on the verge of being enforced, and their key takeaways for AI and analytics leads.

*The Personal Data Protection Commission (PDPC) is Singapore’s main authority for administering the Personal Data Protection Act (PDPA), 2012.

Step 1: A Universal AI Governance Framework Already Adopted by 200 Organizations

Remember the self-regulatory stance of the U.S.? The Singaporean government holds similar beliefs. Yet, their self-regulatory stance is not leading to the Wild West of AI.

What? How is this possible? The PDPC has outlined detailed guidance that the private sector can readily implement; in other words, a step-by-step guide to AI governance and compliance. The framework has also stood the test of time: it has been updated through public and industry consultations and supplemented with further guidance and references to ease adoption.

Singapore’s AI Governance Framework

In 2019, the PDPC released the first edition of the Model AI Governance Framework (MAIGF) at the World Economic Forum (WEF) in Davos, Switzerland. From the outset, it was positioned as a “living document,” open to industry and institutional feedback and due for review within a couple of years.

It’s the first piece of guidance on AI governance in Asia and aims to be:

- Algorithm agnostic: It applies to both AI and data analytics methods.

- Technology agnostic: It applies regardless of the development language and data storage method.

- Sector agnostic: It serves as a baseline that organizations can build on with sector-specific considerations and measures.

- Scale and business model agnostic: It does not focus on scale or size.

The framework is centered around two guiding principles, four areas of guidance, and real-life examples, all of which are outlined below:

- AI systems should be human-centric (i.e., ensure humans can easily interact with the system) and their decisions should be explainable, transparent, and fair.

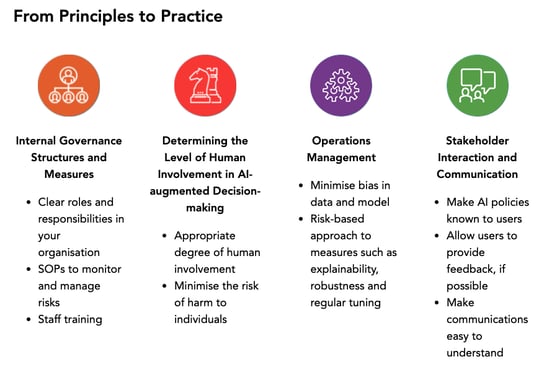

- These principles should be translated into (a) internal governance structures and measures, (b) processes gauging human involvement in AI-augmented decision making, (c) operations management, and (d) stakeholder interaction and communication.

MAIGF’s four areas of guidance — source

- Real-life examples from world-renowned organizations (e.g., Mastercard, Facebook, and Merck Sharp & Dohme) showing how best to implement the guidance: for example, how a probability-versus-severity-of-harm matrix was used to assess potential harm and determine the appropriate degree of human intervention.

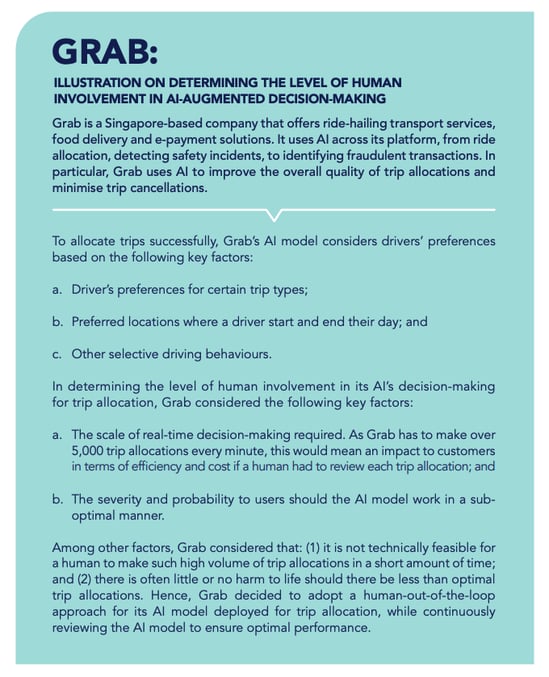

MAIGF’s real-life example on determining the level of human involvement in AI-augmented decision making — source
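To make the matrix idea concrete, here is a minimal Python sketch of how a team might encode it. The three oversight levels (human-in-the-loop, human-over-the-loop, and human-out-of-the-loop) are the ones the MAIGF discusses; the low/medium/high rating scale and the value in each cell of `HARM_MATRIX` are our own illustrative assumptions, not values taken from the framework.

```python
from enum import Enum


class Oversight(Enum):
    """Levels of human involvement discussed in the MAIGF."""
    IN_THE_LOOP = "human-in-the-loop"          # a human makes or approves each decision
    OVER_THE_LOOP = "human-over-the-loop"      # a human monitors and can step in
    OUT_OF_THE_LOOP = "human-out-of-the-loop"  # the system decides without human review


_IN, _OVER, _OUT = Oversight.IN_THE_LOOP, Oversight.OVER_THE_LOOP, Oversight.OUT_OF_THE_LOOP

# Rows: probability of harm. Columns: severity of harm.
# The cell values are illustrative assumptions, not taken from the MAIGF.
HARM_MATRIX = {
    "low":    {"low": _OUT,  "medium": _OVER, "high": _OVER},
    "medium": {"low": _OVER, "medium": _OVER, "high": _IN},
    "high":   {"low": _OVER, "medium": _IN,   "high": _IN},
}


def required_oversight(probability: str, severity: str) -> Oversight:
    """Look up the oversight level for a (probability, severity) rating of harm."""
    try:
        return HARM_MATRIX[probability][severity]
    except KeyError:
        raise ValueError(
            f"unknown rating: probability={probability!r}, severity={severity!r}"
        ) from None


if __name__ == "__main__":
    print(required_oversight("high", "medium").value)  # human-in-the-loop
```

A real assessment would calibrate the scale and each cell to the use case at hand; keeping the mapping in a single lookup table simply makes that decision explicit and easy to review.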

Following the first release in 2019, the PDPC released the second edition of the MAIGF in 2020, with more industry examples and conceptual clarifications (a primer is also available here). The second release also included:

- A guide to implementing the MAIGF, developed in collaboration with the WEF’s Center for the Fourth Industrial Revolution with contributions from 60 organizations.

- Two volumes of use cases (here and here) demonstrating how local and international organizations of different sectors and sizes have implemented or aligned their AI governance practices with all sections of the MAIGF.

It’s a lot of content, and one might feel overwhelmed at first glance. Yet the PDPC has supported nearly 200 organizations in understanding how this governance model can be implemented and how it brings value to private organizations. Whether used as a reflection piece or as a fully integrated framework, the MAIGF helps democratize AI governance practices and fosters a culture of efficient and responsible innovation. But wait, there’s more!

Step 2: AI Governance for Financial Services

In 2018, the Monetary Authority of Singapore (MAS) developed its own AI governance guidelines. Naturally, they are more detailed and precise than the PDPC’s, as they target Singapore’s financial sector. They provide a very good example of how the MAIGF could be complemented or adapted for a specific sector.

First, MAS released the Principles to Promote Fairness, Ethics, Accountability and Transparency (FEAT) in the Use of Artificial Intelligence and Data Analytics in Singapore’s Financial Sector. The document lists 14 principles specific to financial products and services, organized around four main concepts (FEAT):

- Fairness: AI systems’ use should be justified and reviewed.

- Ethics: AI-driven decisions should be held to the same ethical standards as human-driven decisions.

- Accountability: AI systems should be accountable internally and externally.

- Transparency: AI systems and how they are built should be explained to increase public confidence.

These principles have paved the way for a dedicated framework, Veritas, to promote the responsible adoption of AI and data analytics in financial services.

This framework is particularly relevant because it tackles the challenges of implementing the FEAT principles. The first phase of the project aims to address the fairness principles:

- The first edition focuses on developing fairness metrics for popular use cases with a high propensity for bias, like credit risk scoring and customer marketing. Again, the framework is being developed with the help of a consortium that brings together key players such as HSBC and United Overseas Bank (UOB).

- The second edition focuses on building similar metrics for two insurance use cases: predictive underwriting and fraud detection.
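To give a feel for what a fairness metric looks like in practice, the sketch below computes two common group-fairness measures for a toy credit approval model: the demographic parity difference (the gap in approval rates between two groups) and the equal opportunity difference (the gap in approval rates among applicants who actually repaid). These are widely used metrics chosen purely for illustration; we are not claiming they are the exact metrics or code published by Veritas, and the `Record` fields and toy data are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One scored applicant (hypothetical fields for illustration)."""
    group: str       # value of the protected attribute, e.g. "A" or "B"
    approved: bool   # the model's decision
    repaid: bool     # observed outcome, used as ground truth


def _rate(rows: list[Record]) -> float:
    """Share of rows the model approved; refuses to divide by zero."""
    if not rows:
        raise ValueError("no records for this group")
    return sum(r.approved for r in rows) / len(rows)


def approval_rate(records: list[Record], group: str) -> float:
    return _rate([r for r in records if r.group == group])


def true_positive_rate(records: list[Record], group: str) -> float:
    # Among applicants who did repay, the share the model approved.
    return _rate([r for r in records if r.group == group and r.repaid])


def fairness_report(records: list[Record], group_a: str, group_b: str) -> dict[str, float]:
    return {
        # Demographic parity difference: gap in overall approval rates.
        "demographic_parity_diff": approval_rate(records, group_a)
        - approval_rate(records, group_b),
        # Equal opportunity difference: gap in approval rates among good payers.
        "equal_opportunity_diff": true_positive_rate(records, group_a)
        - true_positive_rate(records, group_b),
    }


# Hypothetical toy data: four applicants per group.
TOY_RECORDS = [
    Record("A", True, True), Record("A", True, True),
    Record("A", False, True), Record("A", True, False),
    Record("B", True, True), Record("B", False, True),
    Record("B", False, False), Record("B", False, True),
]

if __name__ == "__main__":
    report = fairness_report(TOY_RECORDS, "A", "B")
    print({k: round(v, 2) for k, v in report.items()})
    # {'demographic_parity_diff': 0.5, 'equal_opportunity_diff': 0.33}
```

A value of 0 means both groups are treated alike on that measure. Which metric matters, and how large a gap is tolerable, depends on the use case, which is precisely the kind of judgment the FEAT fairness principles ask institutions to justify and review.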

The white papers and the open source code for the first edition are available here. Phase two of Veritas, tackling FEAT’s ethics principles, is already planned.

Although MAS’s output is less extensive than the PDPC’s, it is still very impressive. Looking back at the first blog post in this series, we can see a trend emerging: national financial services actors are anticipating upcoming regulation by adopting an operational approach to AI governance and compliance.

Step 3: Reflecting on How These Frameworks Fit Within the Larger Galaxy

The Singaporean frameworks are just a few among many. If you haven’t found what you were looking for, there are more frameworks to help you define AI principles and implement them successfully. This blog post series is full of resources: click here for some European-focused advice and here for some North American advice (or here too).

If there is one thing to learn from this series, it’s that this framework is another step toward structuring how AI is governed. Simply put, it’s another market signal that AI governance is transitioning from a research trend to a corporate standard. All initiatives are different, but ultimately they make the same demand of private players when it comes to AI: establish a structure to identify core principles and enforce them across your AI project lifecycle.

There is a second thing to learn from this blog series. You might be wondering why I am spending so much time delving into AI regulation around the world on behalf of Dataiku. The answer is pretty straightforward: without AI principles and their initial implementation within a governance framework, it’s difficult to leverage any governance platform capabilities. If you don’t know what risks you are looking at, it’s difficult to address them with the appropriate tooling.

Then comes the tooling question. Dataiku’s role is to help companies build and deploy AI at scale. Originally, it applied a platform logic to centralize data efforts across teams and empower them to deliver more projects. The same logic applies to governance, as it helps address fundamental questions about AI:

- Where are my models?

- How are my models performing?

- Are my models aligned with my business objectives and principles?

- Do the models carry any risks for my organization?
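As a rough illustration of how those four questions could translate into tooling, here is a minimal, hypothetical model registry in Python. The `ModelRecord` fields, the FEAT-based principle checklist, and the 0.8 accuracy threshold are assumptions made for this sketch; they are not Dataiku’s API or data model.

```python
from dataclasses import dataclass, field

# Principles every model must be reviewed against (here, MAS's FEAT concepts; an assumption).
REQUIRED_PRINCIPLES = {"fairness", "ethics", "accountability", "transparency"}


@dataclass
class ModelRecord:
    """One entry in a hypothetical model registry."""
    name: str
    owner: str
    deployment: str                  # Where are my models?
    accuracy: float                  # How are my models performing?
    principles_reviewed: set[str] = field(default_factory=set)  # Aligned with my principles?
    risk_level: str = "unassessed"   # Do the models carry any risks?


def governance_gaps(record: ModelRecord, min_accuracy: float = 0.8) -> list[str]:
    """Return the open governance issues for one model (an empty list means none found)."""
    gaps = []
    if record.accuracy < min_accuracy:
        gaps.append(f"accuracy {record.accuracy:.2f} below {min_accuracy:.2f}")
    missing = REQUIRED_PRINCIPLES - record.principles_reviewed
    if missing:
        gaps.append("principles not reviewed: " + ", ".join(sorted(missing)))
    if record.risk_level == "unassessed":
        gaps.append("risk not assessed")
    return gaps


if __name__ == "__main__":
    registry = [
        ModelRecord("churn-v2", "marketing", "prod-eu", 0.86, {"fairness", "transparency"}, "medium"),
        ModelRecord("credit-score-v1", "risk", "prod-sg", 0.74),
    ]
    for record in registry:
        print(record.name, governance_gaps(record))
```

The point of the design is that the principles come first: the registry can only flag gaps against a checklist the organization has already defined, which is why defining AI principles precedes choosing the tooling.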

Governance is the next logical step to build resilience in AI and address the new set of guidelines and regulations. Dataiku has key capabilities to operationalize existing AI governance guidelines and navigate the upcoming regulatory environment. A detailed blog post is coming soon!

All this to say, we leave it to our customers to define and develop their own AI frameworks. We provide the technology and tools to govern AI and support compliance with upcoming regulations. It's a must-have for any active AI scaling journey!